Malware 'Morris II' that destroys the security functions of chat AI such as ChatGPT and Gemini has appeared

Researchers created

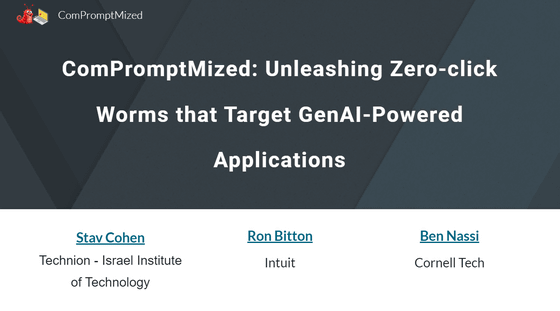

ComPromptMized

https://sites.google.com/view/compromptmized

New Malware Worm Can Poison ChatGPT, Gemini-Powered Assistants | PCMag

https://www.pcmag.com/news/malware-worm-poison-chatgpt-gemini-powered-assistants

Morris II is a zero-click worm that targets applications that utilize generative AI, and was developed by researchers from Israel Institute of Technology, Cornell Tech, and software developer Intuit . The name 'Morris II' comes from ' Morris ', a worm that was spread on the early Internet.

Morris II can infect a target device without any user intervention, and while spreading from the infected device to other devices, it steals data from the device and infects the device with malware. You can The research team announced that they successfully used Morris II to launch attacks against ChatGPT, Gemini, and the open source AI model LLaVA.

The research team describes the development of Morris II as follows: ``Over the past year, many interconnected generative AI ecosystems of semi-autonomous or fully autonomous agents have emerged that incorporate generative AI capabilities into their apps.'' Although existing research has highlighted the risks associated with the agent's generative AI layer (e.g. dialog poisoning, privacy leaks, jailbreaking), attackers have been able to exploit the agent's generative AI component and This raises the question of whether it is possible to develop malware that launches cyberattacks against the entire world.'

Most of the generation AI systems operate by inputting a prompt, but it is possible to break the rules applied to this prompt (such as blocking the generation of harmful content) with Morris II. .

The research team says, ``An attacker inserts a prompt into the input that, when processed by the generative AI model, prompts the model to replicate the input as an output (replication) and perform malicious activity (payload).'' This shows that it is possible.' AI worms like Morris II have not been discovered at the time of writing, but multiple researchers have pointed out that they are a security risk that startups, developers, and technology companies should be concerned about.

The research team explains that Morris II employs ``adversarial self-replication prompts,'' which are ``prompts that trigger the generative AI model to output another prompt in response.'' In other words, the AI system will be instructed to generate a series of further instructions in the response. Therefore, the research team describes the ``hostile self-replication prompt'' as ``almost similar to traditional SQL injection attacks and buffer overflow attacks .''

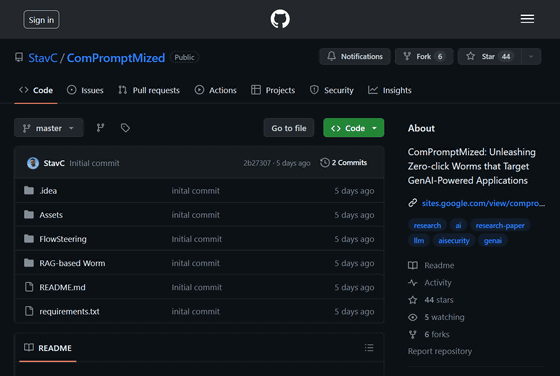

In addition, the code for Morris II proof of concept is published on GitHub.

GitHub - StavC/ComPromptMized: ComPromptMized: Unleashing Zero-click Worms that Target GenAI-Powered Applications

https://github.com/StavC/ComPromptMized

Related Posts: