Apple releases "MGIE," an image-editing AI model that edits photos from plain-language instructions, and I tried the public demo

Apple, in collaboration with the University of California, Santa Barbara, has released MGIE, an AI model that edits photos based on instructions written in natural language.

[2309.17102] Guiding Instruction-based Image Editing via Multimodal Large Language Models

apple/ml-mgie

https://github.com/apple/ml-mgie

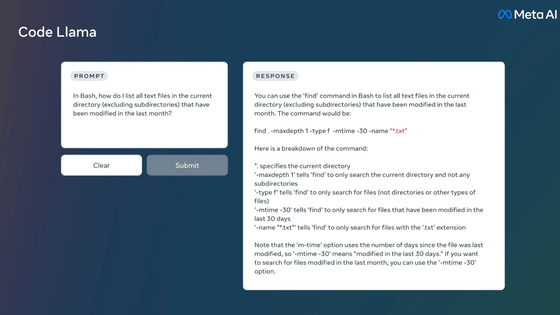

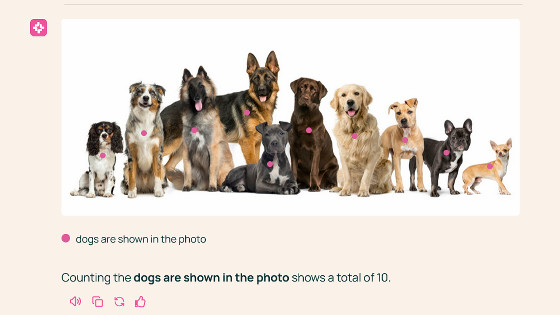

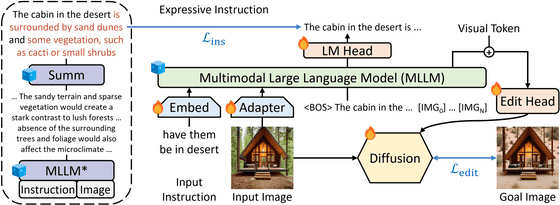

MGIE, short for MLLM-Guided Image Editing, can perform a wide range of image-editing tasks, such as changing the shape of objects in an image or adjusting brightness. It is built on a multimodal large language model (MLLM), which understands both images and natural language, so users only need to describe the edit they want in plain language. From the user's input, the MLLM generates "expressive instructions," more detailed descriptions of the edit, and these guide the model that actually edits the image toward an appropriate result.
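To make the two-stage flow concrete, here is a minimal Python sketch of the data flow described above: the user's short instruction is first expanded, and only the expanded version is handed to the editor. The names `guided_edit`, `InstructionExpander`, `ImageEditor`, `fake_expand`, and `fake_edit` are hypothetical and do not come from the ml-mgie repository. Images are treated as opaque bytes and the expressive instruction is passed as plain text; according to the paper, the real model passes learned visual guidance from the MLLM to a diffusion model, so this is a simplification of the idea, not MGIE's implementation.

```python
from typing import Protocol, Tuple


class InstructionExpander(Protocol):
    """Hypothetical stand-in for the MLLM: turns a terse instruction into an expressive one."""

    def __call__(self, image: bytes, instruction: str) -> str: ...


class ImageEditor(Protocol):
    """Hypothetical stand-in for the model that actually edits the image."""

    def __call__(self, image: bytes, guidance: str) -> bytes: ...


def guided_edit(
    image: bytes,
    instruction: str,
    expand: InstructionExpander,
    edit: ImageEditor,
) -> Tuple[str, bytes]:
    """Two-stage, instruction-guided edit (simplified sketch, not MGIE's code).

    1. The expander sees both the image and the user's instruction and
       produces a more detailed "expressive instruction".
    2. The editor applies that expressive instruction, not the raw one.
    Returns the expressive instruction together with the edited image.
    """
    expressive = expand(image, instruction)
    return expressive, edit(image, expressive)


# Toy fakes (hypothetical) that only illustrate the call pattern.
def fake_expand(image: bytes, instruction: str) -> str:
    # Canned answer echoing the pizza example; a real MLLM would look at the image.
    return "The pizza includes vegetable toppings, such as tomatoes and herbs."


def fake_edit(image: bytes, guidance: str) -> bytes:
    # Pretend "edit": tag the bytes so the change is visible.
    return image + b" + vegetables"


if __name__ == "__main__":
    expressive, edited = guided_edit(b"pizza", "make it more healthy", fake_expand, fake_edit)
    print(expressive)
    print(edited)
```

The point of the split is that the editor never sees the vague "make it more healthy"; it only sees the spelled-out version the first stage produced.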

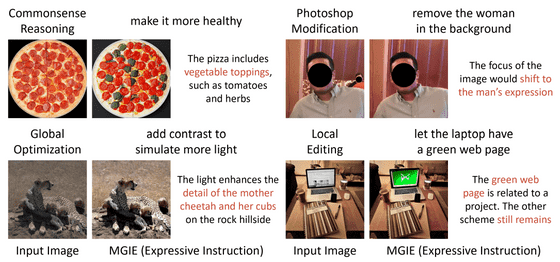

Examples of edits made with MGIE are shown below. In each image pair, the left image is the original and the right is MGIE's output. In the pizza example at upper left, the ambiguous instruction "make it more healthy" led MGIE to generate the detailed instruction "The pizza includes vegetable toppings, such as tomatoes and herbs," and vegetable toppings were added accordingly. In the example at upper right, the instruction "remove the woman in the background" produced exactly the intended result. MGIE can also brighten an image or change what is displayed on a PC screen within the image.

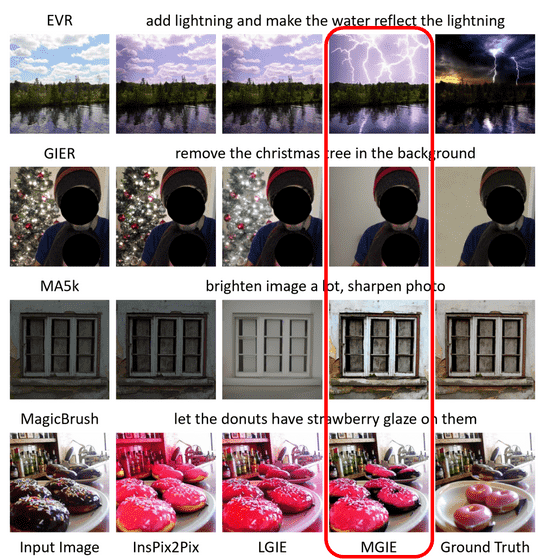

Here is a comparison with the existing methods "InsPix2Pix" and "LGIE." "Input Image" on the left is the input, and "Ground Truth" on the right is the correct target output. MGIE clearly edits as instructed, for example by rendering the lightning properly and erasing the Christmas tree.

The MGIE model is distributed as delta ("differential") weights relative to LLaVA under a CC-BY-NC license, which does not permit commercial use. Because the weights are derived from LLaVA, using the MGIE model also requires complying with LLaVA's license, and since CLIP, LLaMA, Vicuna, and GPT-4 were used to train LLaVA, their terms apply as well.
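Delta weights store only the difference between the released model and its base, so the full model is recovered by adding the base weights back, parameter by parameter. The sketch below shows that arithmetic under stated assumptions: weights are modeled as a dict from parameter name to a flat list of floats, and the function name `apply_delta` plus its rules for size mismatches (for example, an embedding table extended with new tokens) and for modules that exist only in the delta are hypothetical choices modeled on how delta releases are commonly merged, not code from the ml-mgie repository.

```python
from typing import Dict, List

# Hypothetical toy representation: parameter name -> flattened values.
Weights = Dict[str, List[float]]


def apply_delta(base: Weights, delta: Weights) -> Weights:
    """Rebuild full weights as base + delta (illustrative sketch).

    For each parameter in the delta (the output contains exactly these):
      * same size as in base      -> element-wise base + delta
      * larger than in base       -> base is added to the leading elements only
                                     (assumed: e.g. a vocabulary with extra tokens)
      * absent from base          -> delta is taken as-is
                                     (assumed: a new module stored in full)
    The inputs are not modified.
    """
    full: Weights = {}
    for name, d in delta.items():
        if name not in base:
            full[name] = list(d)
            continue
        b = base[name]
        if len(b) > len(d):
            raise ValueError(f"{name}: base has {len(b)} values but delta only {len(d)}")
        merged = list(d)  # copy so the caller's delta stays untouched
        for i, value in enumerate(b):
            merged[i] += value
        full[name] = merged
    return full


if __name__ == "__main__":
    base = {"layer.weight": [1.0, 2.0], "embed": [0.5]}
    delta = {"layer.weight": [0.25, -1.0], "embed": [0.5, 3.0], "projector": [7.0]}
    print(apply_delta(base, delta))
```

Real checkpoints are tensors loaded with a framework such as PyTorch, and actually reconstructing MGIE should follow the steps in the ml-mgie README; the toy dicts here only illustrate why the LLaVA base weights (and therefore LLaVA's terms) are needed at all.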

A demo of MGIE is available at the link below, and you can actually try out image editing using MGIE.

MLLM-guided Image Editing (MGIE) - a Hugging Face Space by tsujuifu

https://huggingface.co/spaces/tsujuifu/ml-mgie

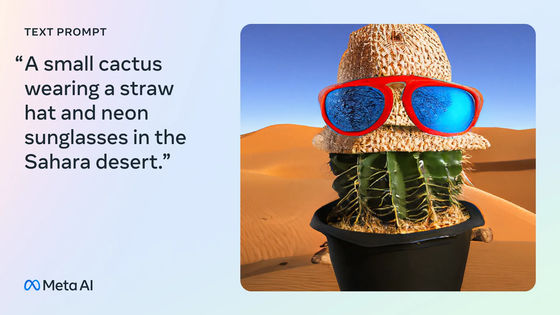

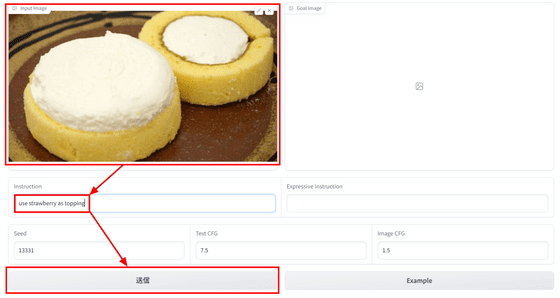

This time, I edited a photo of a roll cake.

Drag and drop the image into the 'Input Image' field, enter 'use strawberry as topping' in the 'Instructions' field, and click 'Send'.

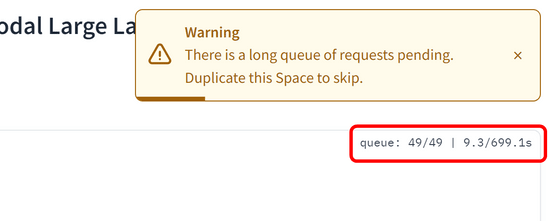

When many people are using the demo, requests are queued. At the time of writing, there were about 50 people in line, with an estimated wait of 700 seconds.
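As a rough reading of those two numbers, assuming the queue is served one request at a time at a steady rate (an assumption; the demo page does not say how it schedules jobs), the estimate works out to about 14 seconds per edit:

$$
\frac{700\ \text{s}}{50\ \text{requests}} = 14\ \text{s per request}
$$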

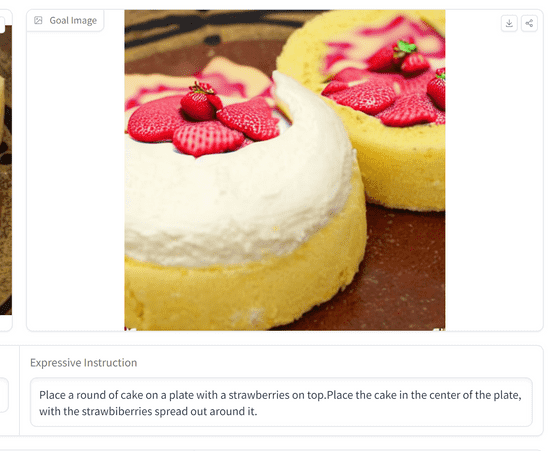

After a short wait, the output appears as shown below. MGIE generated the detailed instruction "Place a round of cake on a plate with a strawberries on top. Place the cake in the center of the plate, with the strawberries spread out around it.", in other words, put strawberries on top and spread more strawberries around the cake.

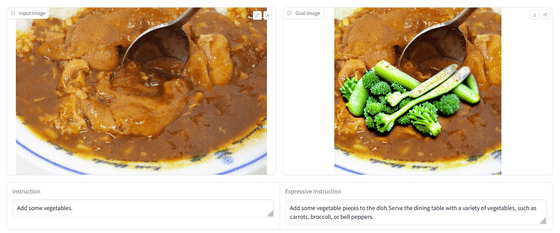

When I entered a photo of

Apple has also made clear its focus on generative AI: at its financial results announcement for the first quarter of fiscal 2024, held on February 1, the company emphasized that it is investing a tremendous amount of time and effort in AI.

Apple CEO Tim Cook reveals "Details of generative AI features will be announced in the second half of 2024" at the fiscal 2024 first quarter financial results announcement - GIGAZINE


in Review, Software, Web Application, Posted by log1d_ts