Google DeepMind announces "AlphaGeometry," an AI that can solve International Mathematical Olympiad-level geometry problems, demonstrating performance close to that of human gold medalists

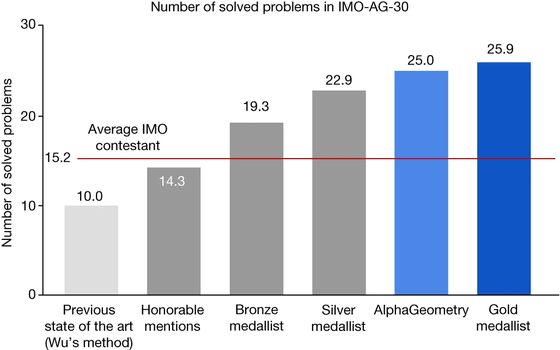

Google DeepMind has announced AlphaGeometry, an AI that can solve complex geometry problems at the level of the International Mathematical Olympiad. In a benchmark, AlphaGeometry solved 25 of 30 geometry problems from the International Mathematical Olympiad within the time limit.

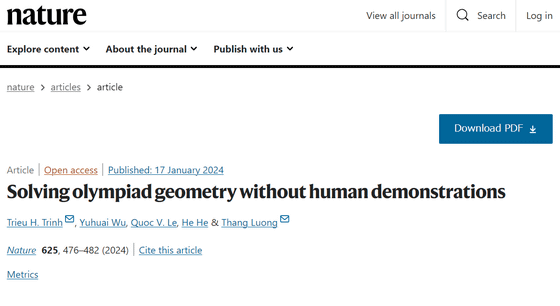

Solving olympiad geometry without human demonstrations | Nature

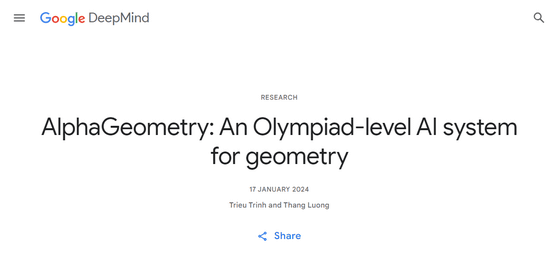

AlphaGeometry: An Olympiad-level AI system for geometry - Google DeepMind

https://deepmind.google/discover/blog/alphageometry-an-olympiad-level-ai-system-for-geometry/

Large language models can generate natural sentences as if they were written by a human. This is possible because, using an architecture called the Transformer, they generate text probabilistically according to the context. However, solving arithmetic and mathematical problems requires building up logic step by step, so large language models are said to be weak at solving complex mathematical problems.
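To make "generating text probabilistically according to the context" concrete, here is a minimal toy sketch. It is not how a Transformer is implemented: the table `NEXT_WORD_PROBS` and the functions `sample_next` and `generate` are hypothetical, and the "context" here is only the previous word, whereas a real model conditions on the whole preceding text.

```python
import random

# Hypothetical toy "model": for each context word, a probability table
# over possible next words. A real language model learns such
# probabilities from data and conditions on far more context.
NEXT_WORD_PROBS = {
    "<start>": {"the": 0.6, "a": 0.4},
    "the": {"triangle": 0.5, "circle": 0.5},
    "a": {"triangle": 0.7, "circle": 0.3},
    "triangle": {"<end>": 1.0},
    "circle": {"<end>": 1.0},
}


def sample_next(context, rng):
    """Draw the next word at random, weighted by its probability given the context."""
    table = NEXT_WORD_PROBS[context]
    words = list(table)
    weights = [table[w] for w in words]
    return rng.choices(words, weights=weights, k=1)[0]


def generate(rng, max_len=10):
    """Generate words one at a time until the end marker is sampled."""
    words = []
    context = "<start>"
    for _ in range(max_len):
        nxt = sample_next(context, rng)
        if nxt == "<end>":
            break
        words.append(nxt)
        context = nxt
    return words


if __name__ == "__main__":
    print(" ".join(generate(random.Random(0))))
```

Each word is chosen by weighted chance rather than derived by rule, which is why this style of generation reads fluently but offers no guarantee that a chain of logical steps is valid.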

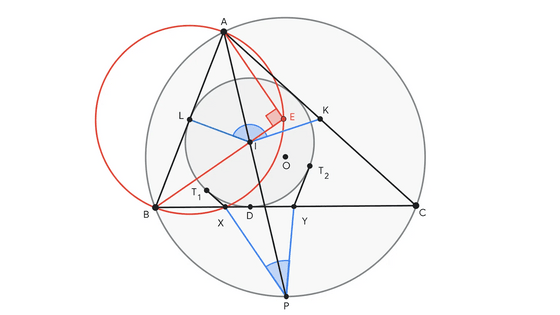

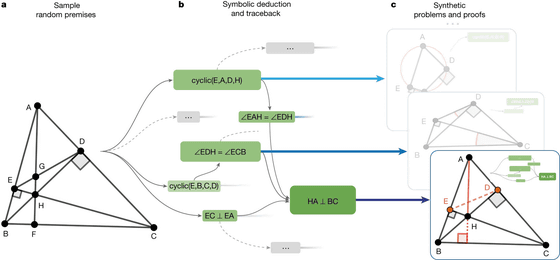

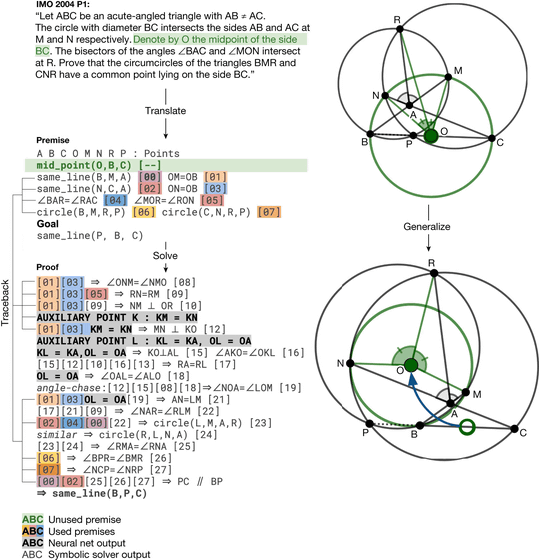

According to Google DeepMind, AlphaGeometry is a model that combines a neural language model and a symbolic deduction engine, and the concept is 'thinking fast and slow.'

Language models are good at identifying common patterns and relationships in data, allowing them to quickly predict potentially useful elements, but they lack the ability to reason rigorously and explain their conclusions. Symbolic deduction engines, on the other hand, are based on formal logic, so they develop arguments according to clear rules. In other words, the neural language model is designed to provide quick, intuitive ideas, while the symbolic deduction engine makes more careful and rational decisions.
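The division of labor described above can be illustrated with a small sketch. This is not AlphaGeometry's code. It assumes, for illustration only, that the two parts alternate: a rule-based engine deduces everything it can, and when it gets stuck a "proposer" suggests an extra fact to add. All names (`deduce`, `propose`, `solve`, `FACTS`, `RULES`, `GOAL`, `CANDIDATES`) are hypothetical, facts are plain strings, and the proposer is a fixed list standing in for a language model.

```python
def deduce(facts, rules):
    """Forward chaining: apply rules until no new fact can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if premises <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


def propose(known, candidates):
    """Stand-in for the language model: return the first suggestion not yet known."""
    for candidate in candidates:
        if candidate not in known:
            return candidate
    return None


def solve(facts, rules, goal, candidates, max_rounds=5):
    """Alternate careful deduction with quick suggestions until the goal is reached."""
    known = set(facts)
    added = []
    for _ in range(max_rounds):
        known = deduce(known, rules)
        if goal in known:
            return True, added
        suggestion = propose(known, candidates)
        if suggestion is None:
            return False, added
        known.add(suggestion)
        added.append(suggestion)
    return goal in deduce(known, rules), added


# Toy problem: show the base angles of an isosceles triangle are equal.
FACTS = {"AB = AC"}
RULES = [
    (frozenset({"AB = AC", "D is midpoint of BC"}), "triangle ABD congruent to ACD"),
    (frozenset({"triangle ABD congruent to ACD"}), "angle ABC = angle ACB"),
]
GOAL = "angle ABC = angle ACB"
CANDIDATES = ["D is midpoint of BC"]

if __name__ == "__main__":
    print(solve(FACTS, RULES, GOAL, CANDIDATES))
```

In this toy problem the rules alone cannot reach the goal from `AB = AC`; only after the proposer adds the midpoint `D` does the deduction go through. The rule-based part never guesses, and the proposer never has to justify its suggestion.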

The following are the results of a benchmark on 30 problems posed at the International Mathematical Olympiad. The conventional model (far left) solved about 10 problems, but AlphaGeometry (second from the right) solved 25. According to Google DeepMind, International Mathematical Olympiad gold medalists (far right) solved 25.9 problems on average, so AlphaGeometry's problem-solving ability can be said to be close to that of a human gold medalist.

Mathematician Ngo Bao Chau, who won two consecutive gold medals at the International Mathematical Olympiad and also won the Fields Medal in 2010, said, "It is amazing that AI researchers are working on the geometry problems of the International Mathematical Olympiad. I think it makes sense, because it's a bit like chess in the sense that there are fewer rational moves at each step. I'm surprised that Google DeepMind was able to pull this off. This is an impressive achievement."

Google DeepMind says, "The AI we've developed is approaching International Mathematical Olympiad gold medalist level in geometry, but we have our sights set on achieving even greater things. We train the AI from scratch using large-scale synthetic data," adding, "Given the wide range of possibilities for what AI can do, this approach has the potential to shape how future AI discovers new knowledge, not only in mathematics but also beyond."

The AlphaGeometry code and model are published in the GitHub repository below.

GitHub - google-deepmind/alphageometry

https://github.com/google-deepmind/alphageometry
