OpenAI announces the establishment of a Safety Advisory Group to ensure that its AI does not pose a threat to the survival of humanity, allowing the board of directors to block the release of an AI model even if management claims the model is safe

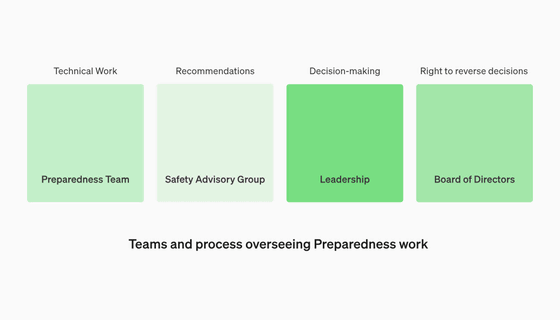

OpenAI has revised its internal safety processes to guard against the risks and threats posed by harmful AI, and announced the establishment of a 'Safety Advisory Group' that sits above its technical teams. The Safety Advisory Group will continuously test AI models and make recommendations to management and the board of directors if it identifies any risks.

Preparedness

https://openai.com/safety/preparedness

Preparedness Framework (Beta) | OpenAI

(PDF file) https://cdn.openai.com/openai-preparedness-framework-beta.pdf

We are systemizing our safety thinking with our Preparedness Framework, a living document (currently in beta) which details the technical and operational investments we are adopting to guide the safety of our frontier model development. https://t.co/vWvvmR9tpP

— OpenAI (@OpenAI) December 18, 2023

OpenAI buffs safety team and gives board veto power on risky AI | TechCrunch

https://techcrunch.com/2023/12/18/openai-buffs-safety-team-and-gives-board-veto-power-on-risky-ai/

In October 2023, OpenAI announced that it had formed a 'Preparedness' team to analyze the catastrophic risks of AI and protect humanity. The Preparedness team, led by Aleksander Madry, director of the Massachusetts Institute of Technology's Center for Deployable Machine Learning, tracks, assesses, and forecasts catastrophic risks.

OpenAI, the developer of ChatGPT, forms a specialized team to analyze 'catastrophic risks of AI' and protect humanity - GIGAZINE

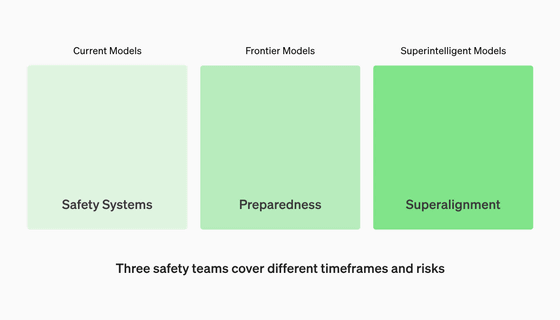

OpenAI updated its page about the Preparedness team on December 18, 2023. Alongside the Preparedness team, which investigates AI models under development, the 'Safety Systems' team examines the risks of current models, and a team called 'Superalignment' examines the risks of superintelligent models, such as artificial general intelligence, that are expected to be put into practical use in the future. OpenAI stated that these three teams together will ensure the safety of its AI.

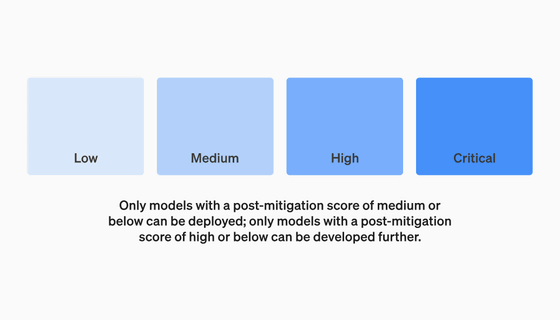

OpenAI has also divided its safety evaluation into four levels, 'Low', 'Medium', 'High', and 'Critical', and set thresholds on them: if the risk of a model under development exceeds 'High', development will be halted, and if it exceeds 'Medium', the model cannot be released. Evaluation criteria for each level are summarized in

OpenAI also announced the creation of the Safety Advisory Group, a division that oversees the technical work and the operational structure for safety decision-making. Working alongside the Preparedness team, the Safety Advisory Group will evaluate and report on safety across various departments, including OpenAI's technical division.

The Safety Advisory Group sits above OpenAI's technical development teams and produces regular reports on AI models, which are submitted to both management and the board of directors. Management decides whether to release an AI model based on the Safety Advisory Group's reports, but the board of directors can veto that decision. In other words, even if management ignores a report and decides on its own to release a high-risk AI model, the board can use the same report to overturn that decision.

However, IT news site TechCrunch points out that after CEO Sam Altman's surprise dismissal and subsequent return in mid-November 2023, the board members who had opposed Altman were removed from the board. 'If the Safety Advisory Group makes a recommendation and the CEO makes a decision based on that information, will a board friendly to Altman really put the brakes on it? And if it does, how would we know? There is little mention of transparency beyond a pledge that OpenAI will be audited by independent third parties,' TechCrunch wrote, expressing skepticism about whether the Safety Advisory Group would actually function in practice.
