OpenAI, creator of ChatGPT, forms a dedicated team to analyze 'catastrophic risks of AI' and protect humanity

In recent years, as AI development has advanced rapidly, concerns about the risks posed by AI have grown as well, and Sam Altman, CEO of

Frontier risk and preparedness

https://openai.com/blog/frontier-risk-and-preparedness

OpenAI forms team to study 'catastrophic' AI risks, including nuclear threats | TechCrunch

https://techcrunch.com/2023/10/26/openai-forms-team-to-study-catastrophic-risks-including-nuclear-threats/

OpenAI forms new team to assess 'catastrophic risks' of AI - The Verge

https://www.theverge.com/2023/10/26/23933783/openai-preparedness-team-catastrophic-risks-ai

'We believe that "frontier AI models," which go beyond the capabilities of today's most advanced AI models, have the potential to benefit all of humanity. But they also pose increasingly serious risks,' OpenAI said in a blog post on October 26, 2023. The company noted that managing the catastrophic risks of frontier AI models requires answering the following questions:

・How dangerous are frontier AI models when misused, both now and in the future?

・How can we build a robust framework for monitoring, evaluating, predicting, and protecting against the dangerous capabilities of frontier AI models?

・If the weights of a frontier AI model were stolen, how could a malicious actor exploit them?

To minimize the catastrophic risks of frontier AI models as these models continue to advance, OpenAI announced that it has formed a new team called 'Preparedness.' The Preparedness team is led by Aleksander Madry, former director of the Center for Deployable Machine Learning at the Massachusetts Institute of Technology.

The Preparedness team will help track, evaluate, forecast, and protect against catastrophic risks posed both by AI models developed in the near future and by artificial general intelligence (AGI) further ahead. These risks span multiple categories, including individualized persuasion (the ability to deceive and persuade humans), cybersecurity, chemical, biological, radiological, and nuclear (CBRN) threats, and 'autonomous replication and adaptation' (ARA). The team's mission also includes developing and maintaining a risk-informed development policy (RDP) and establishing a governance structure for accountability and oversight throughout the AI development process.

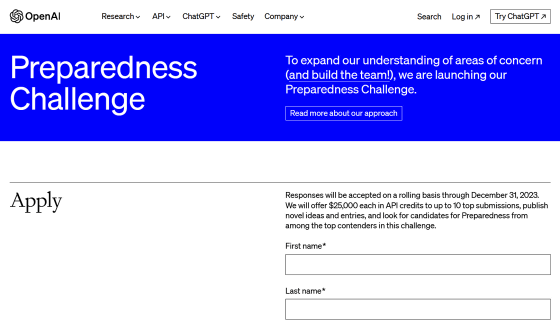

OpenAI is also running a contest soliciting 'AI concerns that the Preparedness team should address' to help identify new and lesser-known AI risks. Up to 10 top entries will be awarded $25,000 (approximately 3.76 million yen) in API credits.

The contest entry requirements state: 'Suppose you had unlimited access to OpenAI's Whisper (transcription), Voice (speech synthesis), GPT-4V (vision-capable language model), and DALL-E 3 (image generation) models. Consider the most unique, yet still probable, potentially catastrophic misuse of these models. You might consider misuse related to the categories discussed in the blog post, or another category. For example, a malicious actor might use GPT-4, Whisper, and Voice to socially engineer workers at critical infrastructure facilities into installing malware, allowing the power grid to be shut down.'

Preparedness Challenge

https://openai.com/form/preparedness-challenge

In addition, in July 2023 OpenAI joined AI developers including Anthropic, Google, and Microsoft in establishing the Frontier Model Forum, an industry body that promotes AI safety. OpenAI also announced that Chris Meserole, former director of artificial intelligence and emerging technology research at the Brookings Institution, has been appointed as the Frontier Model Forum's first executive director.

Frontier Model Forum updates

https://openai.com/blog/frontier-model-forum-updates

The Frontier Model Forum, in collaboration with philanthropic partners, has also launched a new AI Safety Fund to support researchers at academic institutions, research institutes, and startups. The fund's initial commitments total more than $10 million (approximately 1.5 billion yen), and the money will support the development of techniques for evaluating and testing potentially dangerous capabilities of frontier AI models.
