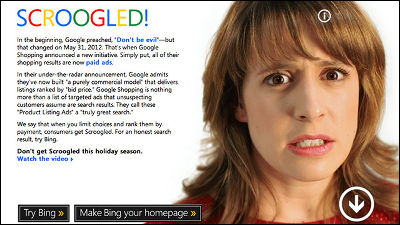

Microsoft Copilot is reported to be unsuitable for questions about politics because its answers draw on election-related misinformation, outdated information, and conspiracy theories.

ChatGPT and Microsoft's Copilot (Bing Chat) are chat services that respond in natural language to human input. Organizations that evaluated such services as "tools for extracting correct information" published a report stating that Copilot should not be asked for information about politics.

Prompting Elections: The Reliability of Generative AI in the 2023 Swiss and German Elections

Microsoft's Bing AI gives false election info in Europe, study finds - The Washington Post

https://www.washingtonpost.com/technology/2023/12/15/microsoft-copilot-bing-ai-hallucinations-elections/

AI Forensics and AlgorithmWatch, non-profit organizations that take a critical stance on AI, jointly conducted a study to see how Copilot presents information about elections.

Using the elections in Switzerland and Germany as case studies, the organizations asked Copilot for information on election dates, candidates, vote counts, and political opinions. They reported that in some instances Copilot provided incorrect information or avoided giving a clear answer.

For example, it listed a candidate who had withdrawn from the election as a potential candidate, fabricated a controversy about a candidate, and presented a website advocating a conspiracy theory as a source of information. AI Forensics and AlgorithmWatch found that a third of answers contained errors, and that Copilot avoided answering 40% of the time, sometimes blaming its sources for errors in the information.

The study also found that incorrect answers were most common when questions were asked in a language other than English. Answers to questions asked in German contained at least one factual error 37% of the time, compared to 24% for French.

The rate at which built-in safeguards against offensive or inappropriate responses took effect also varied by language: 39% in English and 35% in German, compared to 59% in French.

Behind the study is the concern that people may use AI to obtain information about elections. In an American opinion poll conducted in 2023, 15% of Americans said they might use AI to obtain information about the next presidential election, and it has become clear that members of Congress are also concerned that AI could spread incorrect information.

AI chat services generally warn users that they may provide incorrect information. Amin Ahmad, who develops AI language tools, said, "It's not at all surprising that Copilot misquotes sources. Copilot aside, major AIs can fail to produce accurate results even when asked to summarize a single document." However, he commented that the election-related error rate reported this time was higher than expected, and that although he is confident it will soon improve thanks to rapid advances in AI, the survey results are cause for concern.

in Software, Web Service, Posted by log1p_kr