Guidelines published on how judicial institutions should use AI

With AI increasingly used across society, the Courts and Tribunals Judiciary, which oversees judges in England and Wales, has published guidelines setting out how AI should be used by the judiciary.

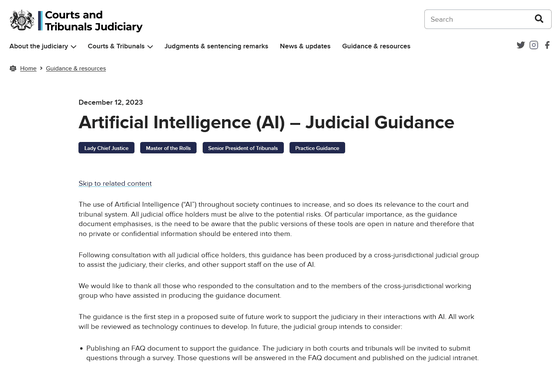

Artificial Intelligence (AI) - Judicial Guidance - Courts and Tribunals Judiciary

The guidelines are broadly divided into seven sections, and their contents are roughly as follows.

◆1: Understanding AI and its applications

Before using AI, you need a basic understanding of its capabilities and potential limitations.

For example, publicly available AI chatbots use algorithms to generate text based on the prompts they receive and the data they were trained on. What they produce is the text the model predicts to be the 'most likely combination of words', which is not necessarily an accurate answer.
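As a rough illustration of what 'predicting the most likely combination of words' means, here is a minimal Python sketch. It is a toy word-pair counter, not how any real chatbot is implemented; the function names `train_bigrams` and `most_likely_next` and the sample sentence are hypothetical and do not come from the guidance.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for every word, which words follow it in the training text."""
    counts = defaultdict(Counter)
    words = text.lower().split()
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def most_likely_next(counts, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical training text: the "model" only knows what appears here.
corpus = "the court heard the case and the court gave judgment"
model = train_bigrams(corpus)

# The prediction is the statistically likeliest continuation,
# not a checked statement of fact.
print(most_likely_next(model, "the"))
```

Even in this toy version, the output depends entirely on the training text: the model returns whatever followed a word most often, whether or not the result is true.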

Also, like other information available on the internet, AI tools are useful for finding material you would recognise as correct but do not have at hand. They are not suitable for research to find new information whose accuracy you cannot verify.

The quality of the answers also varies, depending on the nature of the prompts entered and how the relevant AI tools are used. Even with the best prompts, the information returned may be incomplete, inaccurate, or biased.

It should also be noted that many large language models are trained on material published on the internet, so the legal views they output are often based on American law.

◆2: Protection of confidentiality and privacy

Do not enter any information that is not already in the public domain into a publicly available AI chatbot. In particular, do not enter any personal or confidential information. Any information entered into an AI chatbot should be treated as having been published to the whole world.

Currently (as of December 12, 2023 local time), publicly available AI chatbots remember all of a user's questions and any other information entered, and that information can be used to respond to queries from other users. As a result, information you entered in the past may become public.

If you have a choice, you should disable your AI chatbot history. You can choose to disable history in ChatGPT and Google Bard, but not yet in Bing Chat.

Please note that some AI platforms, especially smartphone apps, may ask for permission to access various information on your device. In such circumstances, you should refuse all such permission requests.

If confidential or personal information is unintentionally disclosed, you should contact your supervising judge or the judiciary. If personal information is involved, please report it as a data incident. For information on how to report a data incident to law enforcement, please see the data breach notification form on our intranet.

In the future, AI tools designed for use in courts and tribunals may become available, but until that happens, you should treat every AI tool as if anything you enter into it is public, and handle it with care.

◆3: Ensuring accountability and accuracy

Information provided by AI tools may be inaccurate, incomplete, or outdated. Even if the output purports to represent English law, it may not do so.

Some of the issues that arise when using AI tools include:

- Making up fictitious cases, references, or statements, or referring to laws, provisions, or legal documents that do not exist.

- Providing false or misleading information about the law or how it applies.

- Making factual mistakes.

◆4: Be aware of bias

AI tools based on large language models generate responses based on the datasets they were trained on. This means their output inevitably reflects any mistakes and biases contained in the training data.

Always consider the possibility of this error or bias and the need to correct it.

◆5: Maintaining security

Please do your best to maintain your own security and that of the court or tribunal.

When accessing AI tools, please use a business device rather than a personal device. Also, please use your work email address.

Paid services are generally found to be more secure than free services, so if you have a paid subscription plan, use it. However, avoid using third-party companies that license AI platforms from other companies, as they may not be reliable when it comes to handling user information.

◆6: Take responsibility

Employees of judicial institutions are personally responsible for materials produced in their name.

Judges are generally not required to describe the research or preparatory work, including any use of AI, that went into reaching their decision. Provided these guidelines are properly followed, generative AI can be a useful auxiliary tool.

If clerks, legal assistants, or other staff use AI tools in their work, you should talk to them to make sure they are using the tools appropriately and taking steps to reduce the risks.

◆7: Be aware that court/tribunal users may be using AI tools

Some AI tools are already used in the legal field, such as TAR (Technology Assisted Review), which is used in electronic discovery.

All legal representatives have a duty to ensure that the materials they submit to the court are accurate and appropriate. Provided AI is used responsibly, there is no reason why a legal representative should have to mention its use, although this depends on the context.

One example of AI being misused in a judicial setting is a case in which a lawyer used ChatGPT to prepare a document that cited non-existent precedents and included a presiding judge's opinion that did not exist.


in Note, Posted by logc_nt