Meta launches AI safety project “Purple Llama”, providing tools and evaluation systems to increase the safety of open generative AI models

Meta announced the launch of Purple Llama, a project that will provide tools and evaluation systems to help developers build products using open generative AI.

Introducing Purple Llama for Safe and Responsible AI Development | Meta

Announcing Purple Llama: Towards open trust and safety in the new world of generative AI

https://ai.meta.com/blog/purple-llama-open-trust-safety-generative-ai/

In cybersecurity, a red team emulates an attacker's tools and techniques to test how effective the defenses are, while a blue team is the security team that defends systems against real attackers or red teams. Meta brought this idea to the risk assessment of generative AI and named the project "Purple," the combination of red and blue, to make it clear that attack and defense cooperate to reduce risk.

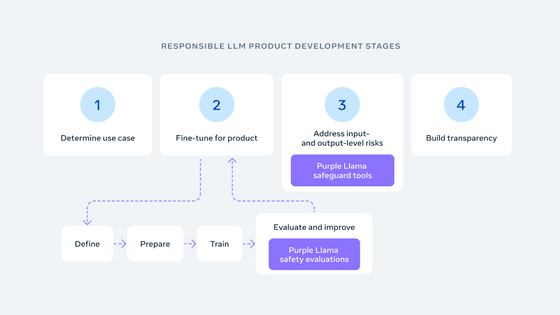

The purpose of Purple Llama is to help developers deploy generative AI models responsibly, following the best practices shared in Meta's Responsible Use Guide. "CyberSec Eval" and "Llama Guard" were released at the same time as the project was launched.

CyberSec Eval is a set of cybersecurity safety evaluation benchmarks for large language models. It includes "metrics for quantifying the cybersecurity risk of large language models," "a tool for evaluating the frequency of unsafe code suggestions," and "assessment tools to make it harder to generate malicious code and carry out cyberattacks," and is said to help reduce the risks outlined in

In addition, Llama Guard is a tool that detects potentially dangerous or policy-violating content, and it can support filtering that checks all inputs to and outputs from large language models.
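The input and output filtering described above can be pictured roughly as follows. This is only a minimal sketch, not Llama Guard's actual interface: `is_unsafe`, `BLOCKLIST`, `REFUSAL`, and `guarded_generate` are hypothetical names, and the keyword check is a placeholder standing in for a real safety classifier.

```python
from typing import Callable

# Hypothetical placeholder for a safety classifier such as Llama Guard.
# A real deployment would query the classifier model instead of matching keywords.
BLOCKLIST = ("build a bomb", "steal credentials")

REFUSAL = "[blocked by safety filter]"


def is_unsafe(text: str) -> bool:
    """Return True if the text contains any blocklisted phrase (case-insensitive)."""
    lowered = text.lower()
    return any(phrase in lowered for phrase in BLOCKLIST)


def guarded_generate(
    prompt: str,
    generate: Callable[[str], str],
    classify: Callable[[str], bool] = is_unsafe,
) -> str:
    """Run `generate` only if the prompt passes the check, then check its output."""
    # Check the input before it reaches the language model.
    if classify(prompt):
        return REFUSAL
    response = generate(prompt)
    # Check the output before it reaches the user.
    if classify(response):
        return REFUSAL
    return response
```

Here `generate` is whatever function calls the language model. Passing the classifier in as a parameter means the placeholder check could be swapped for a real model call without changing the wrapper.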

Purple Llama takes an open approach. Meta says it will work with AI Alliance, AMD, AWS, Google Cloud, Hugging Face, IBM, Intel, Lightning AI, Microsoft, MLCommons, NVIDIA, Scale AI, and many others to improve and continue developing the tools, and that the tools will be made available to the open source community.

Partners Together.AI and Anyscale will be demonstrating and providing technical details of the tool at the NeurIPS workshop from December 10th to 16th, 2023.
