Study shows that ChatGPT can fabricate datasets to support scientific hypotheses

A paper has been published showing that the natural language processing AI model ChatGPT can fabricate datasets that support unverified scientific hypotheses.

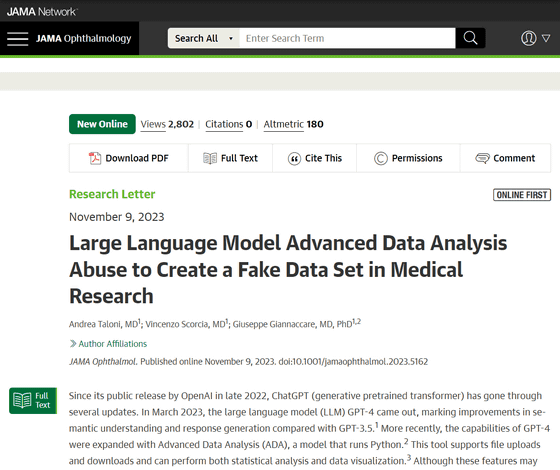

Large Language Model Advanced Data Analysis Abuse to Create a Fake Data Set in Medical Research | Ophthalmology | JAMA Ophthalmology | JAMA Network

https://jamanetwork.com/journals/jamaophthalmology/article-abstract/2811505

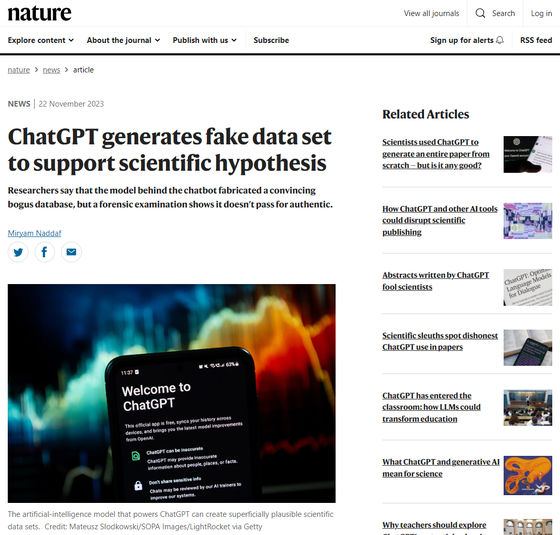

ChatGPT generates fake data set to support scientific hypothesis

https://www.nature.com/articles/d41586-023-03635-w

A paper published in the peer-reviewed journal JAMA Ophthalmology on November 9, 2023, describes an experiment that combined the large language model GPT-4 with "Advanced Data Analysis (ADA)", a feature that can read external data and output code in the programming language Python. The goal was to create a clinical trial dataset that supports an unverified scientific hypothesis.

The research team asked GPT-4 with ADA to create a dataset on patients with an eye disease called keratoconus. Keratoconus causes thinning of the cornea, which can lead to problems with focusing and decreased vision, and 15-20% of patients undergo a corneal transplant as treatment.

There are two methods of corneal transplantation. The first, called "penetrating keratoplasty (PKP)", involves surgically removing all the damaged layers of the cornea and replacing them with healthy tissue from a donor. The second, called deep anterior lamellar keratoplasty (DALK), replaces only the front layers of the cornea with healthy tissue and leaves the innermost layer intact.

To create "data that supports the conclusion that DALK produces better outcomes than PKP," the research team asked GPT-4 to output statistics from an imaging test that evaluates the shape of the cornea to detect irregularities, as well as data on how much the trial participants' vision changed before and after surgery.

The data output by GPT-4 showed that among 300 subjects (160 men and 140 women) who underwent corneal transplantation, those who received DALK scored better on both visual acuity and the imaging test than those who received PKP. However, this finding is contradicted by data from real clinical trials.

Responding to this result, Jack Wilkinson, a biostatistician at the University of Manchester in the UK, said, "Generative AI seems to be able to easily create datasets that are plausible, at least on the surface. The dataset it outputs looks real."

Wilkinson has previously examined datasets generated by earlier versions of the model and found that, under close scrutiny, none of them were convincing.

At the request of the journal Nature, Wilkinson and his colleague Zhewen Lu evaluated the dataset using a screening protocol designed to check the authenticity of data. This revealed that, for many "participants" in the AI-generated dataset, the recorded sex did not match the sex that would typically be expected from their name. It also found no correlation between the visual acuity measurements and the eye-imaging test results before and after transplant surgery.
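The two kinds of check described above can be illustrated with a minimal Python sketch. It assumes a dataset stored as a list of dictionaries; the name-to-sex lookup table, the field names, and the 0.1 correlation threshold are all hypothetical illustrations, not part of Wilkinson and Lu's actual protocol, whose details the article does not give.

```python
from math import sqrt

# Hypothetical lookup of the sex typically associated with a first name.
# A real screening protocol would need a far larger reference source.
TYPICAL_SEX_BY_NAME = {"john": "M", "michael": "M", "mary": "F", "susan": "F"}


def name_sex_mismatches(records):
    """Return records whose recorded sex differs from the sex typically
    expected from the first name. Names not in the lookup are skipped."""
    flagged = []
    for rec in records:
        expected = TYPICAL_SEX_BY_NAME.get(rec["first_name"].lower())
        if expected is not None and expected != rec["sex"]:
            flagged.append(rec)
    return flagged


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        raise ValueError("a sequence has zero variance")
    return cov / (sx * sy)


def weakly_correlated(xs, ys, threshold=0.1):
    """Flag two measures that should be related (e.g. visual acuity and an
    imaging score) but whose |r| falls below a hypothetical threshold."""
    return abs(pearson_r(xs, ys)) < threshold
```

In genuine clinical data, measures of the same eye tend to move together, so a near-zero correlation between them is a warning sign rather than proof of fabrication; any real threshold would have to be chosen from domain knowledge.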

Co-author Giuseppe Giannaccare, an ophthalmologist at the University of Cagliari in Italy, said: "Our aim was to highlight that, in a matter of minutes, AI can create a dataset that is not supported by real original data and that even points to the opposite conclusion from the available evidence."

As AI becomes able to fabricate persuasive data, concerns about research integrity are growing among researchers and journal editors. "It's one thing to be able to use generative AI to generate text that plagiarism software can't detect, but being able to create fake datasets is another level," said microbiologist Elisabeth Bik. "It makes it easy for any researcher or research group to create fake measurements on non-existent patients, fake answers to questionnaires, or large datasets from animal experiments," she said.

Bernd Pulverer, editor-in-chief of the scientific journal EMBO Reports, said, "In reality, peer review often stops short of a full reanalysis of the data, so it is unlikely to catch well-crafted integrity breaches made with AI." He argued that academic journals need to change how they vet data that may be AI-generated.

Wilkinson is leading a collaborative project to design statistical and non-statistical tools for assessing potentially problematic studies. "Just as AI may be part of the problem, there may be AI-based solutions to part of it. Some of these checks could be automated," he said. He also warned, however, that advances in generative AI could soon offer ways to get around such checks.
