AI-generated image detection system misjudges Israeli-Palestinian war photo as "fake"; experts point out that it was not generated by AI

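In the large-scale conflict that began on October 7, 2023, when Hamas, the Islamic fundamentalist organization that controls the Gaza Strip, attacked Israel, a photo released by the Israeli government was judged "fake" by an AI image detection tool. Experts, however, have pointed out that the photo was not generated by AI.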

Please note that this article contains graphic photographs and links to them. Use caution when viewing them.

AI Images Detectors Are Being Used to Discredit the Real Horrors of War

https://www.404media.co/ai-images-detectors-are-being-used-to-discredit-the-real-horrors-of-war/

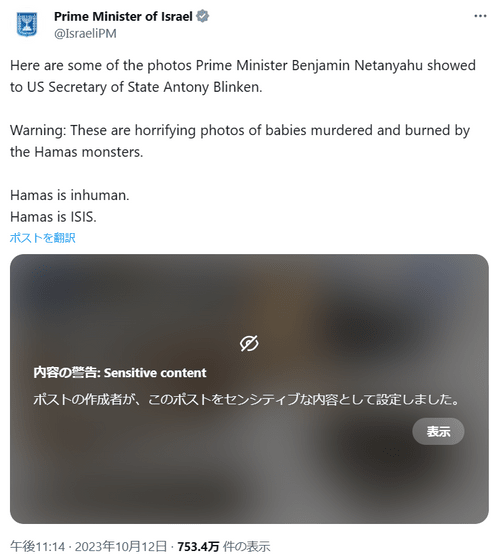

The photos in question were posted by the Israeli government on the social network X with the message: "These are horrifying photos of babies murdered and burned by the monsters of Hamas."

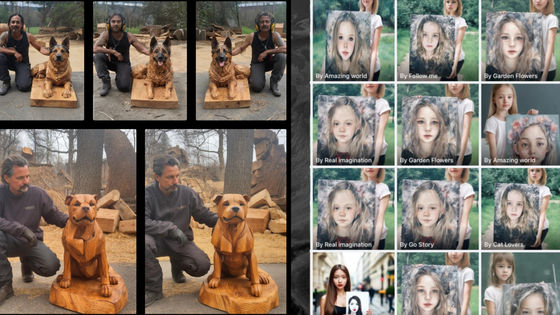

Speculation that this photo was fake began to spread after Ben Shapiro, a conservative Jewish American commentator, shared it on X.

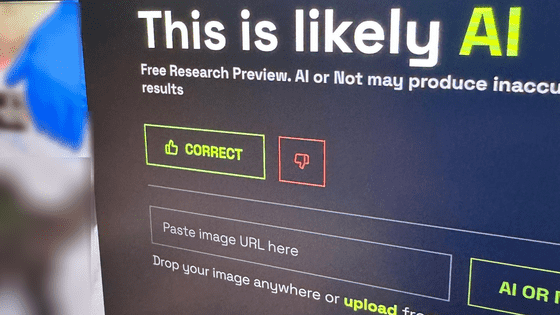

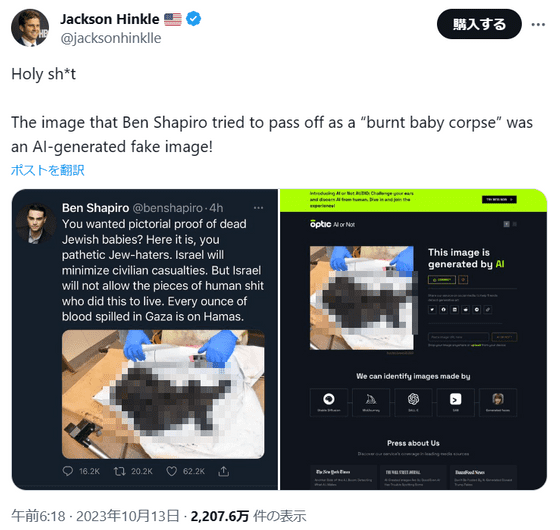

Jackson Hinkle, another X user with 500,000 followers, posted a screenshot showing the photo from Shapiro's post after it had been run through AI or Not, a free AI image detection tool. Hinkle accused Shapiro of spreading fabricated content, writing that the photo of the burned baby's corpse had been synthesized by AI.

The claim that "the photo of a baby's burned corpse is fake" spread quickly on social media and was used as "proof that the Israeli government's official account is spreading AI-generated disinformation."

However, Professor Hany Farid of the University of California, Berkeley, who specializes in analyzing digital images and detecting digitally manipulated images such as deepfakes, points out that there is no evidence that this image was generated by AI.

Farid analyzed whether the shadows in the photo are consistent with the positions of its light sources and said, "The structural consistency, the accurate shadows, and the lack of the artifacts that are common in AI-generated images" led him to conclude that the image was not generated by AI.
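
To give a rough idea of what a shadow-consistency check involves, here is a minimal Python sketch under simplifying assumptions; it is not Farid's actual procedure, which handles more cases than this. Under a single light source such as the sun, a line drawn in the image from a point on a cast shadow through the matching point on the object that casts it should pass through the projected position of the light, so all such lines should meet at one point. The sketch finds the least-squares intersection of those lines and reports how far they miss it; the function names and point coordinates are hypothetical.

```python
import math

def line_through(shadow, obj):
    """Return a normalized line (a, b, c), meaning a*x + b*y = c, through a shadow point and its object point."""
    (x1, y1), (x2, y2) = shadow, obj
    a, b = y2 - y1, x1 - x2  # normal vector, perpendicular to the shadow-to-object segment
    norm = math.hypot(a, b)
    if norm == 0:
        raise ValueError("shadow point and object point must differ")
    a, b = a / norm, b / norm
    return a, b, a * x1 + b * y1

def estimate_light(pairs):
    """Least-squares common point of all shadow->object lines, plus the RMS distance of the lines from it."""
    lines = [line_through(s, o) for s, o in pairs]
    # Normal equations for minimizing the sum of (a*x + b*y - c)^2 over all lines.
    saa = sum(a * a for a, b, c in lines)
    sab = sum(a * b for a, b, c in lines)
    sbb = sum(b * b for a, b, c in lines)
    sac = sum(a * c for a, b, c in lines)
    sbc = sum(b * c for a, b, c in lines)
    det = saa * sbb - sab * sab
    if abs(det) < 1e-12:
        raise ValueError("lines are (nearly) parallel; no finite intersection")
    x = (sac * sbb - sab * sbc) / det
    y = (saa * sbc - sab * sac) / det
    rms = math.sqrt(sum((a * x + b * y - c) ** 2 for a, b, c in lines) / len(lines))
    return (x, y), rms

# Hypothetical (shadow point, object point) pairs, all consistent with a light projected at (100, 50).
consistent = [((250, 200), (200, 150)), ((25, 275), (50, 200)), ((400, 125), (300, 100))]
# The same scene with one shadow moved so that it no longer points back to the light.
inconsistent = [((150, 250), (200, 150)), ((25, 275), (50, 200)), ((400, 125), (300, 100))]

print(estimate_light(consistent))    # intersection near (100, 50), RMS near 0
print(estimate_light(inconsistent))  # no common point, so the RMS distance is large
```

A near-zero residual only says that the shadows agree with one light source; as the article goes on to explain, it cannot establish when or where a photo was taken.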

However, the fact that the photo was not generated by AI does not by itself show that it is authentic. The date, time, and location of the photo cannot be determined from this single image, and whether the subject really is the burned body of a human baby cannot be confirmed without a coroner's examination.

On the image board 4chan, a composite of this image featuring a puppy is being circulated as the "original photo." Farid points out that passing off fake images as real is one way to cast doubt on photos that document facts.

Regarding the many AI image detection tools available online, Farid said, "Most of these automated tools are built by collecting a large number of real images and AI-generated images and then training another AI system to tell them apart. But they are black boxes with no transparency. They may be up to 90% accurate, but they may fail on image types or resolutions outside the range they were trained on. Errors are more likely with low-quality images or material the system was not trained on."
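
The training approach Farid describes, and the way it breaks down, can be illustrated with a deliberately tiny toy: a nearest-centroid classifier over a single hypothetical numeric "detail score" per image. The values below are made up and do not come from AI or Not or any other real tool, and real detectors use deep networks over raw pixels. The point is only that a classifier learns whatever separates its training sets, so an input that falls outside that range, here a real photo whose score is assumed to drop after heavy compression, can be assigned the wrong label.

```python
from statistics import mean

def train_nearest_centroid(real_scores, ai_scores):
    """Learn one centroid (average feature value) per class from labeled training examples."""
    return {"real": mean(real_scores), "ai": mean(ai_scores)}

def classify(centroids, score):
    """Assign the label whose centroid is closest to the input's feature value."""
    return min(centroids, key=lambda label: abs(centroids[label] - score))

# Hypothetical training data: one made-up "detail score" per image.
real_train = [0.8, 0.9, 1.0, 1.1]
ai_train = [0.2, 0.3, 0.4, 0.5]
centroids = train_nearest_centroid(real_train, ai_train)

print(classify(centroids, 0.95))  # → real  (a real photo that resembles the training set)
print(classify(centroids, 0.30))  # → ai    (a real photo assumed to have lost detail to heavy compression)
```

The classifier is not "wrong" by its own logic in the second case; it simply has no notion of images unlike those it was trained on, which is the gap Farid warns about.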


in Software, Posted by log1l_ks