Stability AI releases 'Japanese InstructBLIP Alpha', an AI model that recognizes images and answers in Japanese

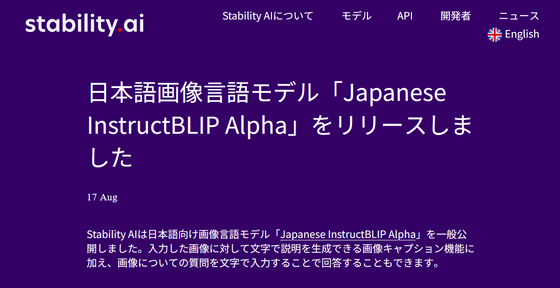

Stability AI, which develops the image generation AI 'Stable Diffusion', has announced the public release of 'Japanese InstructBLIP Alpha', an image language model for Japanese. It offers an image captioning function that generates a Japanese description of an input image, and a question answering function that responds in Japanese when you ask a question about the image in Japanese.

Japanese Image Language Model “Japanese InstructBLIP Alpha” Released — Stability AI Japan

stabilityai/japanese-instructblip-alpha Hugging Face

https://huggingface.co/stabilityai/japanese-instructblip-alpha

'Japanese InstructBLIP Alpha' builds on the Japanese language model 'Japanese StableLM Alpha', which was released in August 2023.

Stability AI releases Japanese language model 'Japanese StableLM Alpha' - GIGAZINE

As the name suggests, Japanese InstructBLIP Alpha uses the image language model architecture InstructBLIP, and consists of an image encoder, a query transformer (Q-Former), and Japanese StableLM Alpha 7B. To build a high-performance model from a small Japanese dataset, part of the model is initialized from InstructBLIP, which was pre-trained on a large English dataset, and then tuned on the Japanese datasets.
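The flow through these three components can be illustrated with a toy sketch. This is not Stability AI's implementation: the function names, the dimensions, and the random weights are all hypothetical, and the real query transformer is a multi-layer network rather than a single attention step. The sketch only shows how a fixed number of query vectors summarizes the image features into a short sequence that is placed in front of the text for the language model.

```python
import math
import random


def linear(rows, weights):
    """Multiply row vectors (n x d_in) by a weight matrix (d_in x d_out)."""
    return [[sum(x * w for x, w in zip(row, col)) for col in zip(*weights)]
            for row in rows]


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attend(queries, features):
    """Each query takes a softmax-weighted average of the image features."""
    dim = len(features[0])
    scale = math.sqrt(dim)
    outputs = []
    for q in queries:
        scores = [sum(a * b for a, b in zip(q, f)) / scale for f in features]
        weights = softmax(scores)
        outputs.append([sum(w * f[j] for w, f in zip(weights, features))
                        for j in range(dim)])
    return outputs


def build_llm_inputs(patches, text_embeds, queries, w_encoder, w_proj):
    """Hypothetical pipeline: encoder -> query step -> projection -> concat."""
    features = linear(patches, w_encoder)            # 1. image encoder
    visual_tokens = cross_attend(queries, features)  # 2. query transformer (simplified)
    visual_embeds = linear(visual_tokens, w_proj)    # 3. map into the LLM embedding size
    return visual_embeds + text_embeds               # visual tokens come before the text


def random_matrix(rng, n_rows, n_cols):
    return [[rng.uniform(-1.0, 1.0) for _ in range(n_cols)] for _ in range(n_rows)]


# Toy sizes, chosen only for illustration.
rng = random.Random(0)
patches = random_matrix(rng, 16, 6)      # 16 image patches, 6 values each
w_encoder = random_matrix(rng, 6, 8)     # encoder output size 8
queries = random_matrix(rng, 4, 8)       # 4 query vectors
w_proj = random_matrix(rng, 8, 5)        # LLM embedding size 5
text_embeds = random_matrix(rng, 3, 5)   # 3 embedded prompt tokens

llm_inputs = build_llm_inputs(patches, text_embeds, queries, w_encoder, w_proj)
print(len(llm_inputs), len(llm_inputs[0]))  # prints "7 5"
```

Whatever the number of image patches, the language model always receives the same number of visual tokens (4 in this toy setup), followed by the prompt tokens.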

The training datasets used are as follows.

・Japanese version of the image dataset 'Conceptual 12M'

・Image dataset 'COCO' and its Japanese caption dataset 'STAIR Captions'

・Japanese image question answering dataset 'Japanese Visual Genome VQA dataset'

For example, when the following photo is loaded, the model recognizes the image and responds in Japanese with 'Sakura and Tokyo Skytree' in the Output field.

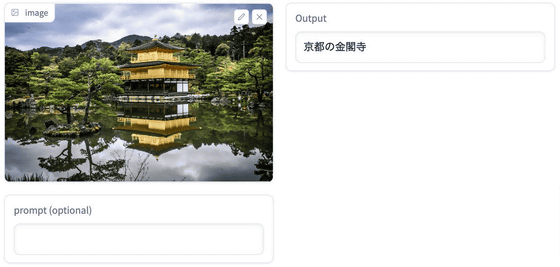

When given a picture of Kinkakuji, it returns the answer 'Kyoto's Kinkakuji'.

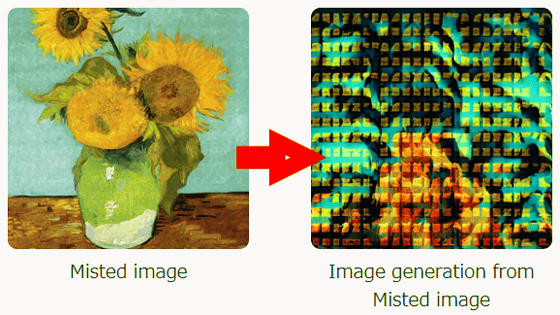

For the image below, it answered, 'Two people sitting on a bench looking at Mt. Fuji.' The point is that it does not simply list nouns but describes the relationship between the things in the photo.

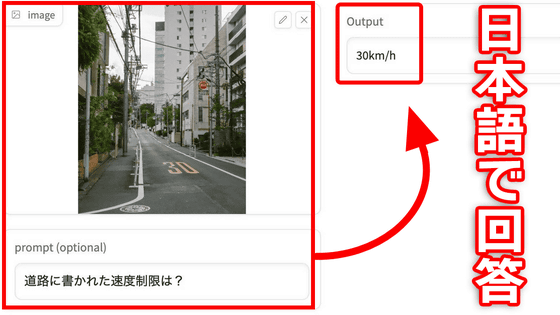

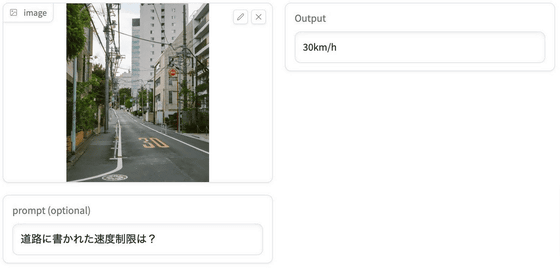

Next, after loading a photo of a city street, the prompt (text input) 'What is the speed limit written on the road?' gets the answer '30 km/h'.

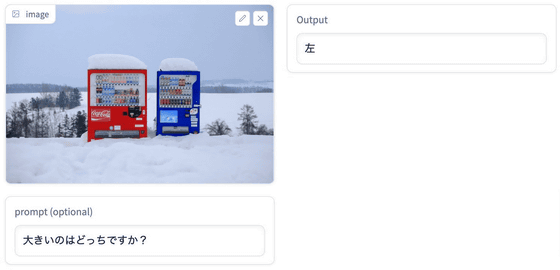

When asked about the relative size of the two vending machines lined up in the snow, it answered 'left'. This shows that it grasps the notion of left and right, which is not written anywhere in the image.

In response to the question 'What is the color of the yukata of the person on the far right?', it answered 'purple'. Judging from the image, the color looks closer to a light ink color than purple, so its accuracy in distinguishing colors does not seem to be very high.

Stability AI commented, 'Possible uses of this model (Japanese InstructBLIP Alpha) include image-based search engines, describing and answering questions about the scene in front of you, and text descriptions of images for visually impaired people.'

Japanese InstructBLIP Alpha is released on Hugging Face Hub under the 'JAPANESE STABLELM RESEARCH LICENSE AGREEMENT', in a format compliant with Hugging Face Transformers.


in Software, Posted by log1i_yk