An easy-to-understand explanation of the differences between 'CPU', 'GPU', 'NPU', and 'TPU'

Machine learning, which is essential for AI development, runs on processing chips such as GPUs, NPUs, and TPUs, but the differences between them are hard to grasp. Google and Backblaze, a developer of cloud storage services, have each summarized the differences between CPUs, GPUs, NPUs, and TPUs.

AI 101: GPU vs. TPU vs. NPU

https://www.backblaze.com/blog/ai-101-gpu-vs-tpu-vs-npu/

Cloud TPU overview | Google Cloud

https://cloud.google.com/tpu/docs/intro-to-tpu?hl=ja

◆What is a CPU?

CPU stands for 'Central Processing Unit'. CPUs are used for a wide variety of tasks, such as creating documents on a PC, calculating the trajectory of a rocket, and processing bank transactions. Machine learning can be run on a CPU, but a CPU accesses memory every time it performs a calculation, so the speed of memory access becomes the bottleneck when executing the huge number of calculations that machine learning requires, and processing slows down.

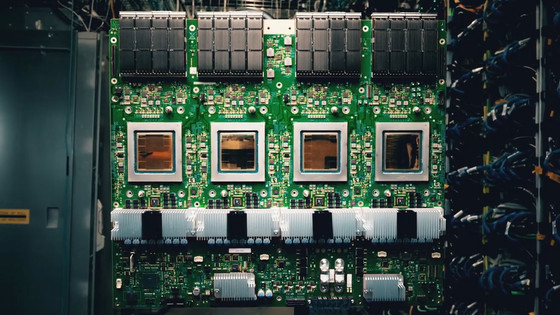

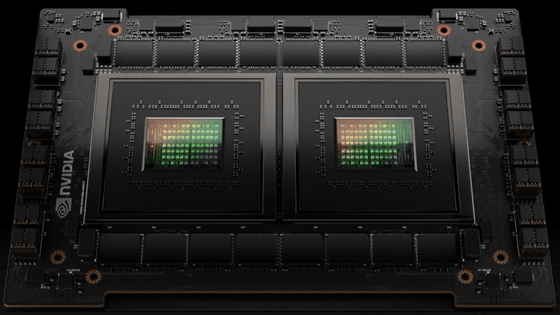

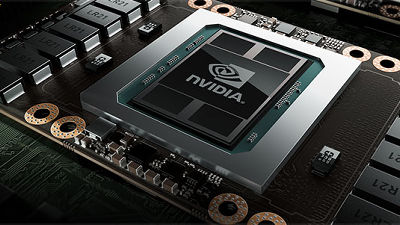
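
The memory-access bottleneck described above can be illustrated with a minimal Python sketch. It assumes a deliberately simplified model, not taken from either source article, in which every multiply-add reads a weight, an input, and the running sum from memory and then writes the running sum back. The function name `matvec_with_access_count` and the counting scheme are hypothetical.

```python
def matvec_with_access_count(matrix, vector):
    """Multiply a matrix by a vector while counting simulated memory accesses.

    Simplified model (an assumption for illustration only): each multiply-add
    reads one weight, one input, and the running sum, then writes the sum back.
    """
    reads = 0
    writes = 0
    result = []
    for row in matrix:
        total = 0.0
        for weight, x in zip(row, vector):
            reads += 3       # weight, input, running sum
            total += weight * x
            writes += 1      # running sum written back
        result.append(total)
    return result, reads, writes


result, reads, writes = matvec_with_access_count([[1, 2], [3, 4]], [1, 1])
print(result, reads, writes)  # [3.0, 7.0] 12 4
```

In this model a 2x2 matrix needs 12 reads and 4 writes for only 4 multiply-adds, and the number of memory accesses grows in step with the number of calculations.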

◆What is a GPU?

GPU stands for 'Graphics Processing Unit'. Graphics boards that combine a GPU with memory, input/output devices, and other components are widely available on the market. A GPU contains several thousand arithmetic logic units (ALUs) and is good at processing large numbers of calculations in parallel, which makes machine learning overwhelmingly faster than on a CPU. However, because GPUs are general-purpose chips that are also used for games and CG processing, they are less efficient than chips designed specifically for machine learning.

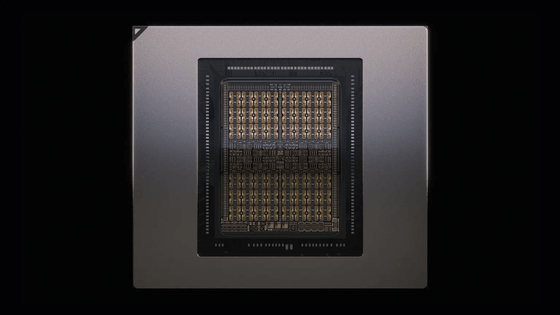
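
As a rough illustration of why thousands of ALUs help, the sketch below counts how many sequential steps an idealized chip needs when independent calculations are spread evenly across its ALUs. The function name `steps_needed` and the figure of 4,096 ALUs are assumptions chosen for the example. Real GPUs are also limited by memory bandwidth and scheduling, which this model ignores.

```python
import math


def steps_needed(num_calculations: int, num_alus: int) -> int:
    """Sequential steps when each ALU completes one calculation per step."""
    if num_alus < 1:
        raise ValueError("num_alus must be at least 1")
    return math.ceil(num_calculations / num_alus)


calculations = 1_000_000
print(steps_needed(calculations, 1))     # 1000000 steps on a single ALU
print(steps_needed(calculations, 4096))  # 245 steps on 4,096 ALUs
```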

◆What is an NPU?

NPU stands for 'Neural Processing Unit' or 'Neural network Processing Unit'. Like a GPU, an NPU specializes in processing large numbers of calculations in parallel. Because NPUs are designed specifically for machine learning, they can handle it more efficiently than GPUs. The trade-off is that they cannot be used for a wide variety of purposes the way CPUs and GPUs can.

Many smartphones released in recent years are equipped with an NPU. The iPhone series includes one under the name 'Neural Engine', and some models of the smartphone SoC 'Snapdragon' are also equipped with an NPU.

Qualcomm announces the latest SoC for smartphones 'Snapdragon 8+ Gen 1', manufacturer changed from Samsung to TSMC - GIGAZINE

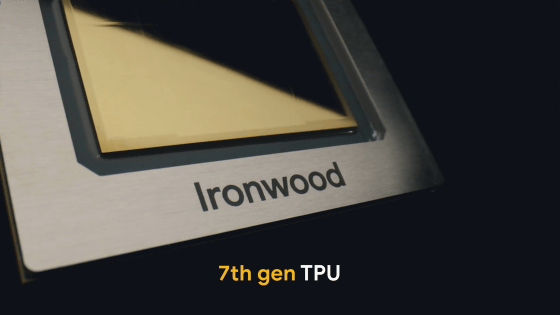

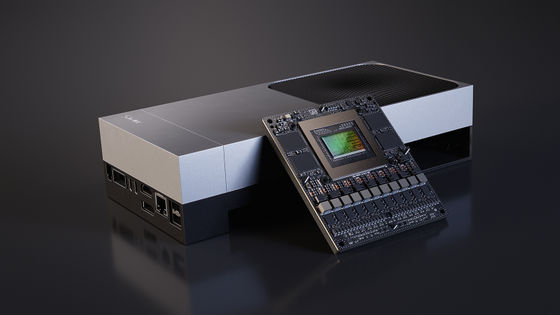

◆What is a TPU?

TPU stands for 'Tensor Processing Unit' and is a type of NPU developed by Google. Google offers TPU processing power through its cloud computing service 'Google Cloud', so users can run machine learning workloads with high efficiency without having to prepare their own hardware.

In addition, Google claims that the 'TPU v4' it provides is 1.2 to 1.7 times faster and 1.3 to 1.9 times more energy efficient than NVIDIA's 'A100', a GPU specialized for machine learning workloads.

Google researchers claim that Google's AI processor 'TPU v4' is faster and more efficient than NVIDIA's 'A100' - GIGAZINE
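
To put the claimed speed ratios in concrete terms, the following sketch converts a speedup range into a runtime range. The 10-hour A100 baseline is a made-up number for illustration, `runtime_range` is a hypothetical helper, and the calculation assumes that 'N times faster' means the same job finishes in 1/N of the time.

```python
def runtime_range(baseline_hours: float, min_speedup: float, max_speedup: float):
    """Return (best, worst) runtime in hours for a given speedup range."""
    best = baseline_hours / max_speedup   # largest speedup, shortest runtime
    worst = baseline_hours / min_speedup  # smallest speedup, longest runtime
    return best, worst


# Hypothetical job that takes 10 hours on an A100, with the claimed 1.2x-1.7x range.
best, worst = runtime_range(10.0, 1.2, 1.7)
print(f"{best:.2f} to {worst:.2f} hours")  # 5.88 to 8.33 hours
```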

in Free Member, Hardware, Posted by log1o_hf