A method that can convert movies into high-quality 3D data has appeared

Neural Radiance Fields (NeRF), a technology that turns images and videos into 3D scenes, has had difficulty handling long videos shot while the camera is moving. Researchers at National Taiwan University, Meta, and the University of Maryland, College Park have devised a method that renders high-quality 3D data even from longer footage.

[2303.13791] Progressively Optimized Local Radiance Fields for Robust View Synthesis

Progressively Optimized Local Radiance Fields for Robust View Synthesis

https://localrf.github.io/

Immersive 3D Rendering from Casual Videos?-YouTube

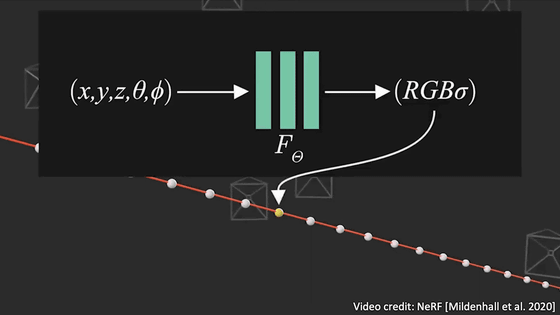

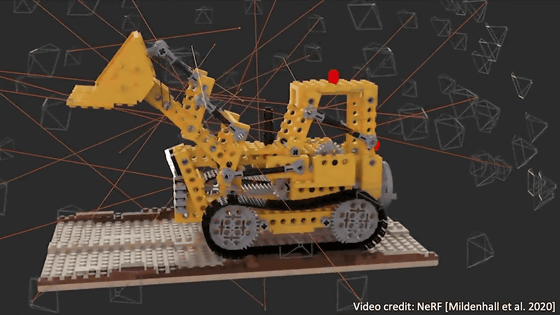

Neural Radiance Fields (NeRF), a technology that converts images and videos into 3D scenes, optimizes a volumetric representation that maps a 3D position and viewing direction to density and color.

Pixels are then rendered with volume rendering. Because this rendering process is differentiable, the scene representation can be optimized by minimizing the reconstruction error over all rendered rays.
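To make the rendering step concrete, here is a minimal sketch of how samples along a single ray can be composited into a pixel, using the standard NeRF quadrature. The function names `render_ray` and `reconstruction_error` are illustrative and do not come from the paper's code; in a real system the densities and colors would be produced by the network and the error would be minimized with gradient descent.

```python
import math


def render_ray(densities, colors, deltas):
    """Composite samples along one ray, front to back.

    densities: density (sigma) at each sample
    colors:    RGB triple at each sample
    deltas:    distance between consecutive samples
    """
    transmittance = 1.0  # fraction of light that has not been absorbed yet
    pixel = [0.0, 0.0, 0.0]
    for sigma, color, delta in zip(densities, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this segment
        weight = transmittance * alpha          # contribution to the pixel
        pixel = [p + weight * c for p, c in zip(pixel, color)]
        transmittance *= 1.0 - alpha
    return pixel, transmittance


def reconstruction_error(rendered, target):
    """Mean squared error between a rendered pixel and the observed pixel."""
    return sum((r - t) ** 2 for r, t in zip(rendered, target)) / len(target)
```

For example, a ray that passes through empty space and then hits a dense red sample renders as an almost pure red pixel, so its error against a red target pixel is close to zero.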

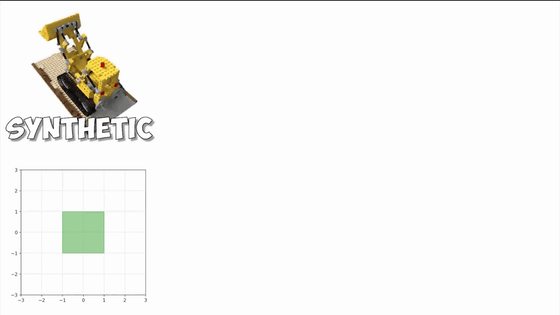

This method works very well because compound objects can be easily represented in

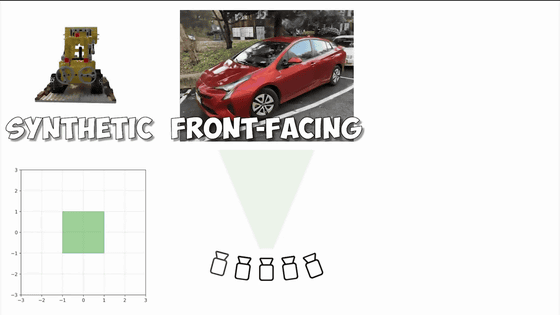

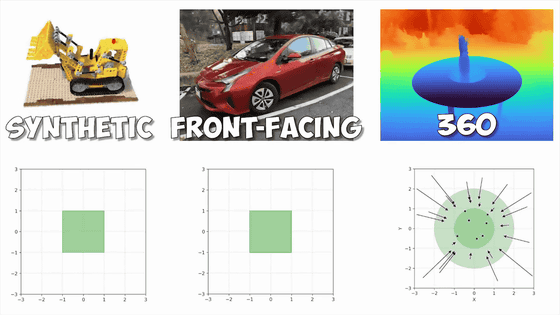

A real world scene is a bit more difficult as it includes objects both near and far from the camera. If all cameras are pointing in the same direction,

For 360-degree captures with the camera facing inward, 'Mip-NeRF 360' provides a contraction operation that smoothly maps the unbounded space into a bounded region.
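The space-warping operation used by Mip-NeRF 360, usually called a scene contraction, can be sketched as follows. Points inside the unit ball are left unchanged, and everything farther away is squeezed into the shell between radius 1 and radius 2. The function name `contract` is illustrative.

```python
import math


def contract(point):
    """Mip-NeRF 360 style contraction of a 3D point.

    Points with norm <= 1 are returned unchanged; distant points are
    mapped to norm (2 - 1/norm), so all of space fits inside a ball
    of radius 2.
    """
    norm = math.sqrt(sum(x * x for x in point))
    if norm <= 1.0:
        return list(point)
    scale = (2.0 - 1.0 / norm) / norm
    return [scale * x for x in point]
```

Because distant content is compressed much more strongly than nearby content, the representation spends most of its resolution near the origin, which fits the observation that quality is best near the center of the scene.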

However, large-scale scenes captured along long trajectories are difficult to model. The contraction technique can still be applied.

The rendering looks good at first, but the quality degrades away from the center.

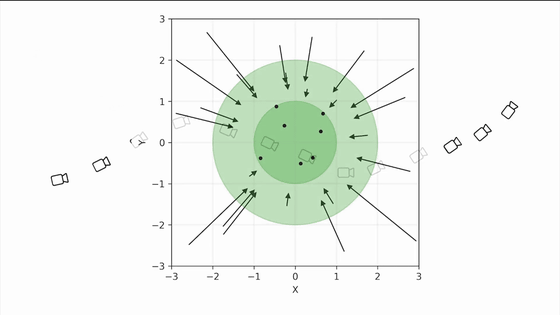

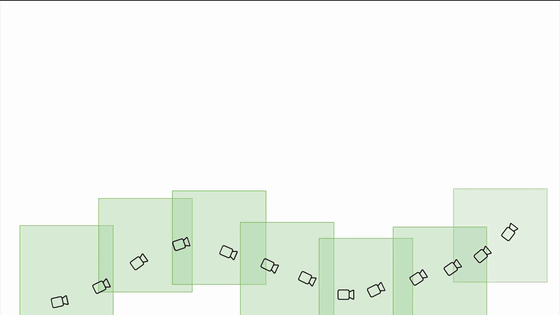

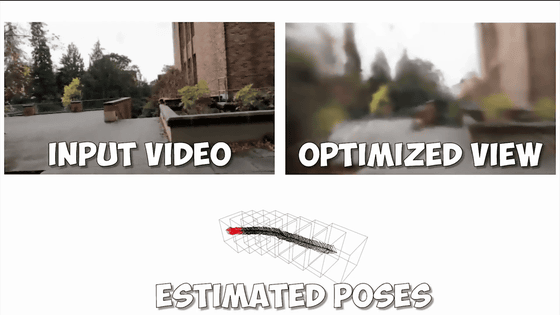

Therefore, the research team devised a method to create multiple local radiance fields along the trajectory.

This makes it possible to process very long sequences while maintaining high-quality reconstruction and free-viewpoint synthesis results.
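The idea of placing several local radiance fields along the trajectory can be illustrated with a small sketch. It assumes a simple rule that is a simplification, not the paper's actual criterion: a new field is started whenever the camera moves farther than `radius` from the center of the current field. The function name `allocate_local_fields`, the `radius` parameter, and the dictionary layout are all hypothetical.

```python
import math


def allocate_local_fields(camera_positions, radius=1.0):
    """Assign each frame to a local field along the camera trajectory.

    Assumption for illustration: a new field is created, centered at the
    current camera position, once the camera is more than `radius` away
    from the center of the most recent field.
    """
    fields = []
    for index, position in enumerate(camera_positions):
        if not fields or math.dist(position, fields[-1]["center"]) > radius:
            fields.append({"center": position, "frames": []})
        fields[-1]["frames"].append(index)
    return fields
```

Each field only has to represent the part of the scene near its own center, so the length of the video no longer limits the quality of any single field. This sketch assigns every frame to exactly one field and does not model the overlap between neighboring fields.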

The remaining issue is camera pose.

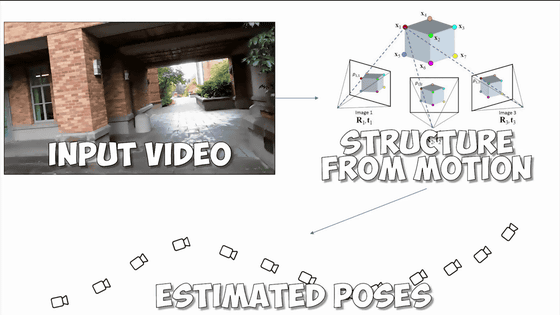

Most NeRFs assume that camera poses can be reliably estimated with Structure from Motion (SfM) algorithms.

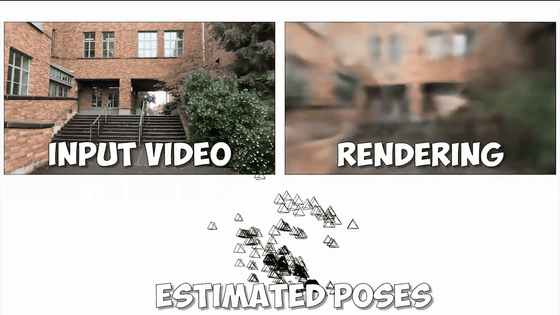

However, SfM algorithms are often not robust, and there is no remedy if pose estimation fails.

Therefore, a method was devised to estimate the camera poses jointly with the radiance field.

This works fine for short sequences, but not for long sequences.

Therefore, the research team decided to estimate the camera poses progressively within the local radiance field framework. Estimating poses locally and incrementally makes the method more robust. At render time, seamless transitions are achieved by blending the results of adjacent radiance fields.
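Blending between two adjacent radiance fields can be sketched as a cross-fade over the frames they share. The sketch below assumes a linear ramp across the overlap, which is an illustrative choice; the names `blend_weight` and `blend_pixels` and the overlap parameters are hypothetical.

```python
def blend_weight(frame, overlap_start, overlap_end):
    """Weight of the next field, ramping linearly from 0 to 1 over the overlap."""
    if overlap_end <= overlap_start:
        raise ValueError("overlap_end must be greater than overlap_start")
    weight = (frame - overlap_start) / (overlap_end - overlap_start)
    return min(1.0, max(0.0, weight))


def blend_pixels(color_prev, color_next, frame, overlap_start, overlap_end):
    """Mix the pixel colors rendered by two adjacent fields for one frame."""
    w = blend_weight(frame, overlap_start, overlap_end)
    return [(1.0 - w) * a + w * b for a, b in zip(color_prev, color_next)]
```

Before the overlap only the earlier field contributes, after it only the later one does, and in between the output changes gradually, so there is no visible jump when the viewpoint crosses from one field into the next.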

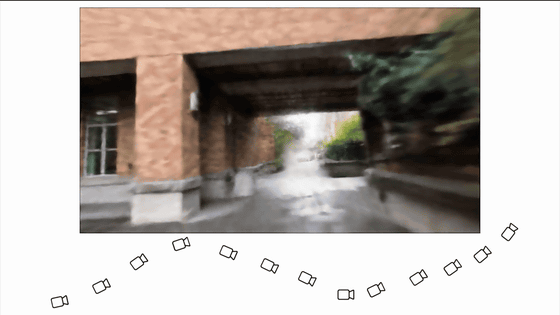

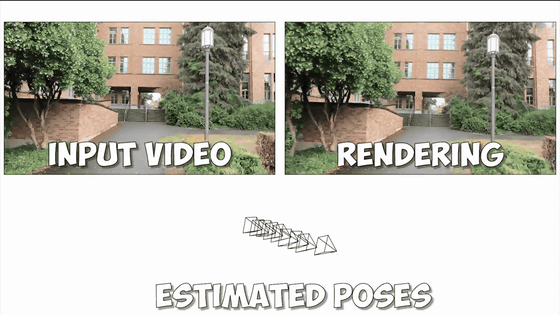

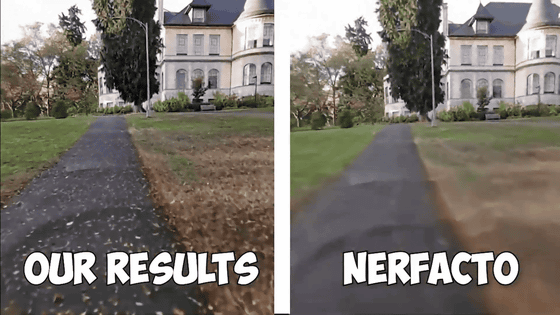

In the second half of the video, the method is compared with other NeRFs, and you can see that the research team's method renders smoothly even on long sequences.

in Video, Posted by logc_nt