An amazing movie uses Multi ControlNet to control the image generation AI "Stable Diffusion" and "faithfully animate live-action footage"

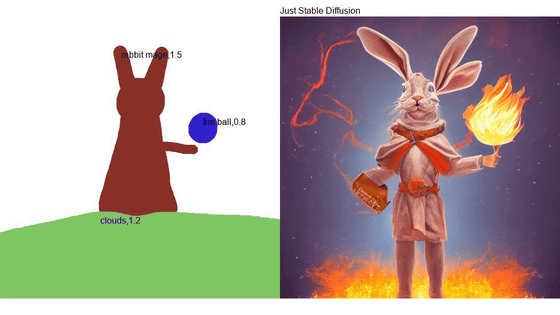

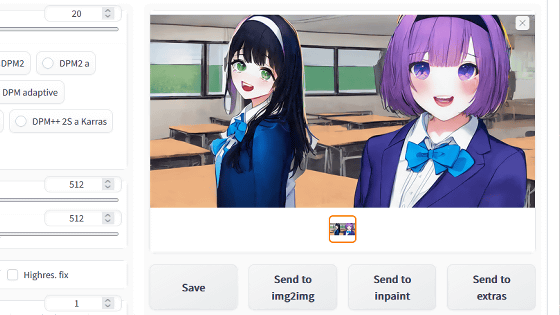

'ControlNet' is a technology that guides the output of pre-trained models such as Stable Diffusion by adding conditioning information like contour lines, depth, and image region division (segmentation). With ControlNet, the line drawings and the poses of people in a separately loaded image can be strongly reflected in the output. A movie that faithfully animates live-action footage using 'Multi ControlNet', which combines multiple ControlNets, has been posted on the online bulletin board Reddit.

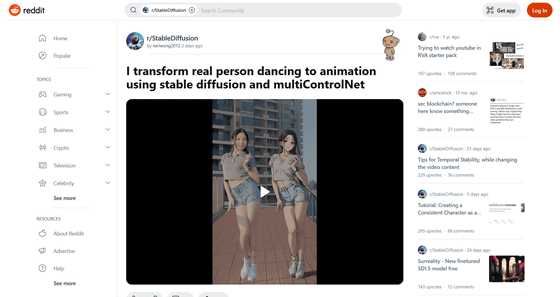

I transform real person dancing to animation using stable diffusion and multiControlNet : r/StableDiffusion

https://www.reddit.com/r/StableDiffusion/comments/12i9qr7/i_transform_real_person_dancing_to_animation/

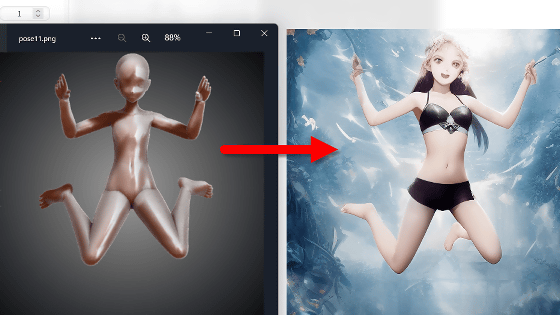

Using Stable Diffusion and ControlNet together makes it possible to specify poses and compositions with high accuracy. The following article, in which we actually tried ControlNet, shows how accurately the pose and composition are reflected in the output image.

I tried a combination technique of "ControlNet" & "Stable Diffusion" that lets you specify poses and compositions and quickly generate your favorite illustrations Review-GIGAZINE

The movie published on Reddit can be viewed below. The upper left shows the live-action dance video that was fed into ControlNet, and the animation dances with the same composition and choreography.

A movie that automatically animates live-action images with image generation AI "Stable Diffusion" and Multi ControlNet-YouTube

According to neilwong2012, the user who published this movie, it was generated using four ControlNets with carefully adjusted parameters. The animation is very smooth, and neilwong2012 says that keeping the background fixed was a major factor in its success. The model data 'animeLike25D' used for the animation reportedly stands out for converting a real person into attractive animation even at a low denoising strength. However, according to neilwong2012, animeLike25D is not well suited to animating footage with vigorous body movements.

neilwong2012 has also released other videos animated with Multi ControlNet on YouTube. For example, below is an animated version of the music video for Rick Astley's 'Never Gonna Give You Up'.

《Never Gonna Give You Up》AI remake-YouTube

Below, the scene in which Elsa sings 'Let It Go' in the 3DCG animated film 'Frozen' has been converted into 2D animation.

'Let it go' AI remake-YouTube

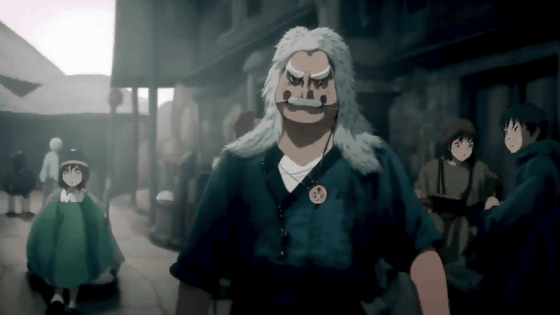

The following movie animates a scene from the drama 'Game of Thrones'. Even shots with many people on screen at once and shots of dragons flying through the sky have been converted into animation convincingly.

'Game of Thrones' AI animation-YouTube

Below is an animated version of a dance cover video of the VOCALOID song 'Positive ☆ Dance Time'. The on-screen text effects in the original video come out of Stable Diffusion as strange, glyph-like effects. There were also moments when the movement of the characters' clothing looked unnatural, probably because of the intense dance moves.

[AI animation] Positive ☆ Dance Time (3d to 2d)-YouTube
