Microsoft Research develops "BioGPT," an AI specialized in the biomedical field that can answer questions with accuracy comparable to human experts

Modern science is built on the vast body of scientific knowledge accumulated so far, so extracting the important information from the enormous volume of previous research is extremely important. A team at Microsoft Research has therefore developed "BioGPT," a language model specialized in the biomedical field.

[2210.10341] BioGPT: Generative Pre-trained Transformer for Biomedical Text Generation and Mining

https://arxiv.org/abs/2210.10341

GitHub - microsoft/BioGPT

https://github.com/microsoft/BioGPT

BioGPT, a domain-specific generative model pre-trained on large-scale biomedical literature, has achieved human parity, outperformed other general and scientific LLMs, and could empower biologists in various scenarios of scientific discovery. Learn more: https://t.co/rWokasnQu1

—Microsoft Research (@MSFTResearch) January 26, 2023

BioGPT is a Microsoft language model trained for biomedical tasks

https://the-decoder.com/biogpt-is-a-microsoft-language-model-trained-for-biomedical-tasks/

Microsoft Research Proposes BioGPT: A Domain-Specific Generative Transformer Language Model Pre-Trained on Large-Scale Biomedical Literature - MarkTechPost

https://www.marktechpost.com/2023/01/31/microsoft-research-proposes-biogpt-a-domain-specific-generative-transformer-language-model-pre-trained-on-large-scale-biomedical-literature/

Researchers have long expected that pre-trained language models could help extract relevant knowledge efficiently from the vast medical literature. However, general-purpose language models applied directly to the biomedical field were not accurate enough, so researchers devised the approach of training language models on medical literature. This produced language models pre-trained specifically for the biomedical domain, such as "BioBERT" and "PubMedBERT."

BioBERT and PubMedBERT are based on BERT, a natural language processing model developed by Google that excels at understanding context. By contrast, the biomedical performance of OpenAI's GPT models, which excel at generative tasks, had not been thoroughly examined. The Microsoft Research team therefore decided to build a language model based on "GPT-2," released in 2019, and train it on a wide range of biomedical literature.

To develop this biomedical language model, the research team collected English-language papers published before 2021 from the PubMed biomedical literature database and used their text to pre-train the model.

The resulting biomedical AI is "BioGPT," and the research team claims that it outperforms earlier BERT-based models at biomedical question answering and end-to-end relation extraction. Below is an example of text that BioGPT generated when the research team gave it a prompt.

Input:

The treatment of COVID-19

BioGPT output:

The treatment of COVID-19 with remdesivir (remdesivir for COVID-19) has been approved by the US Food and Drug Administration (FDA) for the treatment of severe acute respiratory syndrome coronavirus 2 (SARS-CoV-2) infection in patients with confirmed SARS-CoV-2 infection and is currently being evaluated in clinical trials for the treatment of COVID-19 in patients with confirmed SARS-CoV-2 infection, as well as in patients with suspected or confirmed SARS-CoV-2 infection.

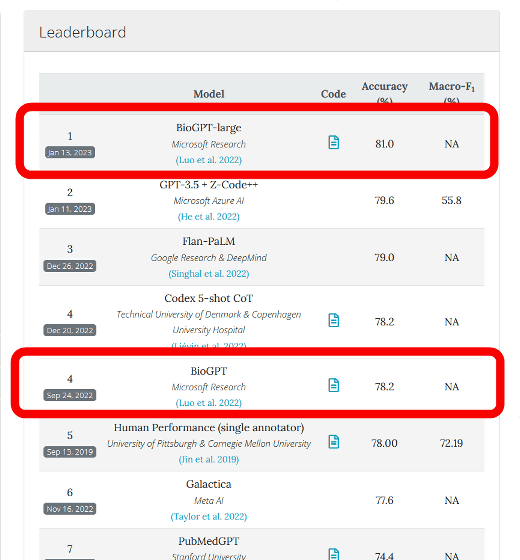

BioGPT also achieved 78.2% accuracy on PubMedQA, a question-answering benchmark built from PubMed abstracts, exceeding the 78% accuracy of human experts. "BioGPT-Large," which scales BioGPT up to the largest available GPT-2 architecture, reached 81% accuracy, the top score on the benchmark's leaderboard.

BioGPT-Large has 1.5 billion parameters, far fewer than "Flan-PaLM" with 540 billion parameters and "Galactica" with 120 billion. The AI news site The Decoder said the results show that relatively small, domain-specific language models can compete well with much larger general-purpose language models.
