What improvements did the much-talked-about 'NovelAI' make to the original Stable Diffusion so that it can generate ultra-high-precision illustrations?

NovelAI's development team has explained on its blog the AI model behind the image generation service 'NovelAI', which can generate illustrations with much higher accuracy than Stable Diffusion.

NovelAI Improvements on Stable Diffusion | by NovelAI | Oct, 2022 | Medium

NovelAI is a SaaS-model paid subscription service whose beta version was released on June 15, 2021, and is operated by Anlatan in the United States. Originally, as the name suggests, it was an AI that automatically generated novels, but on October 3, 2022, an image generation function was implemented.

NovelAI's Image Generation, #NovelAIDiffusion is live on https://t.co/UTsnpZKa6W now!

— NovelAI (@novelaiofficial) October 3, 2022

NovelAI Diffusion Anime image generation is uniquely tailored to give you a creative tool to visualize your visions without limitations, allowing you to paint the stories of your imagination. pic.twitter.com/WZEpQ5idgI

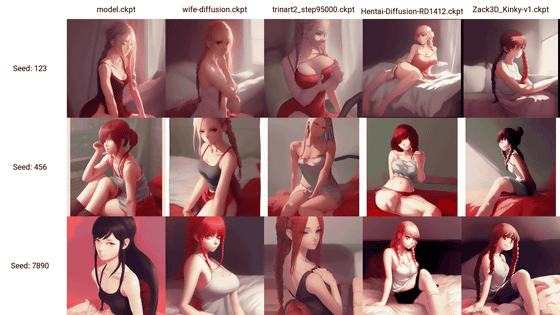

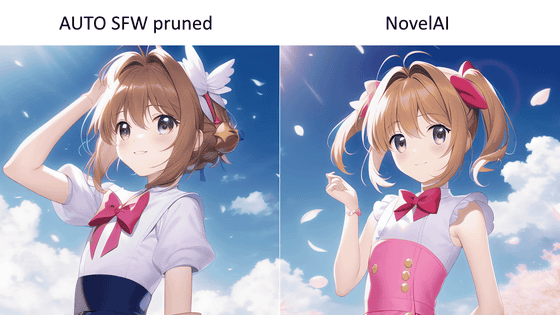

The model NovelAI uses for image generation is said to be the same kind of latent diffusion model as the open-source Stable Diffusion, which was released to the public in August 2022. However, NovelAI can generate 2D illustrations with much higher accuracy than the original Stable Diffusion, and NovelAI has made it clear that the dataset used for training includes many images from the overseas 2D image site 'Danbooru'.

Since we are training on Danbooru, it also learns character names and their visuals. You can prompt for 'masterpiece portrait of smiling rem, re zero, caustics, textile shading, high resolution illustration' and get this: pic.twitter.com/2wqDmAxCJa

— NovelAI (@novelaiofficial) September 25, 2022

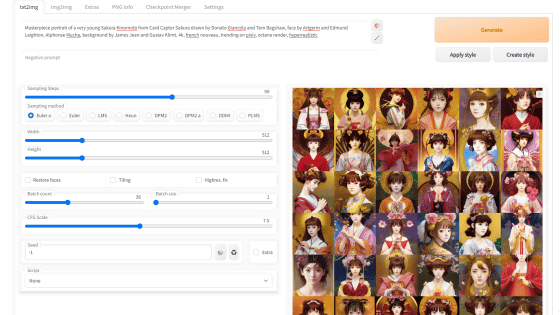

Most of the illustrations on Danbooru are reprinted without permission from social networks such as Pixiv and Twitter, but a major feature of the site is its tag system. A dataset is not just a collection of images: each image must be tagged so that the model can learn what it shows. Because Danbooru's tags can be used directly as the tags of the dataset, a huge dataset can be prepared at relatively low cost. For this reason, image generation models that specialize in illustrations, such as Waifu-Diffusion, are trained on datasets based on Danbooru.

NovelAI is trained on the same kind of Danbooru-derived dataset as Waifu-Diffusion, yet it can generate high-precision illustrations at a level clearly distinct from both Stable Diffusion and Waifu-Diffusion.

img2img was interesting and this spell was just right though

— Ajiko Toa @Vtuber (@Ajiko_channel_v) October 5, 2022

I feel like I pulled an SSR

I really like it with the thumbnail #NovelAIDiffusion #novelAI pic.twitter.com/yCxoQgzmgK

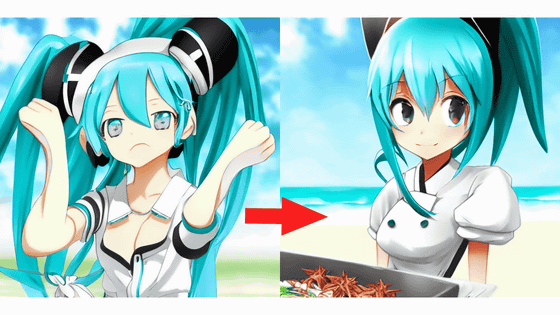

Hatsune Miku drawn by AI Too dangerous #NovelAI #NovelAIDiffusion pic.twitter.com/YHhf84QRoo

— Zukimikan (@39anartwork) October 4, 2022

However, unlike the open-source Stable Diffusion and Waifu-Diffusion, NovelAI is a SaaS subscription service whose model is not open to the public, so it was unclear how it actually works.

And on October 6, 2022, NovelAI's official Twitter account announced that Anlatan's GitHub repository was hacked and NovelAI's source code was leaked.

[Announcement: Proprietary Software & Source Code Leaks]

— NovelAI (@novelaiofficial) October 7, 2022

Greetings, Novel AI Community.

On 10/6/2022, we experienced an unauthorized breach in the company's GitHub and secondary repositories.

The leak contained proprietary software and source code for the services we provide.

After that, on October 11, NovelAI's development team revealed that NovelAI "has changed the model architecture and training process of Stable Diffusion".

Stable Diffusion uses a model called 'CLIP' to connect text and images. NovelAI says it has improved this CLIP stage to make more effective use of tag-based prompts and to make generated images follow the input prompt more accurately.

Also, Stable Diffusion generates 512 x 512 pixel images (aspect ratio 1:1) by default, but it sometimes produces images with strange compositions that look as if a 1:1 region had been forcibly cut out of an image with a different aspect ratio. This is because, when the model is trained, the training images are cropped to a 1:1 aspect ratio so that multiple images can be processed at once, which optimizes GPU efficiency.

This 1:1 cropping is often done around the center of the image, as shown in the image below. As a result, even when the image shows 'a knight wearing a crown', the cropped image may not contain the crown. NovelAI therefore seems to have mitigated this problem somewhat by using random cropping instead of center cropping.
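The difference between the two cropping strategies can be sketched in a few lines of Python. This is only an illustration, not NovelAI's training code: the function names and the 512 x 768 example image are assumptions made here, and crop boxes are expressed as (left, top, right, bottom) in pixels.

```python
import random


def center_crop_box(width, height):
    """Largest square crop taken from the middle of the image."""
    side = min(width, height)
    left = (width - side) // 2
    top = (height - side) // 2
    return (left, top, left + side, top + side)


def random_crop_box(width, height, rng=random):
    """Largest square crop placed at a random position along the longer axis."""
    side = min(width, height)
    left = rng.randint(0, width - side)  # randint is inclusive on both ends
    top = rng.randint(0, height - side)
    return (left, top, left + side, top + side)


# Hypothetical portrait image, 512 wide and 768 tall, with the crown near the top edge.
print(center_crop_box(512, 768))  # (0, 128, 512, 640): the top 128 rows are always lost
print(random_crop_box(512, 768))  # the top edge varies between 0 and 256 from call to call
```

With a center crop, anything in the top 128 rows of this example is never seen during training. With a random crop, those rows are included in at least some of the crops.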

Furthermore, to address this aspect ratio problem, NovelAI implemented custom batch generation code for the dataset. Stable Diffusion defines a maximum image size of 512 x 512 pixels, whereas NovelAI defines a maximum image size of 512 x 768 pixels with a maximum dimension of 1024 pixels. Because increasing the maximum image size requires a lot of VRAM, the algorithm appears to have been tuned to keep GPU processing efficient.
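One way to build batches under these limits is to sort images into resolution "buckets" so that every image in a batch shares the same shape. The sketch below is a guess at the general idea, not NovelAI's actual code: the 512 x 768 area limit and the 1024 maximum dimension come from the text above, while the 64-pixel step, the 256-pixel minimum side, the nearest-aspect-ratio rule, and all function names are assumptions made for illustration.

```python
from collections import defaultdict


def make_buckets(max_area=512 * 768, max_dim=1024, min_dim=256, step=64):
    """List (width, height) shapes whose area and sides stay within the limits."""
    buckets = set()
    width = min_dim
    while width <= max_dim:
        # Largest height (a multiple of `step`) that keeps width * height within max_area.
        height = min(max_dim, (max_area // width) // step * step)
        if height >= min_dim:
            buckets.add((width, height))
            buckets.add((height, width))  # same shape, rotated
        width += step
    return sorted(buckets)


def nearest_bucket(width, height, buckets):
    """Pick the bucket whose aspect ratio is closest to the image's."""
    aspect = width / height
    return min(buckets, key=lambda b: abs(b[0] / b[1] - aspect))


def group_by_bucket(image_sizes, buckets):
    """Map each bucket to the images that would be resized and batched at that shape."""
    groups = defaultdict(list)
    for name, (w, h) in image_sizes.items():
        groups[nearest_bucket(w, h, buckets)].append(name)
    return dict(groups)


buckets = make_buckets()
# Hypothetical file names and sizes, used only to show the grouping.
sizes = {"knight.png": (1000, 1500), "landscape.png": (1920, 1080)}
print(group_by_bucket(sizes, buckets))
```

Because every bucket has roughly the same pixel count, batches drawn from a single bucket use a similar amount of VRAM regardless of aspect ratio.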

In addition, the maximum prompt length has been extended to three times that of the original Stable Diffusion, so more information can be packed into the prompt and the image can be controlled more finely.
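The text above does not say how the longer prompt is fed to the text encoder. One common approach, shown here purely as an assumption, is to split the token sequence into several windows that each fit CLIP's 77-token context (75 prompt tokens plus a start and an end token). The function name is hypothetical, and the token ids 49406 and 49407 are taken to be the start and end ids of the CLIP tokenizer.

```python
CHUNK_TOKENS = 75               # 77-token CLIP window minus the start and end tokens
BOS_ID, EOS_ID = 49406, 49407   # assumed start/end token ids of the CLIP tokenizer


def split_into_windows(token_ids, max_windows=3):
    """Split prompt token ids into up to `max_windows` padded 77-token windows."""
    token_ids = token_ids[: CHUNK_TOKENS * max_windows]  # drop anything past the limit
    windows = []
    # max(..., 1) makes sure an empty prompt still produces one (all-padding) window.
    for start in range(0, max(len(token_ids), 1), CHUNK_TOKENS):
        chunk = token_ids[start:start + CHUNK_TOKENS]
        padding = [EOS_ID] * (CHUNK_TOKENS - len(chunk))
        windows.append([BOS_ID] + chunk + [EOS_ID] + padding)
    return windows


# 100 made-up token ids: too long for one window, so two windows are produced.
windows = split_into_windows(list(range(1000, 1100)))
print(len(windows), [len(w) for w in windows])
```

Each window would then be encoded separately and the results concatenated, tripling the usable prompt length from 75 to 225 tokens under these assumptions.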

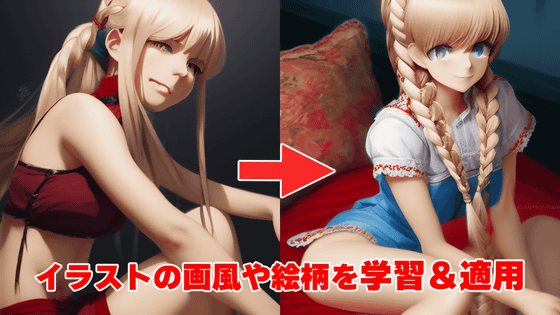

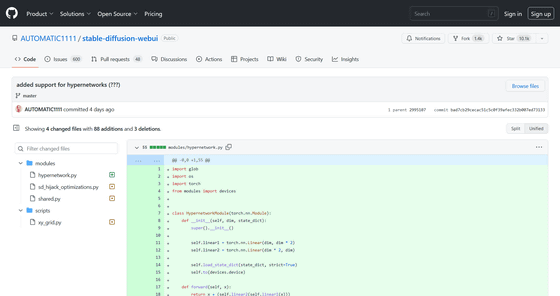

In addition, NovelAI has been developing a module called 'HyperNetworks' as a new way to control what the model generates. HyperNetworks means "applying a single small neural network at multiple points in a large network". The idea was originally tried on the text-generation transformer models used for automatic novel writing, with repeated experiments on various network configurations. In that setting, however, the problems were reportedly that the model could not generalize sufficiently and that its overall learning capacity was very small.

By applying HyperNetworks to the image generation AI Stable Diffusion, however, it was apparently possible to gain more learning capacity while keeping performance sufficient for a production environment. In other words, NovelAI's image generation accuracy is much higher than that of Stable Diffusion and Waifu-Diffusion because the network configuration of the model has been fundamentally improved.
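The idea of reusing one small network at several points of a larger one can be sketched in plain Python. Everything here is illustrative: where the small network is attached in NovelAI's model is not stated above, and the class names, layer sizes, residual form, and zero-initialised output layer are assumptions chosen to keep the example short.

```python
import random


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


class SmallNet:
    """Tiny two-layer network that adds a learned correction to its input."""

    def __init__(self, dim, hidden, rng):
        self.w1 = [[rng.gauss(0, 0.1) for _ in range(dim)] for _ in range(hidden)]
        # Assumption: a zero output layer, so the correction starts at exactly zero.
        self.w2 = [[0.0] * hidden for _ in range(dim)]

    def __call__(self, x):
        h = [max(0.0, v) for v in matvec(self.w1, x)]  # ReLU
        delta = matvec(self.w2, h)
        return [xi + di for xi, di in zip(x, delta)]


class FrozenLayer:
    """Stand-in for one layer of the large, already-trained network."""

    def __init__(self, dim, rng):
        self.w = [[rng.gauss(0, 0.5) for _ in range(dim)] for _ in range(dim)]

    def __call__(self, x):
        return matvec(self.w, x)


def run(layers, x, hyper=None):
    """Run the large network, applying the same small network before every layer."""
    for layer in layers:
        if hyper is not None:
            x = hyper(x)
        x = layer(x)
    return x


rng = random.Random(0)
layers = [FrozenLayer(4, rng) for _ in range(3)]
hyper = SmallNet(dim=4, hidden=8, rng=rng)
x = [1.0, -2.0, 0.5, 3.0]
print(run(layers, x) == run(layers, x, hyper))  # True until hyper.w2 is trained
```

Only the small network's weights would be trained, while the large network stays frozen, which keeps the added module much smaller than the model it steers.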

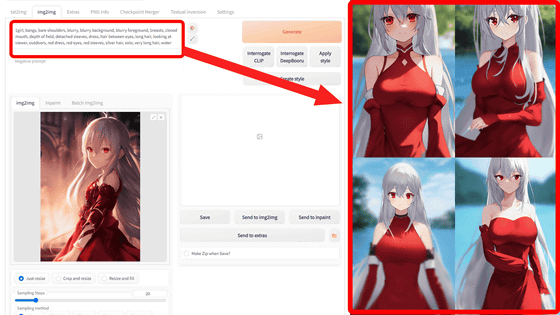

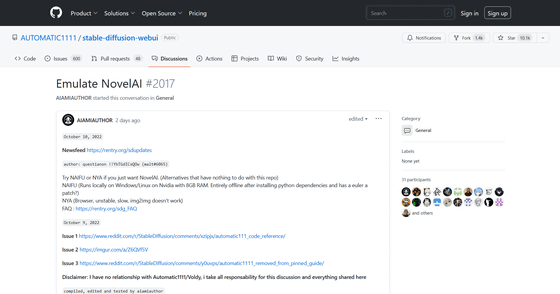

Immediately after the NovelAI leak, a 'Hypernetwork' function that can load the leaked NovelAI model was added to the 'AUTOMATIC1111 version Stable Diffusion web UI', an unofficial web UI tool for Stable Diffusion that is being updated at a tremendous pace. This makes it possible to reproduce NovelAI with the AUTOMATIC1111 version Stable Diffusion web UI.

added support for hypernetworks (???) AUTOMATIC1111/stable-diffusion-webui@bad7cb2 GitHub

https://github.com/AUTOMATIC1111/stable-diffusion-webui/commit/bad7cb29cecac51c5c0f39afec332b007ed73133

Emulate NovelAI · Discussion #2017 · AUTOMATIC1111/stable-diffusion-webui · GitHub

https://github.com/AUTOMATIC1111/stable-diffusion-webui/discussions/2017

in AI, Software, Web Service, Creation, Web Application, Posted by log1i_yk