Is the claim that 'Facebook has successfully removed hate speech' a lie?

by Mike Mozart

Facebook's AI cannot even tell cockfighting apart from car accidents, and Facebook's claim that it 'removes hate speech and violent content' is deliberately misleading, according to an article published on October 17, 2021 by the business newspaper the Wall Street Journal. Facebook has published a blog post strongly disputing this.

Facebook Says AI Will Clean Up the Platform. Its Own Engineers Have Doubts. --WSJ

https://www.wsj.com/articles/facebook-ai-enforce-rules-engineers-doubtful-artificial-intelligence-11634338184

Hate Speech Prevalence Has Dropped by Almost 50% on Facebook --About Facebook

https://about.fb.com/news/2021/10/hate-speech-prevalence-dropped-facebook/

Facebook disputes report that its AI can't detect hate speech or violence consistently --The Verge

https://www.theverge.com/2021/10/17/22731214/facebook-disputes-report-artificial-intelligence-hate-speech-violence

On October 17, 2021, the Wall Street Journal published an article stating that 'Facebook's AI has had little success in removing content such as hate speech and violent images.'

Facebook has consistently stated that it is actively removing policy-violating content such as hate speech and violent content. It also reports that it uses machine learning to detect hate speech.

However, the Wall Street Journal reported that, according to internal documents it obtained, Facebook was able to remove only 3-5% of all posts containing hate speech and less than 1% of all content containing violence. The internal documents state that in 2019 Facebook cut the human resources it devoted to hate speech and increased its reliance on AI, which allowed it to make it appear, statistically, that 'hate speech is decreasing.' The report also points to technical problems: Facebook's AI is at a level where it cannot tell the difference between cockfighting and car accidents.

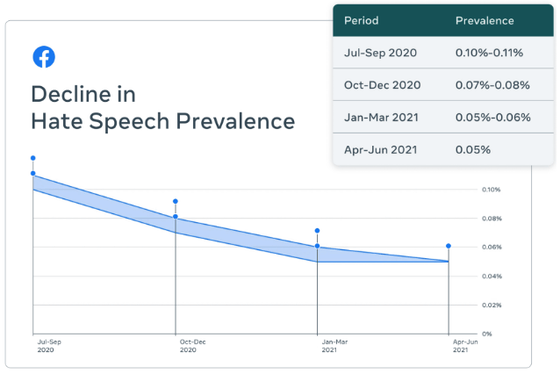

In response to the Wall Street Journal report, Facebook's vice president of integrity, Guy Rosen, published a post explaining that the narrative that 'the technology Facebook uses in the fight against hate speech is inadequate, and Facebook deliberately misrepresents its progress' is wrong. According to Rosen, removing content is just one way to reduce hate speech, and Facebook focuses on the prevalence of hate speech and on how to use a variety of tools to keep it from spreading. According to the most recent figures, hate speech accounted for 0.05% of content viewed, in other words about 5 in every 10,000 views, a decrease of almost 50% over the last three quarters, Rosen said.

Facebook argues that the percentage of content that contains hate speech, not the amount of content deleted, is the way to objectively assess the progress of its hate speech measures. 'These documents show that our work is a multi-year effort. We will never be perfect, but our teams are constantly developing our systems, identifying problems, and coming up with solutions,' Rosen said.
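The arithmetic behind the prevalence figure can be checked with a short sketch. The function names below are hypothetical and chosen only for illustration; the only numbers taken from the article are the 0.05% prevalence and the roughly 50% decrease. The "earlier rate" is simply what those two figures would imply if the drop were exactly 50%, not a number reported here.

```python
import math


def prevalence(violating_views: int, total_views: int) -> float:
    """Share of all content views that landed on violating content."""
    if total_views <= 0:
        raise ValueError("total_views must be positive")
    return violating_views / total_views


def per_ten_thousand(rate: float) -> float:
    """Express a rate as 'views per 10,000 views'."""
    return rate * 10_000


def implied_earlier_rate(current_rate: float, relative_drop: float) -> float:
    """Rate before a relative decrease (e.g. 0.5 for a 50% drop)."""
    if not 0 <= relative_drop < 1:
        raise ValueError("relative_drop must be in [0, 1)")
    return current_rate / (1 - relative_drop)


# 5 violating views out of 10,000 is the 0.05% figure quoted by Rosen.
current = prevalence(5, 10_000)
print(f"prevalence: {current:.4%}")
print(f"per 10,000 views: {per_ten_thousand(current):.1f}")

# Assumption for illustration: treat 'almost 50%' as exactly 50%.
earlier = implied_earlier_rate(current, 0.5)
print(f"implied earlier prevalence: {earlier:.4%}")
```

Prevalence counts how often violating content is seen, while the 3-5% figure in the internal documents describes how much of it is removed, so the two numbers measure different things and can move independently.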

in Software, Web Service, Web Application, Posted by darkhorse_log