MIT develops 'universal decoder' for all kinds of error detection and correction

Data that travels over the Internet, such as emails and YouTube videos, inevitably picks up noise during transmission, for example from radio interference, so the receiving side has to detect and correct the resulting errors. A research team at the Massachusetts Institute of Technology (MIT) has developed a 'universal decoder' that can handle any kind of error detection and correction.

A universal system for decoding any type of data sent across a network | MIT News | Massachusetts Institute of Technology

https://news.mit.edu/2021/grand-decoding-data-0909

Noise is unavoidable when transmitting files, so error detection and correction is used to make sure the receiver ends up with the correct, noise-free data. There are many types of error-correcting codes, each with its own decoder. According to MIT, the decoding algorithms are so complex that dedicated hardware has to be developed for each one. The research team therefore set out to build a decoder, and hardware to run it, that works with any kind of error-correcting code.
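For a concrete picture of what a code-specific decoder looks like, here is a minimal Python sketch of the textbook Hamming(7,4) code, chosen only for illustration (the article does not say which codes MIT tested). Its decoder computes a "syndrome" whose arithmetic only makes sense for this one code; a different code would need different decoding logic, which is the kind of per-code design the article says ends up in dedicated hardware. The function names are our own.

```python
# Hamming(7,4): 4 data bits + 3 parity bits; parity bits sit at positions 1, 2, 4.

def hamming74_encode(data):
    """Encode 4 data bits [d1, d2, d3, d4] into a 7-bit codeword."""
    d1, d2, d3, d4 = data
    p1 = d1 ^ d2 ^ d4          # covers positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4          # covers positions 2, 3, 6, 7
    p4 = d2 ^ d3 ^ d4          # covers positions 4, 5, 6, 7
    return [p1, p2, d1, p4, d2, d3, d4]

def hamming74_decode(word):
    """Correct at most one flipped bit and return the 4 data bits."""
    w = list(word)
    # Re-check each parity group (list indices are positions minus 1).
    s1 = w[0] ^ w[2] ^ w[4] ^ w[6]
    s2 = w[1] ^ w[2] ^ w[5] ^ w[6]
    s4 = w[3] ^ w[4] ^ w[5] ^ w[6]
    syndrome = s1 + 2 * s2 + 4 * s4   # 1-based position of a single flipped bit, 0 if none
    if syndrome:
        w[syndrome - 1] ^= 1
    return [w[2], w[4], w[5], w[6]]

data = [1, 0, 1, 1]
received = hamming74_encode(data)
received[5] ^= 1                       # channel noise flips one bit
print(hamming74_decode(received) == data)   # True
```

The syndrome trick only works because of how this particular code places its parity bits; that tight coupling between code and decoder is what a universal decoder aims to remove.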

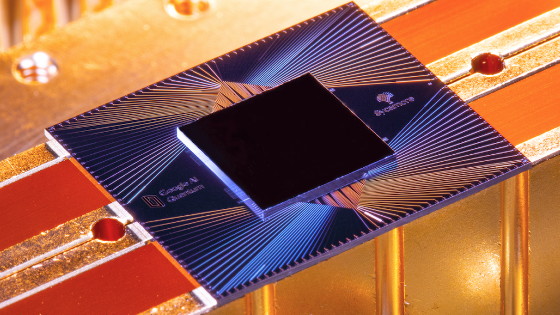

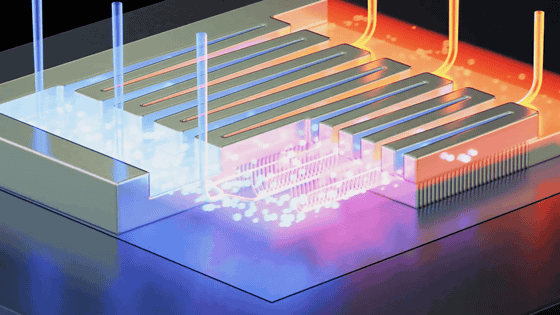

What the team announced is a hardware decoder chip built on GRAND (Guessing Random Additive Noise Decoding), a universal decoding algorithm. Conventional decoders work out the transmitted data by analyzing the received data with logic tailored to the specific code that was used. GRAND instead guesses the noise: it tries the noise patterns most likely to have occurred first, removes each guess from the received data, and stops as soon as the result is a valid codeword, which lets it carry out error correction quickly for any code. The research team compares this approach to car repair: "If something is wrong with your car, no one starts by going over the blueprints. You first check whether it is out of gas, and then whether the battery is dead."
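The core GRAND loop can be sketched in a few lines of Python. This is a simplified, hard-decision version written for illustration, not the MIT chip's implementation: it assumes a binary symmetric channel (each bit flips independently with probability below 0.5), so patterns with fewer flipped bits are more likely and are guessed first, and it gives up after a hypothetical `max_weight` limit. The function names are our own. The only code-specific ingredient is the membership test, here a parity-check matrix `H`.

```python
from itertools import combinations

def is_codeword(H, word):
    """A word is a codeword iff every parity check (row of H) sums to 0 mod 2."""
    return all(sum(h & b for h, b in zip(row, word)) % 2 == 0 for row in H)

def grand_decode(H, received, max_weight=3):
    """Guess noise patterns from most to least likely; return the first
    candidate that passes the codebook check, plus the number of guesses.

    Assumes a binary symmetric channel with flip probability < 0.5, so a
    pattern with fewer flipped bits is always more likely than one with more.
    """
    n = len(received)
    guesses = 0
    for weight in range(max_weight + 1):            # 0 flips, then 1, then 2, ...
        for positions in combinations(range(n), weight):
            guesses += 1
            candidate = list(received)
            for p in positions:
                candidate[p] ^= 1                   # remove guessed noise (XOR over GF(2))
            if is_codeword(H, candidate):
                return candidate, guesses
    return None, guesses                            # abandon: nothing within max_weight flips

# Example: parity-check matrix of a Hamming(7,4) code (bit positions 1..7).
H = [
    [1, 0, 1, 0, 1, 0, 1],
    [0, 1, 1, 0, 0, 1, 1],
    [0, 0, 0, 1, 1, 1, 1],
]
sent = [0, 1, 1, 0, 0, 1, 1]      # a valid codeword
received = list(sent)
received[5] ^= 1                   # channel noise flips one bit
decoded, guesses = grand_decode(H, received)
print(decoded == sent, guesses)   # True 7
```

Because GRAND only asks "is this a codeword?", swapping in a different `H` decodes a different binary linear code with no other changes. The price is that the number of guesses grows quickly as more flipped bits are allowed.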

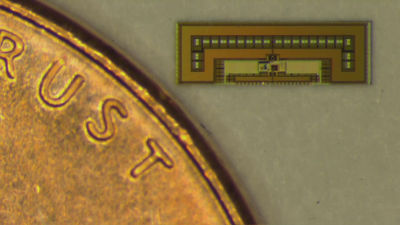

The new hardware decoder is built as a three-layer chip: one layer handles simple noise patterns, and the other two handle more complex ones. The layers operate independently, which reportedly improves power efficiency. In a demonstration, the GRAND chip decoded codes up to 128 bits long in about 1 microsecond, showing its high performance.
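The article does not say how the chip divides noise patterns among its three layers. As a rough, hypothetical illustration of why simple and complex patterns might be handled separately, the Python sketch below counts how many noise patterns a 128-bit code has for each number of flipped bits. The weight split in `TIERS` is an assumption made up for this example, not the MIT design.

```python
from math import comb

def patterns_per_tier(n, tiers):
    """Count the length-n binary noise patterns assigned to each tier.

    `tiers` maps a tier label to the numbers of flipped bits (Hamming
    weights) it covers.
    """
    return {label: sum(comb(n, w) for w in weights) for label, weights in tiers.items()}

# Hypothetical split for illustration only; not the MIT chip's actual design.
TIERS = {
    "layer 1 (0-1 flipped bits)": [0, 1],
    "layer 2 (2 flipped bits)": [2],
    "layer 3 (3 flipped bits)": [3],
}

for label, count in patterns_per_tier(128, TIERS).items():
    print(f"{label}: {count:,} patterns")
```

For a 128-bit code this prints 129, 8,128 and 341,376 patterns for the three layers, so under this split the "simple" layer has very little to check while the "complex" layers face orders of magnitude more candidates, which is one plausible reason to give them separate hardware.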

Going forward, the research team plans to extend GRAND to longer codes and to optimize the chip's structure to improve power efficiency, with the goal of making decoding more efficient in fields such as 5G networks and IoT.
