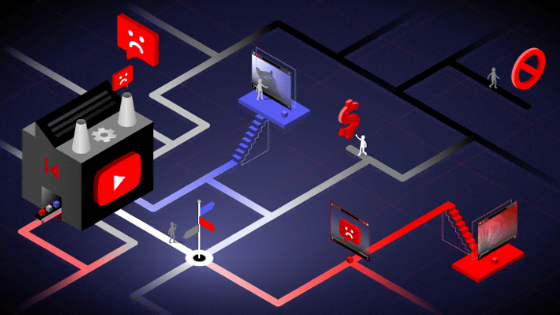

It turns out that YouTube recommends videos that violate its own policies

Mozilla, the developer of the Firefox web browser, conducted a 10-month study of the videos that appear in YouTube's recommendations, based on data contributed by users. It found that YouTube recommends videos that are inappropriate for users. The tendency is especially pronounced for non-English-speaking users, and Mozilla says there is a "problem with the algorithm."

YouTube Regrets Report --Mozilla_YouTube_Regrets_Report.pdf

(PDF file)

Mozilla Foundation --Mozilla Investigation: YouTube Algorithm Recommends Videos that Violate the Platform's Very Own Policies

https://foundation.mozilla.org/en/blog/mozilla-investigation-youtube-algorithm-recommends-videos-that-violate-the-platforms-very-own-policies/

YouTube's Search Algorithm Directs Viewers to False and Sexualized Videos, Study Finds --WSJ

https://www.wsj.com/articles/youtubes-search-algorithm-directs-viewers-to-false-and-sexualized-videos-study-finds-11625644803

In 2019, Mozilla released a study showing that the videos YouTube's algorithm recommends to users include content promoting racism, violence, and conspiracy theories. Although Mozilla proposed improvements to YouTube, the algorithm had not changed about a year later. So in September 2020, to investigate which videos YouTube displays as recommendations, Mozilla released 'RegretsReporter,' an add-on for the Firefox and Chrome browsers. Through this add-on, Mozilla asked users to report inappropriate videos so it could identify patterns in the recommendations.

Mozilla releases dedicated add-on to investigate YouTube video recommendation algorithm - GIGAZINE

On July 7, 2021, Mozilla published the results of its RegretsReporter study, based on data from approximately 37,000 users. According to Mozilla, users reported a total of 3,362 videos as inappropriate, and 71% of them had been shown to the users as recommendations. In addition, users in non-English-speaking countries, especially Brazil, Germany, and France, were 60% more likely to encounter inappropriate videos than users in English-speaking countries.

The most common category of videos judged inappropriate was misinformation; other categories included violence, offensive language, and sexual content. About 200 of the reported videos have since been removed from YouTube, but together they had recorded 160 million views.

According to YouTube, users spend more than 60% of their daily viewing time on videos shown as recommendations, and in 2020 YouTube generated $19.7 billion in revenue from recommended videos, so the company places great emphasis on the profitability of recommendations. In response to the study, a YouTube spokeswoman said, 'YouTube is constantly working to improve the user experience. Over the past year alone, we have made more than 30 different changes to reduce harmful content. Our systems detect 94% of videos that violate our policies and remove them before they have been viewed 10 times.'

Brandi Geurkink, a Mozilla representative, said, 'YouTube not only fails to remove videos that violate its policies, it actively recommends them. I hope to convince the general public and lawmakers that YouTube's algorithm needs to be made more transparent.'


Posted by log1p_kr