Study finds that people corrected for sharing fake news on Twitter 'become even more biased in their opinions'

Perverse Downstream Consequences of Debunking: Being Corrected by Another User for Posting False Political News Increases Subsequent Sharing of Low Quality, Partisan, and Toxic Content in a Twitter Field Experiment

(PDF file) https://dl.acm.org/doi/pdf/10.1145/3411764.3445642

To study how corrections of fake news affect subsequent behavior, a research team led by Mohsen Mosleh of the University of Exeter identified 2,978 Twitter users who had spread any of 11 news stories known to be false. After excluding those who had shared the stories in order to question them, approximately 2,000 Twitter users who appeared to genuinely believe the fake news were selected as subjects for the experiment.

The research team then created multiple Twitter accounts to correct fake news and ran the following experiment: every time a subject posted a retweet spreading fake news, a correction account sent a reply that included a link to the fact-checking site Snopes.com. To make the correction accounts look like real Twitter users, the team reportedly created the accounts three months before the start of the experiment and had each account gain 1,000 or more followers with real profiles.

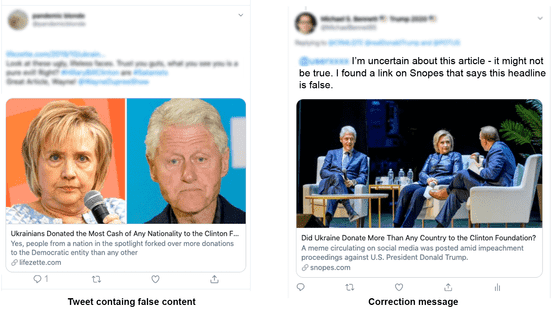

In one example of the interaction between a subject and a correction account, the fake news shared by the subject in a retweet (left) claims that 'Ukraine is the top country to contribute to the Clinton Foundation', while the correction account's reply (right) cites a Snopes.com page and points out that the news is incorrect.

After the experiment was completed, the team used a 'news source quality score' created by professional fact-checkers to examine the 'quality' and 'bias' of the subjects' retweets after they had been corrected, and reported that both had deteriorated significantly. In addition, an analysis of the 'toxicity' of the subjects' language using the Perspective API from Google's Jigsaw confirmed that subjects who received a correction used more abusive and coarse language afterward.

Regarding this result, the research team said, 'Previous research showed that correcting errors can improve people's beliefs about fake news, but this study makes clear that corrections can, on the contrary, have negative consequences: they can reduce the quality of the content users spread and increase the bias and toxicity of their language. This suggests that people who are told by others that they are wrong turn their attention away from the question of truth, which is an important challenge for the social correction approach.'

in Web Service, Posted by log1l_ks