Cloudflare's record of improving Linux kernel disk encryption offers a glimpse into 'the deepest part of the OS'

Encrypting data is essential for companies that provide services over the Internet, but server disks are sometimes left unencrypted because encryption degrades performance.

Speeding up Linux disk encryption

https://blog.cloudflare.com/speeding-up-linux-disk-encryption/

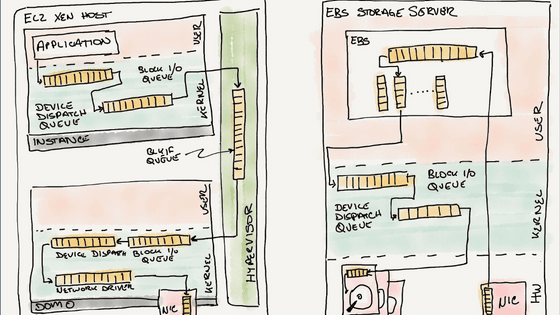

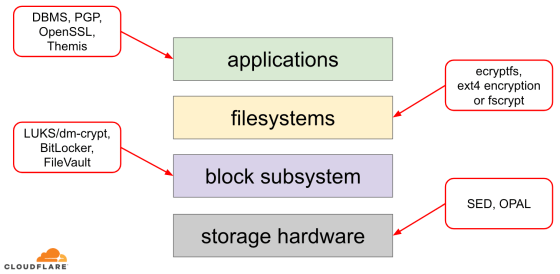

The storage stack can be divided into layers such as 'application', 'file system', 'block subsystem', and 'physical storage', much like the OSI reference model in networking. Each higher layer sees the processing of the layers below it only as an abstraction, and encryption can be performed at any layer. Encryption at the higher layers is usually more flexible, allowing per-file encryption for example, but tends to consume more machine resources. In contrast, encryption at the lower layers is less flexible, but consumes fewer machine resources and is easier to manage.

In general, application or file system encryption is preferred for its flexibility. For a cloud company like Cloudflare, however, simplicity and security matter more, and flexibility such as per-file encryption is unnecessary, so all disks are apparently encrypted at the block level. In addition, since encryption by hardware is often

At one point, Cloudflare's Ignat Korchagin noticed that the servers' disk read/write speed was slower than expected and investigated. The cause turned out to be 'dm-crypt', the Linux disk encryption subsystem. dm-crypt is a Linux kernel device mapper target that encrypts data on write requests and decrypts it on read requests.

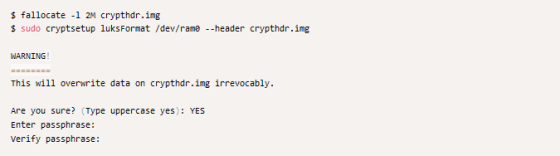

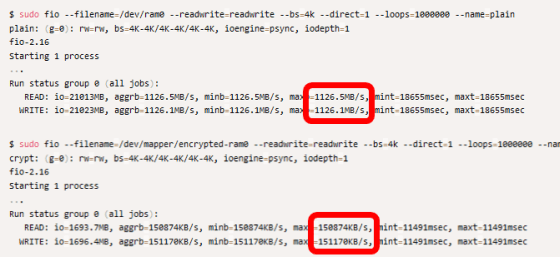

To measure the performance of dm-crypt itself accurately, Ignat benchmarked on a disk emulated in RAM rather than on HDDs, which vary from unit to unit. First, create a 4GB disk in RAM. The created disk appears as '/dev/ram0'.

Encrypt the created disk using the 'cryptsetup' command.

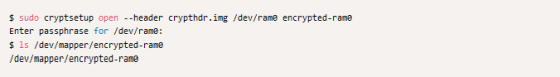

Open the encrypted disk so that its decrypted view appears at '/dev/mapper/encrypted-ram0'. Comparing the read/write speed of '/dev/ram0' and '/dev/mapper/encrypted-ram0' shows the overhead of dm-crypt.

A quick comparison shows that the encrypted disk reaches only about one-seventh of the unencrypted read/write speed: about 1126MB/s before encryption versus about 147MB/s after.
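The setup steps above can be sketched as a small Python helper that only builds the command lines without running them. The exact commands used in the original benchmark are not reproduced in this article, so the `brd` module parameters and the plain `luksFormat`/`open` invocations below are assumptions, and the function name `benchmark_setup_commands` is hypothetical. Actually running these commands would require root and would destroy any data on the RAM disk.

```python
import shlex

RAM_DISK = "/dev/ram0"           # RAM-backed block device named in the article
MAPPED_NAME = "encrypted-ram0"   # appears as /dev/mapper/encrypted-ram0


def benchmark_setup_commands(size_gib=4):
    """Return the setup commands as argument lists (nothing is executed)."""
    size_kib = size_gib * 1024 * 1024  # brd's rd_size parameter is given in KiB
    return [
        # Assumption: load the RAM block device driver with one disk of size_gib.
        ["modprobe", "brd", "rd_nr=1", f"rd_size={size_kib}"],
        # Format the RAM disk as a LUKS volume (prompts for a passphrase).
        ["cryptsetup", "luksFormat", RAM_DISK],
        # Open it so that the decrypted view appears under /dev/mapper.
        ["cryptsetup", "open", RAM_DISK, MAPPED_NAME],
    ]


if __name__ == "__main__":
    for argv in benchmark_setup_commands():
        print(shlex.join(argv))
```

Printing the commands instead of executing them keeps the sketch safe to run on any machine; the output can be reviewed before anything is typed into a root shell.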

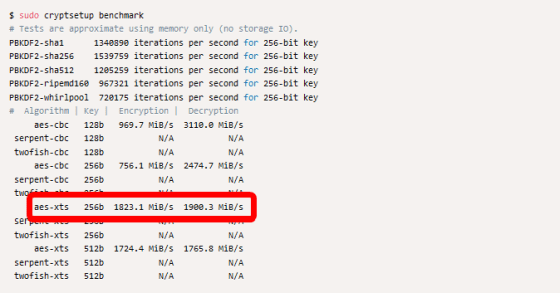

Running the benchmark of available cryptographic algorithms built into cryptsetup showed that 256-bit 'aes-xts' was the fastest. However, even with aes-xts, read/write speed after encryption did not improve. In theory, reads and writes combined should reach 696MB/s, but in practice they reach only 294MB/s.

So Ignat decided to experiment with options that might affect dm-crypt's performance. He tried one that seemed relevant, 'encrypt on the same CPU that issued the IO request', but read/write speed did not improve. The option that disables offloading encrypted writes to a separate thread did not improve read/write speed either.

He posted a question to the
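The figures quoted above can be checked with a little arithmetic. The article does not say how the theoretical 696MB/s was derived; the sketch below assumes a simple serial model in which every byte passes through the RAM disk and then the cipher, so that the combined rate is the product of the two rates divided by their sum. That model, and the function names, are assumptions made for illustration.

```python
RAW_MBPS = 1126        # unencrypted RAM disk (from the article)
ENCRYPTED_MBPS = 147   # through dm-crypt (from the article)
EXPECTED_MBPS = 696    # theoretical combined figure (from the article)
MEASURED_MBPS = 294    # measured combined figure (from the article)


def slowdown(raw, encrypted):
    """How many times slower the encrypted disk is."""
    return raw / encrypted


def serial_throughput(a, b):
    """Combined rate of two stages run back to back (assumed model)."""
    return a * b / (a + b)


def implied_cipher_speed(raw, combined):
    """Cipher speed that would yield `combined` under the serial model."""
    return raw * combined / (raw - combined)


if __name__ == "__main__":
    print(f"slowdown: {slowdown(RAW_MBPS, ENCRYPTED_MBPS):.2f}x")
    cipher = implied_cipher_speed(RAW_MBPS, EXPECTED_MBPS)
    print(f"implied cipher speed: {cipher:.0f} MB/s")
    print(f"measured / expected: {MEASURED_MBPS / EXPECTED_MBPS:.0%}")
```

Under this assumed model, the 696MB/s figure corresponds to a cipher that by itself sustains roughly 1.8GB/s, which makes the measured result (less than half of the expectation) look like overhead in dm-crypt rather than in the cipher.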

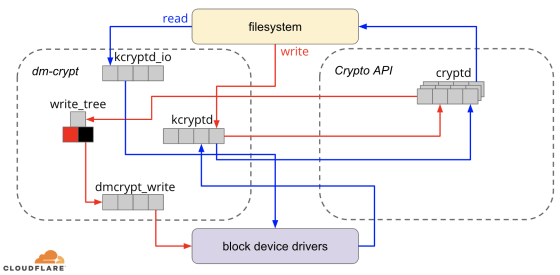

When Ignat traced the processing flow through the dm-crypt source code, it turned out that dm-crypt is not a simple proxy-like structure that encrypts and decrypts data, but one that passes data through queues several times. On a write, for example, the data is first held in the 'kcryptd' queue, then encrypted with the Crypto API, after which the encrypted data is stored in the red-black tree 'write_tree' and then passed to the 'dmcrypt_write' queue. There are four queues on the write path and three on the read path, so Ignat began to consider whether these queues could be removed to improve speed.

The queues were presumably implemented for a reason, so Ignat followed the Git change history. kcryptd was commented that 'it is necessary to decrypt in an

A 2006 patch began using kcryptd for IO requests as well as for encryption. The purpose of the change was to reduce kernel stack usage, but Ignat speculates that this may no longer be a problem because the Linux kernel stack was expanded on the x86 platform in 2014.

kcryptd_io is a queue added in 2007, apparently because a single queue could not cope with a large number of requests waiting for memory allocation. However, others had apparently complained before about the performance degradation caused by dm-crypt's queues, and in 2011 dm-crypt was changed so that it 'does not keep read and write blocks in the queue if there is enough memory.'

In 2015, dm-crypt began holding writes in dmcrypt_write before sending them down the stack. On multi-core processors, encryption of write data finishes in a different order from the one in which the writes were issued, so the write requests need to be rearranged. At the time, sequential disk access was much faster than random disk access, so it made sense for dm-crypt to reorder out-of-order requests into sequential ones. write_tree was implemented for the same reason. However, this applies to HDDs; with recent NVMe SSDs there is said to be little need to worry about the speed difference between sequential and random access. Moreover, this change was optimized for CFQ, one of the IO schedulers, and in 2018 CFQ was removed from the Linux kernel and replaced by higher-performing IO schedulers.

The investigation showed that the design of dm-crypt no longer suited today's Linux, so Ignat eliminated the unnecessary queues and the asynchronous operation of the Crypto API, reshaping dm-crypt to fit its original purpose of 'encrypting and decrypting read/write requests'. To achieve this, a new option was added to dm-crypt so that all queues and threads can be bypassed.
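The reordering idea described above can be illustrated with a minimal sketch. dm-crypt keeps pending writes in a red-black tree inside the kernel; the example below swaps in Python's `heapq` as a stand-in ordered structure, and the function name and the `(sector, data)` tuple layout are hypothetical.

```python
import heapq


def reorder_by_sector(completed):
    """Yield (sector, data) pairs in ascending sector order.

    `completed` holds writes in the order their encryption finished,
    which on a multi-core machine need not match the issue order.
    """
    heap = list(completed)
    heapq.heapify(heap)           # order by sector, the first tuple element
    while heap:
        yield heapq.heappop(heap)


if __name__ == "__main__":
    # Encryption finished out of order: sector 24 first, then 8, then 16.
    finished = [(24, b"c"), (8, b"a"), (16, b"b")]
    for sector, data in reorder_by_sector(finished):
        print(sector, data)
```

On a spinning disk, submitting the writes in ascending sector order avoids needless seeks, which is the motivation the article gives for the extra queue.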
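The difference between routing a write through intermediate queues and handling it inline can be sketched with a toy model. This is not the dm-crypt code: the XOR "cipher" is a placeholder with no security value, only two queues are modeled for brevity, and all function names are hypothetical. The point is only that both paths produce the same result while the inline path skips every queue hop.

```python
from collections import deque


def toy_encrypt(data: bytes, key: int = 0x5A) -> bytes:
    """Placeholder transform standing in for the real cipher (NOT secure)."""
    return bytes(b ^ key for b in data)


def write_queued(requests):
    """Pass each request through two queues; return (results, queue hops)."""
    waiting_for_crypto = deque(requests)   # stand-in for the encryption queue
    waiting_for_submit = deque()           # stand-in for the write queue
    hops = 0
    while waiting_for_crypto:
        waiting_for_submit.append(toy_encrypt(waiting_for_crypto.popleft()))
        hops += 1
    submitted = []
    while waiting_for_submit:
        submitted.append(waiting_for_submit.popleft())
        hops += 1
    return submitted, hops


def write_inline(requests):
    """Encrypt and submit in the caller's context, with no queue hops."""
    return [toy_encrypt(r) for r in requests], 0


if __name__ == "__main__":
    blocks = [b"block-0", b"block-1", b"block-2"]
    queued, queued_hops = write_queued(blocks)
    inline, inline_hops = write_inline(blocks)
    print(queued == inline, queued_hops, inline_hops)
```

In the real kernel each hop also implies a context switch or a wake-up of another thread, which this single-threaded model does not attempt to measure.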

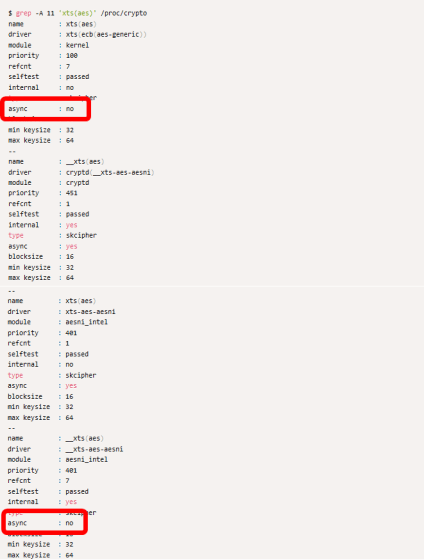

There were some problems during the improvement work. To make the Crypto API operate synchronously, a synchronous cryptographic driver has to be selected, and among the available drivers '__xts-aes-aesni' turned out to be the fastest synchronous one.

However, it turned out that __xts-aes-aesni cannot be used without wrapping it in code inside the Crypto API. In addition, an option has to be added to dm-crypt to call the wrapped code.

Also, '

To solve these two problems, Ignat created a new kernel module, 'xtsproxy', which wraps the code inside the Crypto API as follows: 'If the FPU is available, use __xts-aes-aesni; otherwise use xts.'
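The dispatch rule quoted above can be written down as a few lines of logic. The real xtsproxy is a kernel module written in C; the Python below is only an illustration of the decision, and the function name `pick_driver` and the boolean `fpu_available` parameter are hypothetical.

```python
FAST_DRIVER = "__xts-aes-aesni"   # fast driver, needs the FPU
FALLBACK_DRIVER = "xts"           # used when the FPU cannot be used


def pick_driver(fpu_available: bool) -> str:
    """Choose the driver according to the rule quoted in the article."""
    return FAST_DRIVER if fpu_available else FALLBACK_DRIVER


if __name__ == "__main__":
    for available in (True, False):
        print(available, pick_driver(available))
```

Making this choice on each request lets the fast path be used whenever possible while still handling the contexts where the FPU is off limits.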

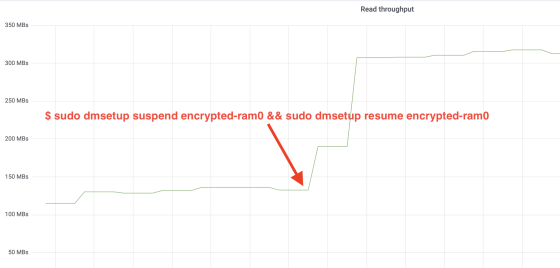

Loading the modified dm-crypt together with the custom kernel module xtsproxy and applying the settings to the encrypted disk improved both read and write throughput dramatically.

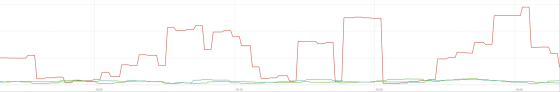

Ignat also compared the cache response speed of the servers. The speed without encryption is shown by a green line, the speed with encryption before the improvement by a red line, and the speed with encryption after the improvement by a blue line. The improvement in speed is significant.

This case clearly shows that rethinking the structure of software can significantly improve system performance. Ignat commented in the blog post, 'If you are having similar symptoms, please apply the patch released by Cloudflare and give us feedback.'


in Software, Posted by darkhorse_log