Amazon and MIT researchers clash over alleged discrimination in Amazon's face recognition software


Amazon has developed a low-cost face recognition API, 'Amazon Rekognition', and provides it to law enforcement agencies such as US police departments. Researchers at the Massachusetts Institute of Technology (MIT) have published experimental results showing that Amazon's face recognition software is less accurate for dark-skinned women than competing products. Amazon immediately objected, the MIT side rebutted that objection the next day, and the dispute is deepening.

Actionable Auditing: Investigating the Impact of Publicly Naming Biased Performance Results of Commercial AI Products - MIT Media Lab

https://www.media.mit.edu/publications/actionable-auditing-investigating-the-impact-of-publicly-naming-biased-performance-results-of-commercial-ai-products/

Face Recognition Researcher Fights Amazon Over Biased AI - The New York Times

https://www.nytimes.com/aponline/2019/04/03/us/ap-us-biased-facial-recognition.html

Face recognition systems based on machine learning are being developed competitively by large companies that hold big data, but problems persist. Google's photo application 'Google Photos', for example, tagged Black men and women as 'gorillas', forcing its developers to apologize, and every company in the field continues to struggle.

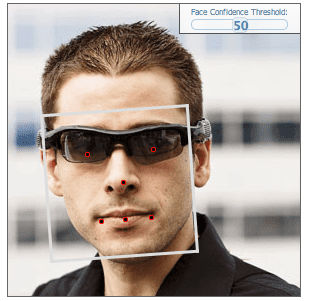

Amazon's face recognition system 'Amazon Rekognition' is no exception. It is frequently criticized for its accuracy, for example for misidentifying members of the US Congress as criminals. Because Amazon Rekognition has also been actively adopted by the government, the police, and other public agencies, concerns have been raised by Amazon shareholders and employees, members of Congress, and others. Even so, Amazon CEO Bezos has said that the company will continue to provide the service to customers, including law enforcement agencies.

Amazon executives explain to employees that they will continue to provide face recognition software to law enforcement agencies

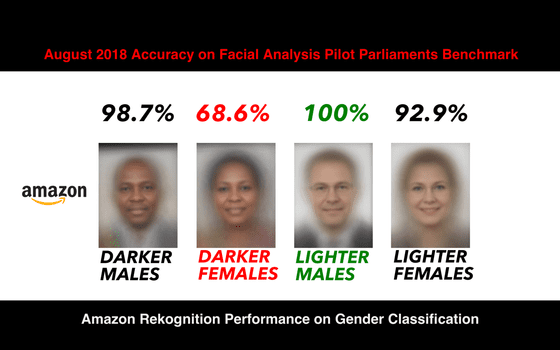

Against this backdrop, Joy Buolamwini and Inioluwa Deborah Raji, who study information science at the Massachusetts Institute of Technology, presented research results indicating that Amazon Rekognition has a racial bias. In their experiment, face photos of 1,270 people of differing gender and skin color were divided into four groups: 'light-skinned men', 'light-skinned women', 'dark-skinned men', and 'dark-skinned women'. The photos were then fed into face recognition systems developed by Microsoft, IBM, and China's Megvii, and the accuracy of each system's male or female determination was checked.

All three systems were 100% correct for light-skinned men, while accuracy was lowest for dark-skinned women: 98.48% for Microsoft, 95.9% for Megvii, and 83.03% for IBM. However, compared with the roughly 30% figure recorded for all three companies in the same survey conducted earlier by Buolamwini, accuracy has improved dramatically, and the results are regarded as showing that the bias has been substantially reduced.

In addition to these three companies, Kairos and Amazon were newly included in the experiment. According to the results, accuracy for dark-skinned women was 77.5% for Kairos and 68.63% for Amazon, far below Microsoft's 98.48%, the highest of the five.
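The audit described here comes down to measuring classification accuracy separately for each subgroup instead of over the whole dataset. The following is a minimal Python sketch of that idea, not the researchers' actual code. The function name `accuracy_by_subgroup`, the record layout, and the toy records are all assumptions made for illustration and are not data from the study.

```python
from collections import defaultdict


def accuracy_by_subgroup(records):
    """Return the share of correct gender predictions per subgroup.

    records: iterable of (subgroup, true_gender, predicted_gender) tuples.
    This layout is a hypothetical one chosen for the example.
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for subgroup, truth, predicted in records:
        total[subgroup] += 1
        if truth == predicted:
            correct[subgroup] += 1
    return {group: correct[group] / total[group] for group in total}


# Hypothetical toy records, NOT results from the study.
records = [
    ("light-skinned men", "male", "male"),
    ("light-skinned men", "male", "male"),
    ("light-skinned women", "female", "female"),
    ("light-skinned women", "female", "female"),
    ("dark-skinned men", "male", "male"),
    ("dark-skinned men", "male", "male"),
    ("dark-skinned women", "female", "male"),  # one misclassification
    ("dark-skinned women", "female", "female"),
]

rates = accuracy_by_subgroup(records)
for group, rate in rates.items():
    print(f"{group}: {rate:.0%}")
```

Reporting the four rates side by side, rather than a single overall figure, is what makes a gap between subgroups visible.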


Amazon immediately rebutted the announcement. Matt Wood, who is in charge of artificial intelligence research at Amazon Web Services, wrote on a technology blog that the experiment used an older version of Amazon Rekognition and that it conflated 'face recognition' with 'face analysis'. According to Wood, what the experiment tested was 'face analysis', which reads a photo and analyzes its attributes, whereas identifying individuals with Amazon Rekognition relies on a separate 'face recognition' function. Because the underlying technology and the data used for machine learning are completely different for 'face analysis' and 'face recognition', he argues, the experimental results are not valid. In the same post, Wood said, 'We know that face recognition technology carries risks if it is used irresponsibly. The news media should not spread controversy that leads to

In response to this objection, Buolamwini posted a counter-rebuttal on her blog, saying that 'the terms used in this field are not always consistent'. Regarding Wood's statement that 'the latest version of Amazon Rekognition was able to analyze face data of 1 million people with an accuracy of over 99%', she said, 'If you do not know the breakdown of the face data, there is no way to judge whether Amazon Rekognition is biased.' As an example, Facebook announced in 2014 that its face recognition system had an accuracy of more than 97%, but of the 1,680 people in the data used at the time, 77% were men and about 80% were Caucasian.
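The argument about the breakdown of the data can be illustrated with simple arithmetic: a headline accuracy figure is a weighted average, so a dataset dominated by one group can hide weak performance on another. The sketch below uses invented shares and accuracies chosen purely for illustration. They are assumptions and are not figures from Amazon, Facebook, or the study.

```python
def overall_accuracy(groups):
    """Weighted average accuracy.

    groups: list of (share_of_dataset, accuracy_within_group) pairs,
    where the shares sum to 1. All values used below are hypothetical.
    """
    return sum(share * accuracy for share, accuracy in groups)


# Hypothetical: the same two per-group accuracies, different dataset mixes.
skewed = [(0.8, 1.00), (0.2, 0.85)]    # 80% of photos from the well-served group
balanced = [(0.5, 1.00), (0.5, 0.85)]  # equal representation

print(f"skewed dataset:   {overall_accuracy(skewed):.3f}")
print(f"balanced dataset: {overall_accuracy(balanced):.3f}")
```

With identical per-group performance, the skewed dataset yields the higher overall number, which is why an aggregate accuracy claim says little about bias without the composition of the test data.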

by Aaron Parecki

Buolamwini has raised concerns that law enforcement agencies could make discriminatory misjudgments if they use a face recognition system whose analysis is biased by gender and skin color, and said, 'It is irresponsible for Amazon to continue providing this technology to law enforcement and government agencies.'


in Software, Web Service, Posted by log1l_ks