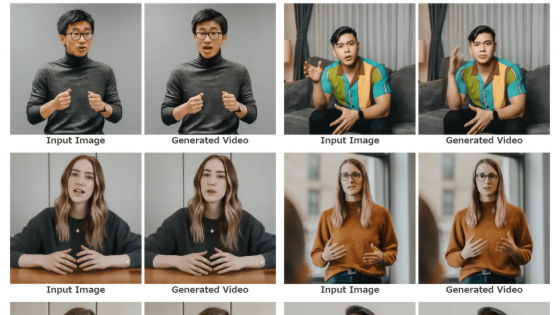

'Deep Video', a video editing technology based on deep learning, can transfer one person's facial expressions, head and eye movements, and even blinks onto another person.

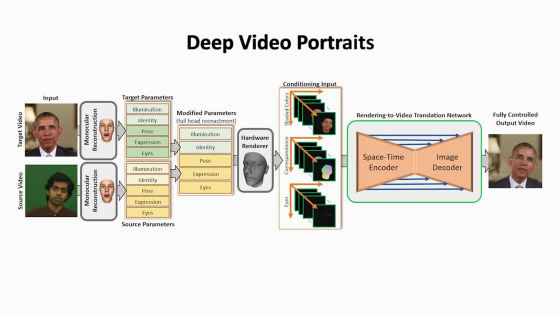

Michael Zollhöfer and his colleagues at Stanford University have announced Deep Video, a new technology that uses deep learning algorithms to edit people in existing videos. With this technology, the facial appearance and gestures of a person speaking in one video can be transplanted wholesale onto a person in another video.

The new Deep Fakes are in and they're spookily good / Boing Boing

Deepfake Videos Are Getting Impossibly Good

https://gizmodo.com/deepfake-videos-are-getting-impossibly-good-1826759848

The following video shows what kind of technology Deep Video is.

Deep Video Portraits - SIGGRAPH 2018 - YouTube

In December 2017, 'fake pornography', in which AI composites celebrities' faces onto performers in adult videos, became a hot topic, and concern grew that the same technology could be abused to create fake news. In fact, a technique for transplanting facial expressions from another video onto footage of former President Barack Obama had already been announced by that point. What is new about the technology developed by Associate Professor Zollhöfer and his colleagues is that it can transplant not only facial expressions but also head position and movement, eye movement, and blinking.

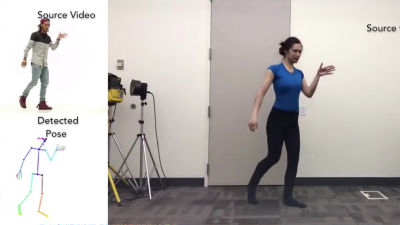

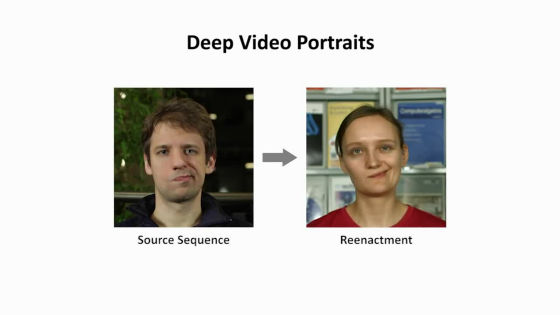

In addition to the video whose facial expressions are to be changed, the technology of Associate Professor Zollhöfer and his colleagues requires a video of a 'source' actor performing the facial expressions and movements to be transplanted. The source actor can be of any age or gender.

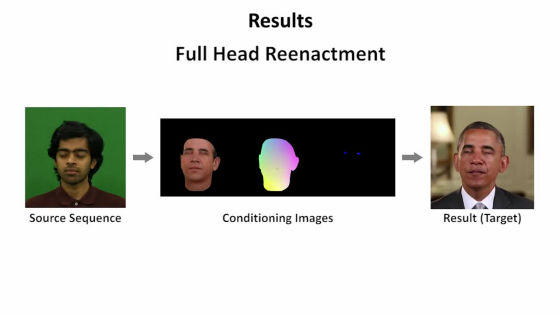

As shown below, not only facial expressions but also face orientation and movement can be transplanted.

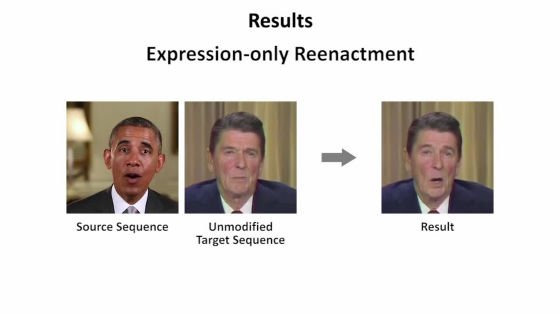

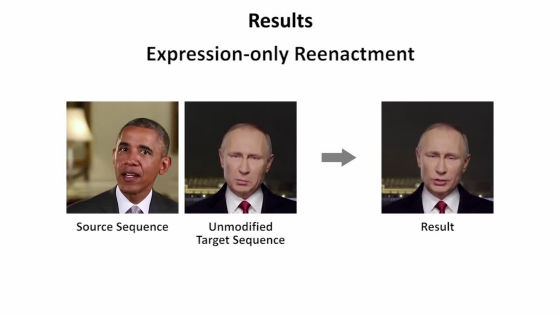

Of course, it is also possible to transplant only the facial expression while keeping the target's original head movement.
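The choice between transplanting everything and transplanting only the facial expression can be pictured as copying selected per-frame values from the source actor to the target. The following is a purely illustrative sketch, assuming each video frame has already been reduced to a handful of numbers. The names `FaceParams` and `transfer` and all of the fields are hypothetical and are not taken from Deep Video itself, and the step that turns these values back into video frames is left out entirely.

```python
from dataclasses import dataclass, replace
from typing import Tuple


@dataclass(frozen=True)
class FaceParams:
    """Hypothetical per-frame description of a face (illustration only)."""
    identity: str                          # who appears in the frame
    expression: Tuple[float, ...]          # e.g. mouth and eyebrow shape values
    head_pose: Tuple[float, float, float]  # yaw, pitch, roll in degrees
    gaze: Tuple[float, float]              # horizontal and vertical eye direction
    blink: float                           # 0.0 = eyes open, 1.0 = eyes closed


def transfer(source: FaceParams, target: FaceParams,
             expression_only: bool = False) -> FaceParams:
    """Return the target's frame with motion values copied from the source.

    The identity always stays the target's; only motion is transplanted.
    """
    if expression_only:
        # Keep the target's own head and eye movement; copy only the expression.
        return replace(target, expression=source.expression)
    # Copy expression, head pose, eye direction, and blink from the source.
    return replace(
        target,
        expression=source.expression,
        head_pose=source.head_pose,
        gaze=source.gaze,
        blink=source.blink,
    )


if __name__ == "__main__":
    actor = FaceParams("source actor", (0.8, 0.1), (10.0, -5.0, 0.0), (0.2, 0.0), 1.0)
    obama = FaceParams("target person", (0.0, 0.0), (0.0, 0.0, 0.0), (0.0, 0.0), 0.0)
    print(transfer(actor, obama))                        # everything but identity
    print(transfer(actor, obama, expression_only=True))  # expression only
```

In this toy model the `expression_only` flag corresponds to transplanting the expression while leaving the target's head movement untouched.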

In other words, if you can prepare the audio, you can make a video in which President Putin appears to say exactly what former President Obama is saying.

If you film the voice actor while the dubbing is being recorded, you can create a dubbed version in which the on-screen mouth movements match the dubbed speech. It is quite possible that this will be used in film production.

Also, in conventional video editing, when you move a person you have to rework the background accordingly, but Deep Video adjusts the background automatically. In the image below, the left shows the head movement of the source actor, the middle shows that movement transplanted onto former President Obama with Deep Video, and the right shows the result of editing with earlier technology. It is easy to see that the background is distorted when the head movement is transplanted with the earlier technology.

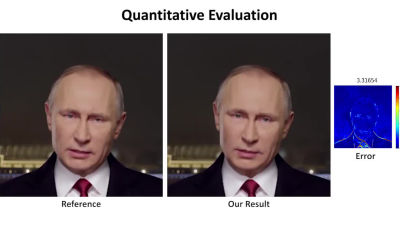

The finished video is realistic enough to be mistaken for the real thing. The left side is footage that was actually shot and the right side was made with Deep Video, but the changes in facial expression and the movements are so similar that you cannot tell which is the fake just by looking.

If a facial image synthesis system like this one is combined with a voice imitation or voice synthesis system, there is a risk that it will be used to slander people or to create so-called 'fake news.' Associate Professor Zollhöfer said, 'At this point, many edited videos can still be recognized as artificial, but it is very difficult to predict when "fake" videos will appear that the human eye cannot distinguish from real ones.' On the other hand, there is also a view that genuine and fake videos can be told apart by using digital watermarks or AI technology.

'In my personal opinion, the most important thing is for the general public to be aware of the capabilities of modern video-making and editing techniques. That will lead us to start thinking more critically about the video content we consume every day,' said Associate Professor Zollhöfer.
