Machine learning technique developed that turns pitch-dark photos taken in dark places into remarkably sharp images

A research group at the University of Illinois at Urbana-Champaign has presented a powerful image processing technique based on machine learning. With it, images taken in low light with ordinary cameras can be converted into sharp images with little noise.

SID

http://web.engr.illinois.edu/~cchen156/SID.html

See in the Dark: a machine learning technique for producing astoundingly sharp photos in very low light / Boing Boing

https://boingboing.net/2018/05/09/enhance-enhance.html

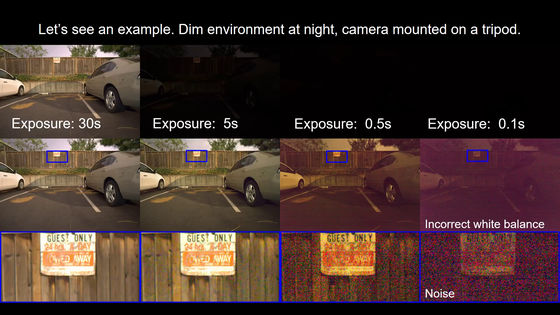

When taking pictures in a dark place, you need to open up the lens aperture, lengthen the time the shutter stays open, and raise the ISO sensitivity. However, a longer shutter speed lets camera shake blur the photo, and raising the sensor's sensitivity can shift the color tone significantly, so shooting in low light can be difficult.
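
As a rough illustration of how these three settings trade off, the sketch below counts the change in stops, where each stop doubles or halves the image brightness. It assumes the standard photographic rule of thumb that light through the lens scales with 1/N² for f-number N and linearly with exposure time, and it treats ISO as amplification that brightens the image without gathering any extra light. The function name and the example settings are hypothetical, chosen only for illustration.

```python
import math


def exposure_change_stops(f_from, f_to, t_from_s, t_to_s, iso_from, iso_to):
    """Return (light_stops, gain_stops) for a change in camera settings.

    light_stops: extra light reaching the sensor from aperture and shutter.
    gain_stops:  extra amplification from ISO (brighter image, no extra light).
    Positive values mean a brighter image. Hypothetical helper for illustration.
    """
    # Light through the lens scales with 1/N^2, so halving N gives +2 stops.
    aperture_stops = 2 * math.log2(f_from / f_to)
    # Light collected scales linearly with the time the shutter is open.
    shutter_stops = math.log2(t_to_s / t_from_s)
    # ISO multiplies the sensor signal; it does not add photons.
    gain_stops = math.log2(iso_to / iso_from)
    return aperture_stops + shutter_stops, gain_stops


if __name__ == "__main__":
    # Hypothetical change: f/4 -> f/2, 1/60 s -> 1/15 s, ISO 100 -> ISO 400
    light, gain = exposure_change_stops(4.0, 2.0, 1 / 60, 1 / 15, 100, 400)
    print(f"light: +{light:.1f} stops, ISO gain: +{gain:.1f} stops")
```

Running it prints `light: +4.0 stops, ISO gain: +2.0 stops`. The wider aperture and the longer shutter each add two stops of real light, while the ISO change only amplifies what was already captured, noise included.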

Photos taken this way can be brightened afterwards with image processing software, but in most cases the result is covered in heavy noise and is unusable.
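
Why brightening in software cannot clean up a dark shot can be seen with a simplified sensor noise model. This model is an assumption of the sketch, not something described in the article: photon (shot) noise grows with the square root of the collected signal, the sensor adds a fixed read noise, and brightening afterwards multiplies signal and noise by the same factor. The read-noise value of 3 electrons and the photon counts are hypothetical.

```python
import math


def snr(photons, read_noise_e, digital_gain=1.0):
    """Signal-to-noise ratio of one pixel under a simple shot + read noise model.

    photons:      mean number of photoelectrons collected (hypothetical values)
    read_noise_e: sensor read noise in electrons (assumed, sensor-dependent)
    digital_gain: brightening applied afterwards in software
    """
    signal = digital_gain * photons
    # Shot noise variance equals the mean (Poisson); read noise adds in quadrature.
    noise = digital_gain * math.sqrt(photons + read_noise_e ** 2)
    return signal / noise


if __name__ == "__main__":
    for photons in (10_000, 4):
        for gain in (1, 100):
            value = snr(photons, 3.0, gain)
            print(f"{photons:>6} photons, gain x{gain:<3}: SNR = {value:.2f}")
```

The gain cancels out of the ratio, so a pixel that collected only a handful of photons stays at an SNR of roughly 1 however much it is brightened; the brighter image simply shows the noise more clearly.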

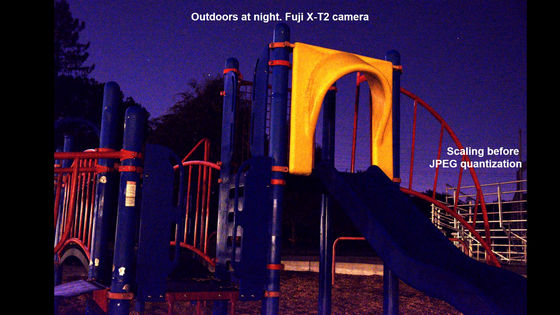

The researchers judged that conventional image processing struggles to turn a photo taken in low light into a clear image, so they developed a new image processing technique based on machine learning. The following image shows playground equipment in a park, photographed in low light.

The next image is the same photo corrected with the research team's new technique. The noise is gone, and the colors come through vividly.

Photos taken at night with an iPhone 6S are also quite noisy, making it hard to tell what is in the dark areas.

After correction with the new technique, the colors of the flowers and leaves and the shadows falling on the road are easy to make out, a clear improvement over the original photo.

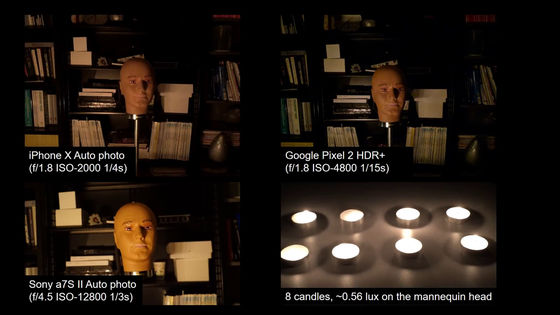

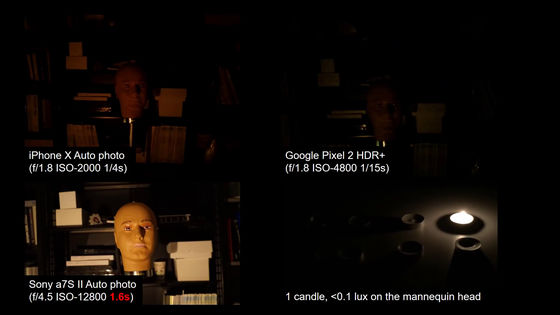

Next, a bookshelf and a mannequin head are photographed with Sony's α7S II, the iPhone X, and the Google Pixel 2 for comparison. Eight candles stand on the desk, and the illuminance is lowered by reducing the number that are lit.

With all 8 candles lit, the illuminance is approximately 0.56 lux, and the shots look like this. The two smartphone pictures are dark, but you can still tell what they show.

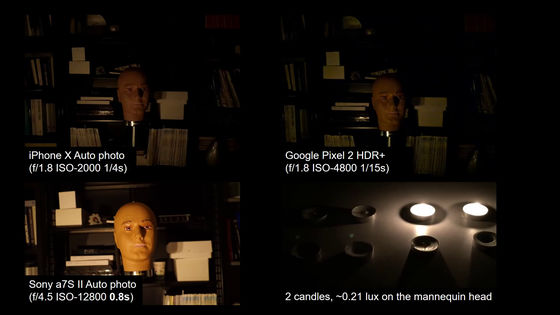

With just two candles, the illuminance drops to about 0.21 lux. The two smartphone pictures are considerably darker, but on the α7S II, with its 35 mm full-frame sensor, the areas hit by light are still bright enough that no correction is needed.

With one candle, the illuminance falls below 0.1 lux. The two smartphone pictures are very dark; the one at upper right, taken with the Google Pixel 2, is so dark that you cannot tell what it shows. The α7S II still produced a clean shot, but only because it automatically chose a shutter speed of 1.6 seconds. At 1.6 seconds, shooting without a tripod would produce a photo with noticeable camera shake.

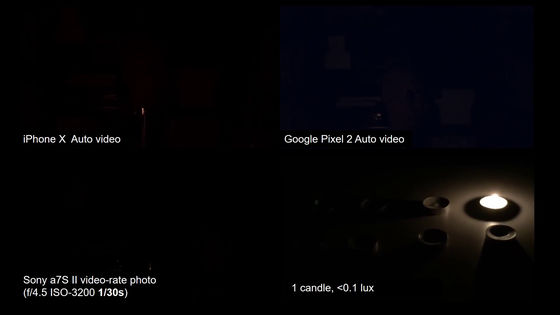

So, with the smartphone cameras set to video mode and the α7S II's shutter speed fixed at 1/30 second, every picture came out dark and almost nothing was visible.
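
To put the 1/30-second limit in perspective: the light a sensor collects scales with shutter time, so, assuming the same aperture, ISO and lighting as in the one-candle shot, fixing the shutter at 1/30 second instead of the 1.6 seconds the α7S II chose on its own cuts the captured light by a factor of 48, a little under six stops:

$$
\frac{1.6\ \text{s}}{1/30\ \text{s}} = 48, \qquad \log_2 48 = 4 + \log_2 3 \approx 5.6\ \text{stops}
$$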

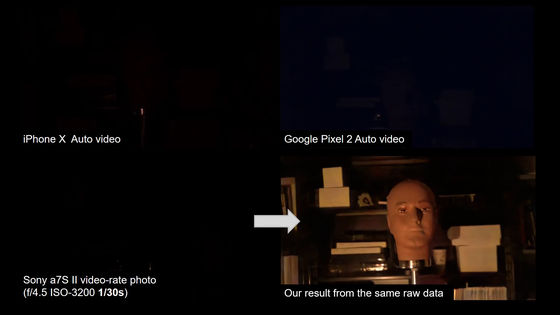

In the comparison image, the picture at lower right is the α7S II shot processed with the new technique. What was nearly black has been corrected so that the mannequin and the bookshelf behind it are clearly visible.

The research team has published the data set and code for the new technique on GitHub.

GitHub - cchen156/Learning-to-See-in-the-Dark

https://github.com/cchen156/Learning-to-See-in-the-Dark
