Hacking technique "Robust Physical Perturbations (RP2)" confuses self-driving cars by placing stickers on road signs

Competition to develop self-driving cars is intense, and cars that can drive freely without a driver are expected in the near future. However, it has been pointed out that cyber attacks exploiting vulnerabilities in the image recognition technology of self-driving cars could throw traffic into confusion, and countermeasures are needed to ensure safety. Researchers at the University of Washington warn that simply placing stickers on a road sign can confuse a self-driving car.

[1707.08945] Robust Physical-World Attacks on Machine Learning Models

https://arxiv.org/abs/1707.08945

Researchers Find a Malicious Way to Meddle with Autonomous Tech | News | Car and Driver | Car and Driver Blog

http://blog.caranddriver.com/researchers-find-a-malicious-way-to-meddle-with-autonomous-cars/

Self-driving cars carry a variety of sensors to perceive their surroundings. Cameras capture nearby vehicles, pedestrians, road signs, and so on, and a pre-trained machine learning system performs image recognition so that road conditions are grasped instantly. However, a research team including Dr. Tadayoshi Kohno of the University of Washington showed that placing stickers on a road sign can cause a self-driving car to misrecognize it, and named this attack method "Robust Physical Perturbations (RP2)".

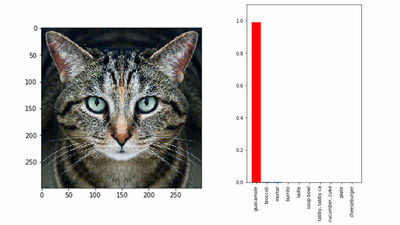

The image recognition system of a self-driving car is broadly divided into an "object detection" function and a "classification" function. The former detects that an object such as a car, a pedestrian, or a traffic signal is present, while the latter compares the detected object against data to judge what it is. Deciding what a road sign means is the job of the classification function. According to Dr. Kohno, an attacker who exploits weaknesses in the deep neural network used for classification could use an algorithm and images to generate a "customized image" that manipulates the classification function and makes the self-driving car misrecognize the sign.
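To make the idea of a "customized image" more concrete, here is a minimal sketch of a perturbation confined to a sticker-shaped region. It is not the researchers' RP2 algorithm: the toy linear classifier, the random `weights`, the `mask` layout, and the function names `margin` and `masked_perturbation` are all assumptions made for illustration. The only point is that changing a small set of pixels in the right direction shifts the classifier's score toward the attacker's target class.

```python
import random

def margin(pixels, weights, source, target):
    """Target logit minus source logit for a toy linear classifier."""
    return sum((wt - ws) * p
               for wt, ws, p in zip(weights[target], weights[source], pixels))

def masked_perturbation(pixels, mask, weights, source, target, eps=0.2):
    """Nudge only the masked ("sticker") pixels toward the target class."""
    adv = []
    for p, m, wt, ws in zip(pixels, mask, weights[target], weights[source]):
        grad = wt - ws                     # d(margin)/d(pixel) for a linear model
        step = eps * ((grad > 0) - (grad < 0)) if m else 0.0
        adv.append(min(1.0, max(0.0, p + step)))   # keep pixel values in [0, 1]
    return adv

rng = random.Random(0)
n = 64                                     # a hypothetical 8x8 image, flattened
pixels = [rng.random() for _ in range(n)]
weights = [[rng.gauss(0.0, 1.0) for _ in range(n)] for _ in range(3)]
# Hypothetical "sticker": a 2x4 patch in rows 2-3, columns 2-5.
mask = [1 if 18 <= i < 22 or 26 <= i < 30 else 0 for i in range(n)]

adv = masked_perturbation(pixels, mask, weights, source=0, target=1)
print("margin before:", margin(pixels, weights, 0, 1))
print("margin after: ", margin(adv, weights, 0, 1))
```

Pixels outside the mask are left untouched, which loosely mirrors how a sticker alters only part of a sign. A real attack on a deep neural network would need gradients of a nonlinear model and would have to survive changes in angle, distance, and lighting.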

The research team demonstrated that the system of a self-driving car can be fooled by making a replica the same size as a real road sign and applying stickers to it. In one example, stickers placed on a "right turn" sign reportedly caused the self-driving system to misrecognize it as a "Speed Limit 45" sign (45 mph, about 72 km/h).

In addition, the team says it was able to make a "stop" sign be misrecognized as a "Speed Limit 45" sign (45 mph, about 72 km/h). These misrecognitions were tested from various angles at a distance of 40 feet (about 12 meters), and both attacks reportedly succeeded in confusing the self-driving system.
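The metric figures quoted above can be checked with a few lines of arithmetic. The helper names `mph_to_kmh` and `feet_to_m` are made up for this sketch; the conversion factors are the standard definitions of the international mile and foot.

```python
MILE_IN_KM = 1.609344   # international mile, exact by definition
FOOT_IN_M = 0.3048      # international foot, exact by definition

def mph_to_kmh(mph):
    """Convert miles per hour to kilometers per hour."""
    return mph * MILE_IN_KM

def feet_to_m(feet):
    """Convert feet to meters."""
    return feet * FOOT_IN_M

print(round(mph_to_kmh(45)))   # 72
print(round(feet_to_m(40)))    # 12
```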

What makes this attack dangerous is that, to the human eye, stickers on a road sign look like nothing more than mischief. Because tampering with a roadside sign is unlikely to be noticed, detection may be delayed, making it difficult to prevent traffic accidents.

Dr. Kohno believes that even without access to the self-driving system itself, a malicious attacker could probe the system's feedback and reverse engineer it. Defending against cyber attacks appears to be an indispensable consideration in the technology that must be completed before self-driving cars can be put into practical use.
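The article does not say how such reverse engineering would be done. As one hedged illustration of learning about a system purely from its feedback, the sketch below treats a toy scoring function as a black box and estimates its sensitivity to each input pixel using only queries (central finite differences). The names `make_black_box` and `estimate_gradient`, the linear scoring model, and all parameters are assumptions for this example, not anything taken from the research.

```python
import random

def make_black_box(seed=0, n=16):
    """Return a scoring function whose weights the attacker cannot see."""
    rng = random.Random(seed)
    hidden = [rng.gauss(0.0, 1.0) for _ in range(n)]

    def score(pixels):
        # Stand-in for "system feedback", e.g. a confidence score.
        return sum(w * p for w, p in zip(hidden, pixels))

    return score, hidden

def estimate_gradient(score, pixels, h=1e-3):
    """Estimate d(score)/d(pixel) from queries alone."""
    grad = []
    for i in range(len(pixels)):
        up = list(pixels)
        down = list(pixels)
        up[i] += h
        down[i] -= h
        grad.append((score(up) - score(down)) / (2 * h))
    return grad

score, hidden = make_black_box()
query_point = [0.5] * 16
estimated = estimate_gradient(score, query_point)
worst_error = max(abs(g - w) for g, w in zip(estimated, hidden))
print("largest estimation error:", worst_error)
```

For this linear toy model the estimate matches the hidden weights up to rounding error. A deployed recognition system is nonlinear and may expose only a label, so probing it would take far more queries and give far coarser information.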
