Waseda University lab develops an astonishing neural network technology that automatically inks rough sketches into line drawings

A Waseda University laboratory has announced a new technology that automatically converts a complicated rough sketch into a line drawing, as if it had been inked by hand.

Edgar Simo-Serra: Automatic conversion of rough sketches into line drawings

http://hi.cs.waseda.ac.jp/~esimo/ja/research/sketch/

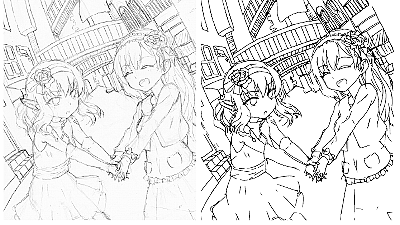

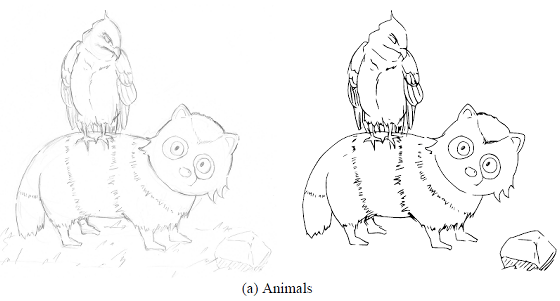

Assistant Professor Edgar Simo-Serra of Waseda University has developed a technique that automatically converts a rough image drawn in pencil into a line drawing in a single pass. For example, in the following image, the left side is the rough sketch and the right side is the line drawing produced by the neural network model.

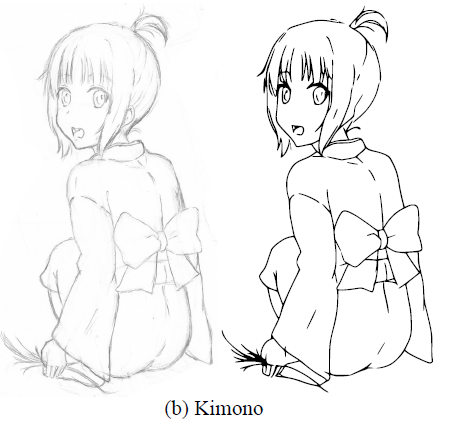

A girl in a kimono, and...

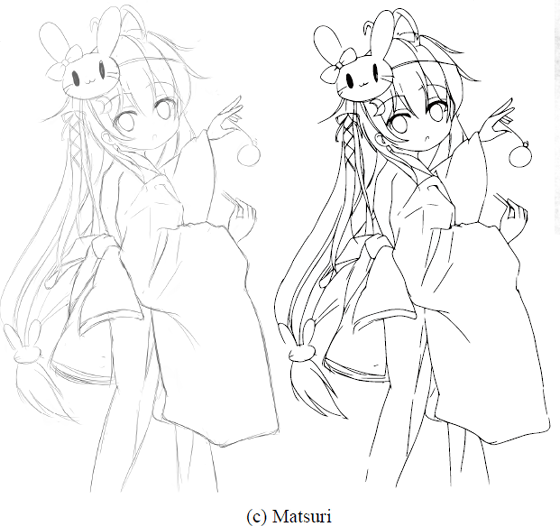

A girl in a festive atmosphere.

Even this side-view sketch, which has heavily overlapping lines, comes out like this.

You can see that even complicated sketches are turned into line drawings with considerable precision.

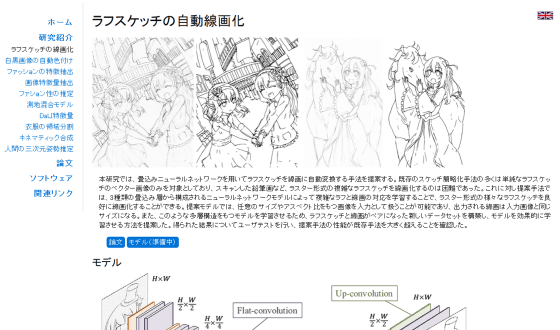

Until now, it has been extremely difficult to turn complex rough sketches, such as scanned pencil drawings, into line drawings. The new method makes this possible by training a neural network model built from three types of convolutional layers (a convolutional neural network) to learn the correspondence between complicated rough sketches and line drawings. With this method, the output line drawing is the same size as the input image, whatever the input image size.

The model consists of three kinds of convolutional layers: "down-convolution", "flat-convolution", and "up-convolution". Down-convolution halves the resolution of the feature map, flat-convolution leaves the resolution unchanged, and up-convolution doubles the resolution by using a stride of 1/2. The image is thus first compressed into small feature maps, processed, and finally restored to the original resolution to output a clean line drawing.
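The way the three layer types change resolution can be traced with a little arithmetic. The following is a minimal sketch, not the laboratory's implementation: the kernel sizes, padding, and the order and number of layers in `stack` are assumptions chosen only for illustration, and the up-convolution is modeled as a transposed convolution with stride 2 (equivalent to a stride of 1/2).

```python
def conv_out(size, kernel, stride, pad):
    """Output size of an ordinary convolution along one dimension."""
    return (size + 2 * pad - kernel) // stride + 1


def upconv_out(size, kernel, stride, pad):
    """Output size of a transposed convolution along one dimension."""
    return (size - 1) * stride - 2 * pad + kernel


# Hypothetical hyperparameters: the article only states the effect on resolution.
LAYERS = {
    "down": lambda n: conv_out(n, kernel=3, stride=2, pad=1),    # halves
    "flat": lambda n: conv_out(n, kernel=3, stride=1, pad=1),    # unchanged
    "up":   lambda n: upconv_out(n, kernel=4, stride=2, pad=1),  # doubles
}


def trace_resolution(size, layers):
    """Return the feature-map size after each layer, starting with the input."""
    sizes = [size]
    for name in layers:
        size = LAYERS[name](size)
        sizes.append(size)
    return sizes


# Hypothetical layer order: compress three times, process, then expand three times.
stack = ["down", "flat", "down", "flat", "down", "flat", "flat",
         "up", "flat", "up", "flat", "up", "flat"]

print(trace_resolution(256, stack))
# [256, 128, 128, 64, 64, 32, 32, 32, 64, 64, 128, 128, 256, 256]
```

Because the stack contains only convolutions, nothing in it fixes the input size. In this sketch a side length that is a multiple of 8 comes back at exactly the same size, while other sizes would need padding first.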

In addition, here is a comparison of the rough sketch, Potrace, the Live Trace function of Adobe Illustrator, and the new technology from the Waseda University laboratory. Potrace, second from the left, leaves too many extra lines, while Adobe's Live Trace, third from the left, simplifies lines or loses them altogether. The new neural network technology on the far right, by contrast, selects which lines to keep and gives the impression of having been inked by hand.

The laboratory has also announced a technology that automatically colorizes monochrome photographs.

Automatic colorization of black-and-white photographs by learning global and local features

http://hi.cs.waseda.ac.jp/~iizuka/projects/colorization/ja/

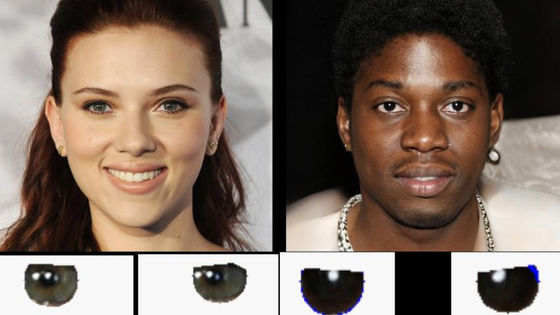

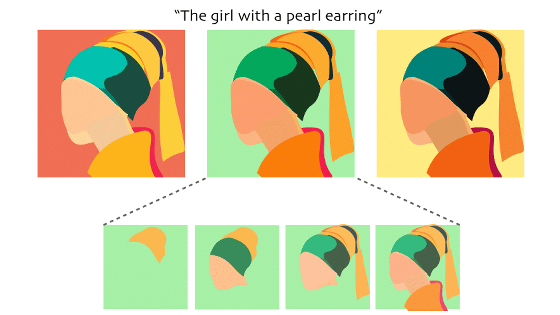

Using a deep network, this technology automatically outputs a color image from a black-and-white input image. Like the automatic line drawing technology, it uses a convolutional network, which makes natural coloring possible by taking the structure of the whole image into account. As for the mechanism, it first uses artificial intelligence to extract features of the whole photo, then filters them element by element to apply appropriate coloring.
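One way to picture how features of the whole photo can inform the coloring of each region is to attach a single global feature vector to the local features at every pixel. The following is a minimal sketch under that assumption: the function name `fuse`, the feature values, and the channel counts are hypothetical, and the learned weights a real network would apply after this step are omitted.

```python
def fuse(local_map, global_vec):
    """Append the global feature vector to the local features at every pixel."""
    return [[cell + global_vec for cell in row] for row in local_map]


# Hypothetical 2x2 local feature map with 2 channels per pixel.
local_map = [
    [[0.1, 0.2], [0.3, 0.4]],
    [[0.5, 0.6], [0.7, 0.8]],
]
# Hypothetical 3-channel vector summarizing the whole image.
global_vec = [1.0, 0.0, 0.5]

fused = fuse(local_map, global_vec)
print(len(fused), len(fused[0]), len(fused[0][0]))  # 2 2 5
```

Each pixel keeps its own local features and gains the same whole-image context, so later layers can choose colors that fit both the local texture and the overall scene.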

You can see that monochrome photos taken in the 1930s are transformed into vivid color pictures as if they were taken recently.

Other examples are as follows.

The source code for the technology that automatically colorizes monochrome photos is available for free on GitHub.

GitHub - satoshiiizuka/siggraph2016_colorization: Code for the paper 'Let there be Color!: Joint End-to-end Learning of Global and Local Image Priors for Automatic Image Colorization with Simultaneous Classification'.

https://github.com/satoshiiizuka/siggraph2016_colorization

The technology for converting black-and-white photographs to color has attracted enough attention to be covered in an article by The Verge.

These old black-and-white photos were colorized by artificial intelligence | The Verge

http://www.theverge.com/2016/4/27/11519272/these-old-black-and-white-photos-were-colorized-by-artificial

in Software, Web Service, Posted by darkhorse_log