'White-box-Cartoonization', which decomposes real photos into expression elements unique to animation and reconstructs them like animation

In recent years, a number of apps have appeared that process photos and videos of real landscapes into cartoon- or anime-like images, and many people post the results on social media. Meanwhile, a paper on a new method called 'White-box-Cartoonization', which converts photos into an anime style by focusing on the expression elements unique to animation, has been published on GitHub.

GitHub - SystemErrorWang/White-box-Cartoonization: Official tensorflow implementation for CVPR2020 paper “Learning to Cartoonize Using White-box Cartoon Representations”

White-box-Cartoonization/06791.pdf at master · SystemErrorWang/White-box-Cartoonization · GitHub

(PDF file) https://github.com/SystemErrorWang/White-box-Cartoonization/blob/master/paper/06791.pdf

Besides creating imaginary worlds from scratch, animation also relies on techniques for drawing backgrounds from real-world photos and footage in a form that is easy to handle in animation. Some apps can already process photos so that they look like manga or anime, but a paper by researchers from ByteDance, which operates the short-video sharing app TikTok, and the University of Tokyo points out that various problems remain.

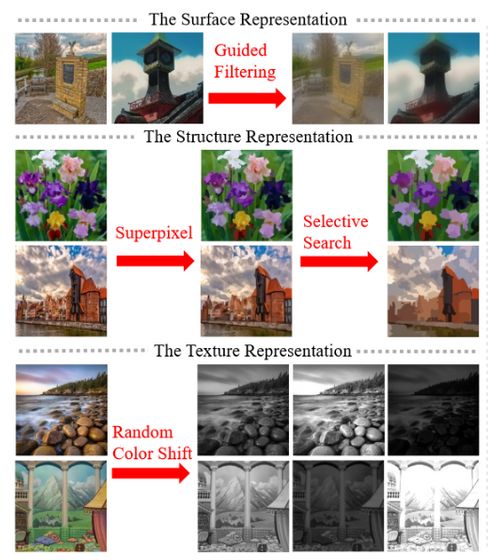

After studying actual animation, the research team pointed out that three elements, 'surface', 'structure' and 'texture', make up the visual expression of animation. The team claims that decomposing an input image into these three animation-specific elements, processing each, and combining them produces anime-style images superior to those of conventional methods.
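As we read the paper, the "combine" step happens in the training objective: each of the three representations contributes its own loss term, and these are summed with weights together with a content term (keeping the output faithful to the input photo) and a total-variation term (suppressing high-frequency noise). The weights $\lambda_1, \dots, \lambda_5$ are hyperparameters; this is a summary of the structure only, so check the paper for the exact definition of each term and the values used.

$$
\mathcal{L}_{\text{total}} = \lambda_1 \mathcal{L}_{\text{surface}} + \lambda_2 \mathcal{L}_{\text{texture}} + \lambda_3 \mathcal{L}_{\text{structure}} + \lambda_4 \mathcal{L}_{\text{content}} + \lambda_5 \mathcal{L}_{\text{tv}}
$$

Weighting the terms separately is what makes the approach "white-box": raising one $\lambda$ pushes the output toward that particular animation element without retraining a monolithic style model from scratch.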

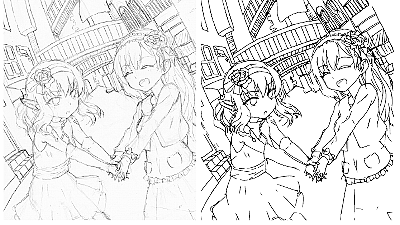

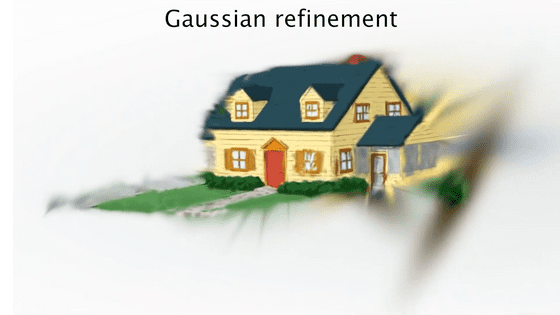

'Surface' is obtained by smoothing the whole image while keeping only its rough surface shading, so it looks like a rough draft of a painting. 'Structure' represents the image as large polygons of uniform color built from recognized object regions, imitating the clear contours and flat colors of cel animation. The research team explains that 'texture' is an element concerned with details and contours that ignores color and brightness, and that it was inspired by the line drawings artists make before applying color.
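To make the three elements more concrete, here is a rough pure-Python sketch that computes each representation for a toy RGB image. It rests on several assumptions and is not the official TensorFlow code. As we read the paper, the surface is extracted with a (differentiable) guided filter, which we approximate with a plain self-guided filter applied per channel. For structure, the paper segments the image into superpixels and recolors each region; here the segmentation is simply taken as a given label map, and each region is filled with its mean color. For texture, we follow the paper's "random color shift" as we understand it, with the blend factor 0.8 and the random weight range [-1, 1] treated as assumptions. All function names are hypothetical.

```python
import random

# Toy RGB image: a list of rows, each pixel an (r, g, b) tuple in [0, 1].
# Illustrative sketch only; not the official White-box-Cartoonization code.


def split(img):
    """Return the three channels of an RGB image as separate 2-D lists."""
    return [[[px[c] for px in row] for row in img] for c in range(3)]


def merge(channels):
    """Stack three 2-D channel lists back into an RGB image."""
    r, g, b = channels
    return [list(zip(rr, gr, br)) for rr, gr, br in zip(r, g, b)]


def box_mean(ch, rad):
    """Mean over a (2*rad+1) x (2*rad+1) window, clipped at the image border."""
    h, w = len(ch), len(ch[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            vals = [ch[j][i]
                    for j in range(max(0, y - rad), min(h, y + rad + 1))
                    for i in range(max(0, x - rad), min(w, x + rad + 1))]
            out[y][x] = sum(vals) / len(vals)
    return out


def self_guided_filter(ch, rad=1, eps=1e-2):
    """Edge-preserving smoothing: a guided filter that uses ch as its own guide."""
    mean = box_mean(ch, rad)
    mean_sq = box_mean([[v * v for v in row] for row in ch], rad)
    # Per-window coefficient a = var / (var + eps): flat windows get a ~ 0
    # (replaced by their mean), high-variance windows (edges) get a ~ 1 (kept).
    a = [[(s - m * m) / (s - m * m + eps) for s, m in zip(sr, mr)]
         for sr, mr in zip(mean_sq, mean)]
    b = [[(1 - av) * m for av, m in zip(ar, mr)] for ar, mr in zip(a, mean)]
    mean_a, mean_b = box_mean(a, rad), box_mean(b, rad)
    return [[ma * v + mb for ma, mb, v in zip(ar, br, row)]
            for ar, br, row in zip(mean_a, mean_b, ch)]


def surface(img, rad=1, eps=1e-2):
    """'Surface': smooth each channel while keeping strong edges."""
    return merge([self_guided_filter(c, rad, eps) for c in split(img)])


def structure(img, labels):
    """'Structure': paint each region of a precomputed label map with its mean color."""
    sums, counts = {}, {}
    for row, lrow in zip(img, labels):
        for px, lab in zip(row, lrow):
            s = sums.setdefault(lab, [0.0, 0.0, 0.0])
            for c in range(3):
                s[c] += px[c]
            counts[lab] = counts.get(lab, 0) + 1
    means = {lab: tuple(v / counts[lab] for v in s) for lab, s in sums.items()}
    return [[means[lab] for lab in lrow] for lrow in labels]


def texture(img, alpha=0.8, rng=random):
    """'Texture': random color shift to a single channel, discarding most color."""
    b1, b2, b3 = (rng.uniform(-1, 1) for _ in range(3))
    out = []
    for row in img:
        new_row = []
        for r, g, b in row:
            y = 0.299 * r + 0.587 * g + 0.114 * b  # standard luma
            new_row.append((1 - alpha) * (b1 * r + b2 * g + b3 * b) + alpha * y)
        out.append(new_row)
    return out
```

On a one-row image stepping from black to white, `surface` keeps the pixel next to the edge close to black, whereas a plain box blur would pull it to about 1/3; that edge-preserving behavior is why a guided filter rather than an ordinary blur suits the "draft painting" look.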

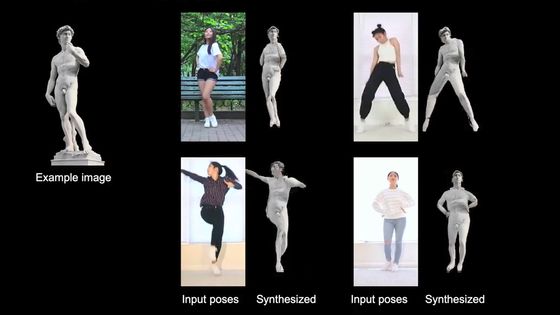

Based on these ideas, the research team built a system that converts real-world photos into anime style using a generative adversarial network (GAN), implemented with TensorFlow, Google's machine learning library.
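In a GAN, a generator produces the cartoonized image while a discriminator tries to tell generated images apart from real anime frames, and the two are trained against each other. The sketch below computes the textbook GAN losses from discriminator output probabilities. As we read the paper, it uses separate discriminators that look at the surface and texture representations rather than at the raw image, and the official code may use a different loss variant, so this is illustrative only; the function names are hypothetical.

```python
import math

# Inputs are discriminator outputs: probabilities in (0, 1) that an image is a real cartoon.


def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy: reward D(real) -> 1 and D(fake) -> 0."""
    real_term = -sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake):
    """Non-saturating generator loss: reward fooling D, i.e. D(fake) -> 1."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)


# Usage: an undecided discriminator versus a confident one.
print(discriminator_loss([0.5], [0.5]))  # 2 * ln 2, about 1.386
print(discriminator_loss([0.9], [0.1]))  # lower: D separates real from fake
print(generator_loss([0.1]))             # high: the generator is not fooling D
```

The two losses pull in opposite directions: the discriminator is penalized when it rates generated images as real, and the generator is penalized when it fails to make them look real, which is what drives the output toward the anime frames in the training data.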

The dataset the research team used for training reportedly includes landscape scenes from animated films by Hayao Miyazaki, Mamoru Hosoda and Makoto Shinkai, as well as face images of characters from Kyoto Animation and P.A.WORKS productions.

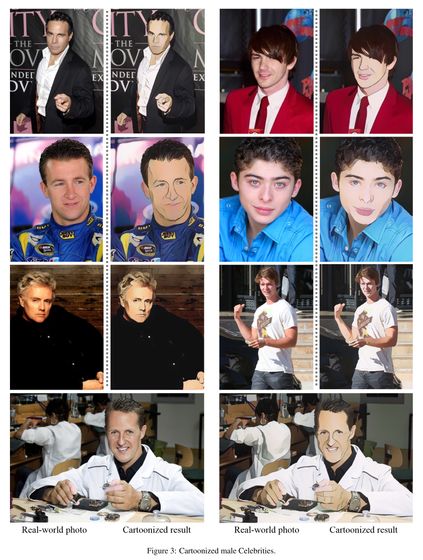

Here is the system built by the research team converting a photo of a man's face into anime style. The image on the left is the real photo, and the image on the right is the converted result.

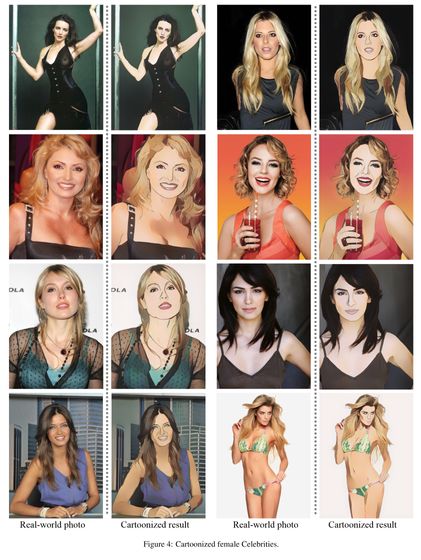

And here is the result for a woman.

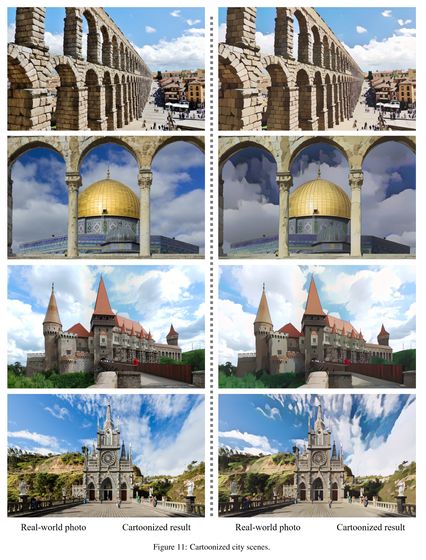

Various buildings and...

...street scenes were also converted convincingly into anime style.

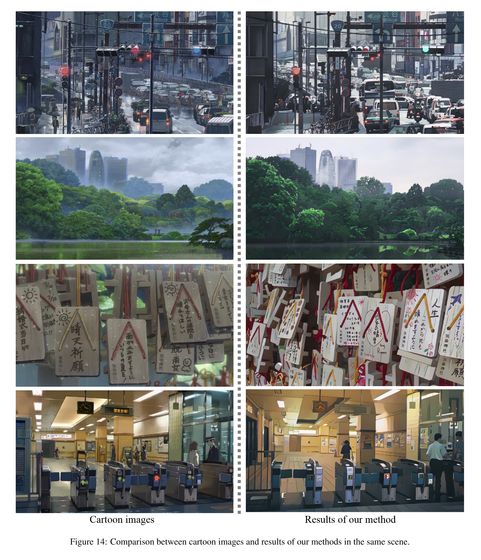

In addition, the research team has published a comparison between landscapes actually drawn in anime and real photos of the same locations converted with 'White-box-Cartoonization'. The image on the left is the frame from the anime, and the image on the right is the real photo converted into anime style.

in Software, Posted by log1h_ik