Amazon rebuts cloud "urban legends": what is "the truth of AWS"?

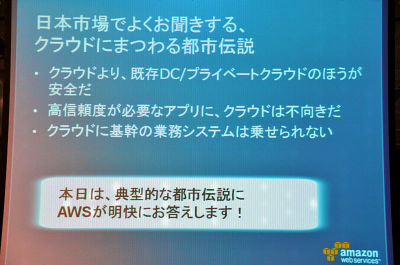

According to Amazon, claims such as "existing data centers and private LANs are more secure than the cloud," "the cloud cannot be used where high reliability and fault tolerance are required," and "core business systems cannot be put on the cloud" are "urban legends." That is, they are incorrect.

This was stated in the lecture "How to utilize the Amazon cloud for successful next-generation disaster recovery," given at "Cloud Computing World Tokyo 2011" & "Next Generation Data Center 2011," held at the Tokyo International Forum. Although the lecture promotes AWS services such as Amazon EC2, its content is very helpful.

The following is a reproduction of the lecture.

A lot of people

Slice

As expected of an Amazon lecture, attendance was again unusually high.

The speaker was Mr. Ken Tamakawa, Evangelist / Technology Promotion Manager of Amazon Data Service Japan Ltd., and the lecture was entitled "How to utilize the Amazon cloud for successful next-generation disaster recovery."

Hello everyone

Thank you very much for gathering in such numbers today, with a typhoon approaching and rain possible.

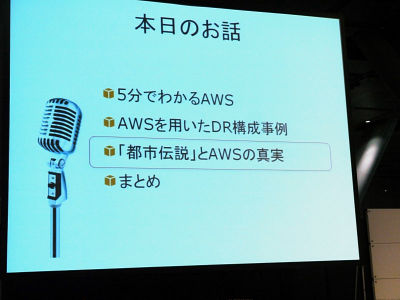

Today's talk is titled "How to utilize the Amazon cloud for successful next-generation disaster recovery." Disaster recovery has been attracting attention as part of IT service planning since the earthquake, and I would like to talk about how to realize it using the cloud.

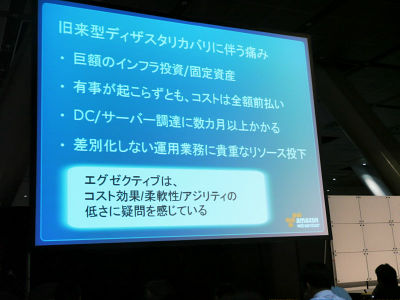

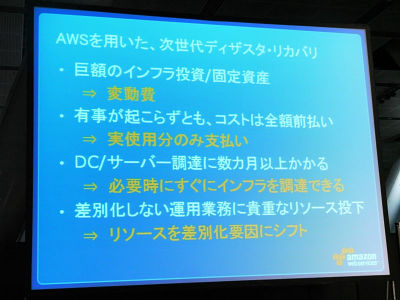

Disaster recovery has been practiced for some time, but it has meant investing in huge infrastructure and holding fixed assets. You have to invest in advance without knowing whether an earthquake will ever happen. Procuring data centers and servers takes months, sometimes half a year, and in long cases one or two years, and all of this comes on top of the core business. From a management point of view, I am often told that current disaster recovery solutions are not very flexible and that their cost effectiveness is doubtful.

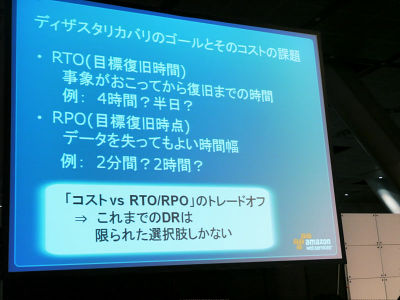

The first thing to check when planning disaster recovery is to decide on the "goals." The goals are the "recovery time objective," meaning how long after an event occurs you need to be back in operation, and the window of data, measured in time, that you can afford to lose. For example, can you tolerate losing five minutes of data? Or would two hours be acceptable? The optimal cost depends on the goal: if your demands are very strict, high cost is unavoidable, and if you can tolerate a little more, you want to achieve it at lower cost.

However, solutions that meet such requirements are very limited. Small and medium-sized enterprises tend to say "if it costs that much, we would rather not do it at all," while large enterprises invest huge sums to realize disaster recovery. I think that is the reality.

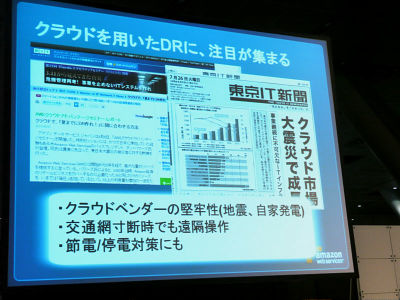

In these circumstances, the cloud is now receiving great attention. Beyond the flexibility of the cloud, there is the robustness of data centers in which cloud vendors have invested intensively, and the ability to operate systems remotely over the network even if transportation is cut off by some event. With expectations that the cloud is well suited to disaster recovery, we have received many inquiries.

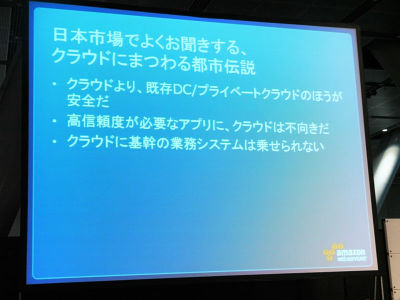

However, various skeptical opinions also exist in the Japanese market. We call them "urban legends." For example: "Existing data centers and private LANs are safer than the cloud," "The cloud cannot be used where high reliability and fault tolerance are required," and "Core business systems cannot be moved to the cloud." We believe these are urban legends.

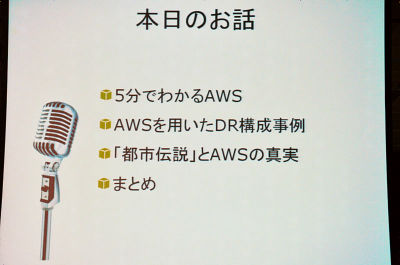

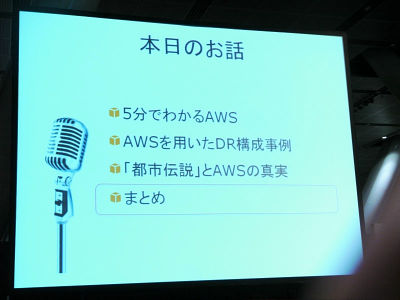

First, for those who are not familiar with Amazon Web Services, I will spend about five minutes introducing it, and then explain how to configure a disaster recovery system using the Amazon cloud. Finally, I would like to talk about the "urban legends" and "the truth of AWS."

So, quickly: how many of you have bought books on Amazon? (Many people in the venue raise their hands.)

Thank you very much. May I assume that those who did not raise their hands are "Rakuten fans"? (laughs)

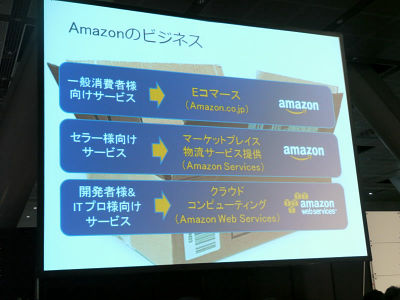

Amazon started its retail business in 1995 and now runs three businesses. The second is a business that provides sellers with a place to do e-commerce; this is also an Amazon service. The third grew out of the data centers Amazon has operated on its own for retail: using the know-how accumulated there, we let customers use computing resources over the Internet on a fully pay-as-you-go basis, just as you use electricity, gas, and water.

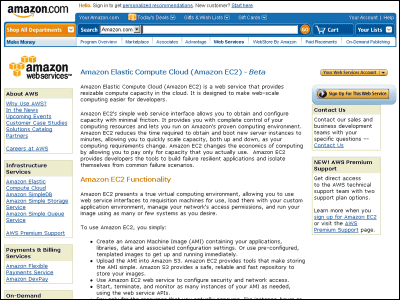

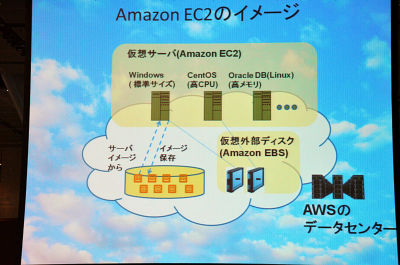

We have been doing this as a business since 2006 under the name Amazon Web Services, abbreviated AWS. For those who are not familiar with it, I would like to talk about the best-known service, Amazon Elastic Compute Cloud, abbreviated Amazon EC2.

First, how to use it: just as when you buy a book on Amazon, you create an account on the web, click, and the server is ready to use right away. Specifically, Amazon's data centers hold many copies of servers, which we call machine images. You choose one, then choose the server specifications, and within a few minutes the server is up and running, whether Windows or Linux. As for cost, no initial investment is needed; you are charged according to the time used.

One server, ten, or 100; Windows, Linux, or an Oracle database: all of these can be used immediately. Besides servers, there is also a cloud service that works like an external hard disk. It is available in gigabyte units from 1 GB to 1 TB, and data written to it does not disappear even when the server is stopped.

Another interesting point: after starting a server, you will install various software yourselves, and you can then create an image of that server and copy it to launch other servers. You can also use the machine image to restart on a server of a different specification.

Here, for those who are not familiar with it, I would like to actually procure a server on the spot.

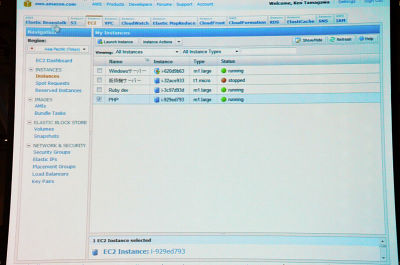

This is Amazon's web-based management tool, called the Management Console, from which various services such as EC2 can be selected.

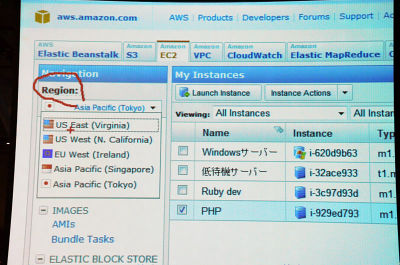

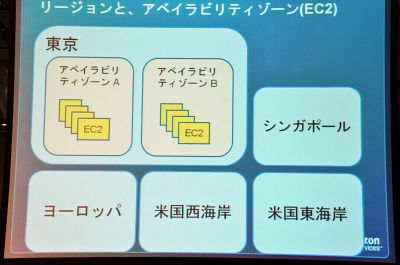

Within EC2 you can choose a region: "US West," "US East," "Europe," "Singapore," or "Tokyo." This time, I will choose "Tokyo."

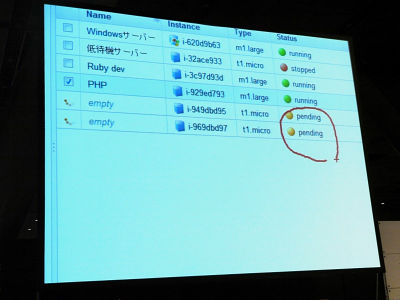

If you do, the servers that I currently have are displayed and you can see that they have 4 units at the moment.

Some things are running, others are stopped.

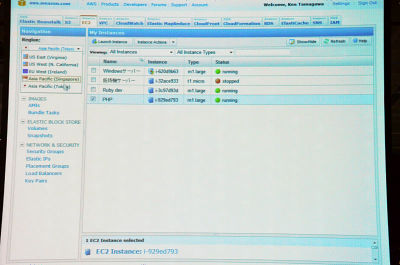

Now we will procure the machine from now. Press the button to select the machine image.

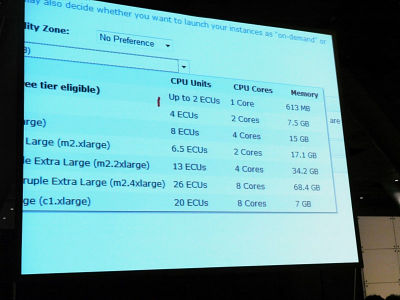

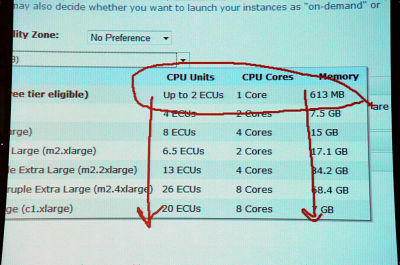

For both Windows and Linux, licenses are included in the hourly charge. The next choice is the machine specification. You can choose among options such as 2 GB, 4 GB, 8 GB, and 20 GB for the hard disk, and memory can be chosen in the same way.

By the way, the hourly charge for a single server of the lowest specification is 1.6 yen. For that amount you can use it freely. Next, select the number of servers. This time I will choose two for the demonstration.

Then, as detailed settings, configure the secret key and the firewall, and finally press the "Launch" button. Billing starts at that point, and the servers become usable.

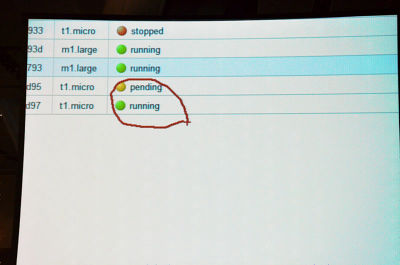

Checking now, the two servers are in Pending status and are about to start running.

(The next moment) They are already Running. This is how quickly you can start using servers.

Time-consuming tasks of the past, such as ordering servers, having them delivered, installing them, securing power, and laying Internet lines, are completed in this short time. Moreover, there is no initial investment: you are charged only for the time used, billed afterward each month for what you used.

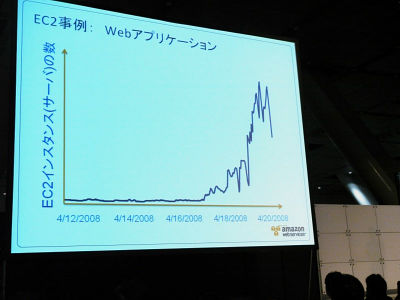

By the way, here is what a company making use of this technology is doing. It is an American company offering a web application service to its customers. After launching on Facebook it became extremely popular, and it procured and used 5,000 servers.

Moreover, it scaled up to 5,000 servers in the three days from April 18 to April 20. This was possible because it is the cloud: the infrastructure grew as the customers grew. Any popular service eventually passes its peak and its popularity falls, and at that point you can stop using the servers immediately.

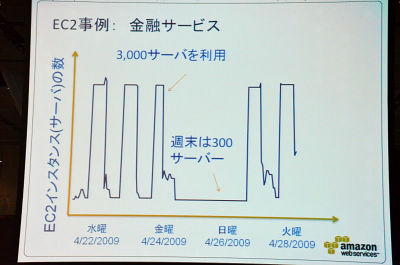

Another example is a Wall Street financial firm. It analyzes the stock market, but only at night, to produce analyst reports. It uses 3,000 servers at night and scales down to 300 during the day, which lowers the usage fee. This kind of thing becomes possible.
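The saving from this day/night pattern can be estimated with simple arithmetic. The sketch below is illustrative only: the 12-hour split between night and day and the flat 1.6 yen hourly rate (the lowest-spec price quoted in the demo) are assumptions, not figures from the financial firm.

```python
from fractions import Fraction

# Assumption: flat hourly price per server, taken from the lowest-spec
# figure quoted in the demo (1.6 yen). Real instance prices differ.
YEN_PER_SERVER_HOUR = Fraction(16, 10)


def server_hours(schedule):
    """Sum server-hours over (servers, hours) pairs for one day."""
    return sum(servers * hours for servers, hours in schedule)


def daily_cost_yen(schedule, rate=YEN_PER_SERVER_HOUR):
    """Pay-as-you-go cost for one day of the given schedule."""
    return server_hours(schedule) * rate


# Assumption: 12 hours of night-time analysis, 12 hours of daytime idle.
elastic = [(3000, 12), (300, 12)]   # scale down during the day
fixed = [(3000, 24)]                # keep peak capacity all day

saving = 1 - daily_cost_yen(elastic) / daily_cost_yen(fixed)
print(f"elastic: {float(daily_cost_yen(elastic)):.0f} yen/day")
print(f"fixed:   {float(daily_cost_yen(fixed)):.0f} yen/day")
print(f"saving:  {float(saving):.0%}")
```

Under these assumptions the bill scales with server-hours, so the shape of the schedule, not the peak size, determines the cost.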

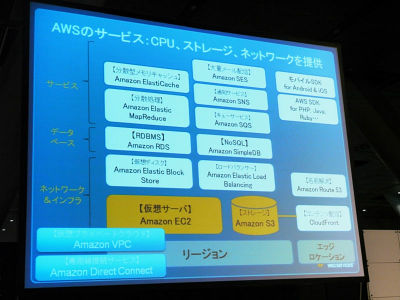

This service has been offered since 2006 and has incorporated the needs of various customers. We have been enhancing it with storage services, content delivery services, and others, and we now offer more than 10 kinds of services.

Also, if you look at the logo, Amazon Web Services has a different logo from Amazon, one that looks like building blocks. It expresses the idea that when you build a business or an application, you can pick just the services you like and assemble them quickly and cheaply.

It is used by hundreds of thousands of customers in more than 190 countries. In the US government it is used by more than 20 ministries and agencies, and it is also used by manufacturers and financial institutions, including a top bank in Spain.

Of course, it is widely used by small businesses and startups as well. In Japan, we opened the Tokyo data center on March 3, 2011.

For customers in the Japanese market, this offers fast network access from within Japan and the ability to keep data safely inside Japan, and we also provide 24/365 support in Japanese.

AWS was already used by many Japanese customers before the Tokyo data center opened, and at this point it is used by thousands of customers. Here is a list of examples. As representative cases, Mitsui & Co. uses it for SAP development and verification, Olympus for a photo sharing service, Recruit for its housing website SUUMO, and Toshiba for firmware distribution for home appliances. You can see that a variety of companies are using it. At the bottom there is also the tourism website of Suita City, Osaka Prefecture, so it is used for government web servers as well.

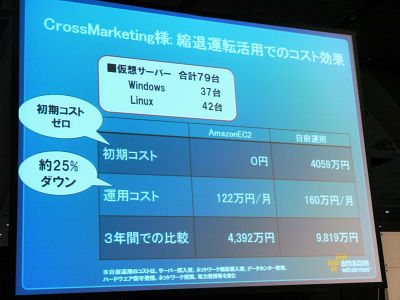

Here is a case with concrete prices. In the Cross Marketing example, the company looked at procuring 80 Windows and Linux servers. Preparing them yourself requires an initial investment first, but even looking at operating costs beyond that, EC2 turned out to be cheaper. These figures come from a trial calculation that includes electricity, network fees, personnel expenses, and so on.

To summarize, the merits are that it is very effective for cash flow, you can start a business quickly, you can scale up quickly when a peak comes, and you can withdraw quickly when you stop.

From here I would like to talk about disaster recovery, which is the main subject.

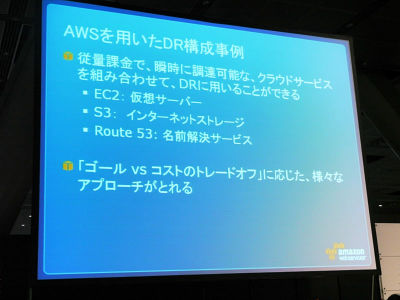

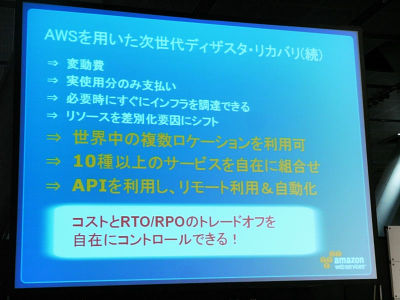

As for disaster recovery, combining services that can be procured instantly on a pay-as-you-go basis gives a very flexible disaster recovery configuration. Until now the only choice was whether or not to procure another data center for disaster recovery, but now various combinations are available, so you can choose flexibly according to your goals and costs.

Before introducing these, I will briefly explain the services that are important for disaster recovery.

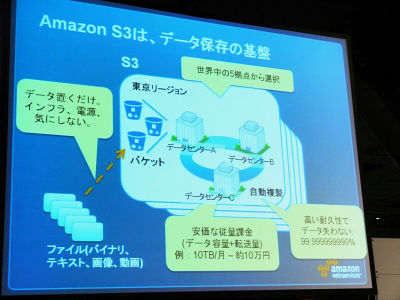

First, "S3" stands for Simple Storage Service, a form of Internet storage. To explain how it works: as with EC2, you select a region such as Tokyo. You then create a container called a bucket in it, and you can store any file, whether an image or an HTML file. You simply upload over the network, and of course you can encrypt data before saving it.

Because you simply upload over the network, you can do so without paying any attention to infrastructure. Data saved here is automatically copied across data centers distributed within the region (within Tokyo, if you choose Tokyo), giving a durability of 99.999999999%, or "eleven nines," which is extremely durable. It is durable enough that if you store 10,000 files, you would expect to lose one only once in 10 million years. S3 actually holds over 400 billion files so far, and it has a track record of never having lost one.
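The "10,000 files for 10 million years" figure follows directly from the durability percentage. A minimal sketch of the arithmetic, assuming the 99.999999999% figure is read as the share of objects expected to survive one year:

```python
from fractions import Fraction

# Eleven nines: 99.999999999% of objects are expected to survive a year.
ANNUAL_DURABILITY = 1 - Fraction(1, 10**11)


def expected_losses_per_year(num_objects, durability=ANNUAL_DURABILITY):
    """Expected number of objects lost per year."""
    return num_objects * (1 - durability)


def years_per_expected_loss(num_objects, durability=ANNUAL_DURABILITY):
    """Average number of years until one object is expected to be lost."""
    return 1 / expected_losses_per_year(num_objects, durability)


print(years_per_expected_loss(10_000))  # 10,000 files -> 10000000 years
```

Exact fractions are used here because 1 - 0.99999999999 loses precision in floating point.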

Another feature is very cheap metered billing. If you purchase 10 terabytes of storage for backup, you pay for all the storage you purchased, but with S3 you are charged only for the capacity actually stored and the duration. For 10 terabytes, that is about 100,000 yen for one month, or about 50,000 yen for half a month. Since you are unlikely to fill 10 terabytes with backups from day one after purchasing storage, I think this is a very effective approach.
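As a rough illustration of metered billing, the sketch below uses the rate implied by the talk (10 TB for one month at about 100,000 yen, so about 10,000 yen per terabyte-month). The growth pattern of the backup is a made-up example, and actual S3 pricing may differ.

```python
from fractions import Fraction

# Rate implied by the talk: 10 TB for one month is about 100,000 yen,
# so roughly 10,000 yen per TB-month. Actual S3 pricing may differ.
YEN_PER_TB_MONTH = 10_000


def metered_cost_yen(usage):
    """Cost of (terabytes_stored, months) segments under metered billing."""
    return sum(tb * months * YEN_PER_TB_MONTH for tb, months in usage)


# The two figures quoted in the talk.
print(metered_cost_yen([(10, 1)]))                # one month
print(metered_cost_yen([(10, Fraction(1, 2))]))   # half a month

# Hypothetical backup that grows by 2 TB each month for five months,
# compared with being charged for the full 10 TB from day one.
growing = [(2, 1), (4, 1), (6, 1), (8, 1), (10, 1)]
print(metered_cost_yen(growing), "vs", metered_cost_yen([(10, 5)]))
```

The gap between the last two numbers is the capacity that would have been paid for but not yet used.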

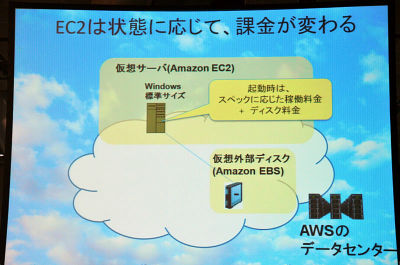

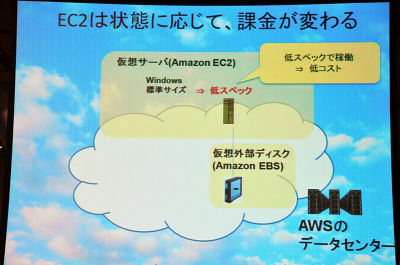

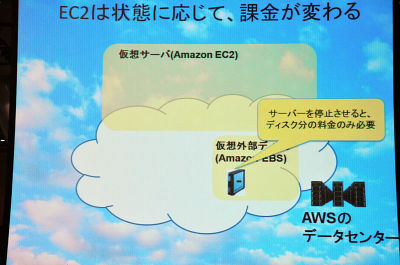

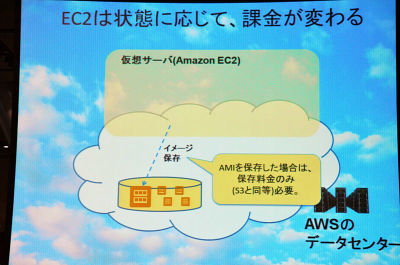

Next is EC2. EC2 is the virtual server service. While a server is running you are charged for the running time: 1.6 yen per hour, or around 10 yen per hour for a high-specification server. Storage capacity is charged at roughly the same rate as S3, about 10 yen per gigabyte. That is the pricing while running, but you can quickly switch to a lower-specification server, for example from a 10 yen server to a 1.6 yen server. Furthermore, when a server is stopped only the disk is charged, and a stopped server can be started again right away.

So you can stop a server temporarily and pay only the disk usage fee. Alternatively, you can shut the server down completely, keep a backup image, and launch from that image later. You can choose among these options.
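To make the three charging states concrete, here is a small sketch using the figures quoted in the talk (1.6 yen and 10 yen per hour, about 10 yen per gigabyte for disk). The 30-day month, the monthly reading of the disk rate, and the 100 GB disk size are assumptions added for illustration.

```python
from decimal import Decimal

# Figures quoted in the talk; treat them as rough 2011 numbers.
LOW_SPEC_YEN_PER_HOUR = Decimal("1.6")
HIGH_SPEC_YEN_PER_HOUR = Decimal("10")
DISK_YEN_PER_GB_MONTH = Decimal("10")  # assumption: rate is per month

HOURS_PER_MONTH = 720  # assumption: a 30-day month


def monthly_cost_yen(state, disk_gb):
    """Monthly cost of one standby server in a given state.

    state: "high" (running, high spec), "low" (running, low spec),
           or "stopped" (only the disk is charged).
    """
    hourly = {
        "high": HIGH_SPEC_YEN_PER_HOUR,
        "low": LOW_SPEC_YEN_PER_HOUR,
        "stopped": Decimal("0"),
    }[state]
    return hourly * HOURS_PER_MONTH + DISK_YEN_PER_GB_MONTH * disk_gb


for state in ("high", "low", "stopped"):
    # Assumption: a 100 GB disk, chosen only for illustration.
    print(state, monthly_cost_yen(state, disk_gb=100))
```

The difference between the "high" and "stopped" rows is what a standby site saves by staying stopped until it is needed.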

Another service important for disaster recovery is DNS. If name resolution stops, you cannot fail over even if you try to switch. Route 53 is a name resolution service distributed across the 19 cache locations Amazon has around the world. It offers 100% availability and costs 1 dollar per domain, which is about 80 yen.

By combining EC2, S3, and Route 53, you can build various disaster recovery systems.

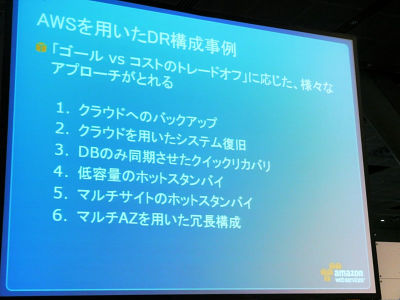

Today I have organized the possible scenarios according to cost and goals.

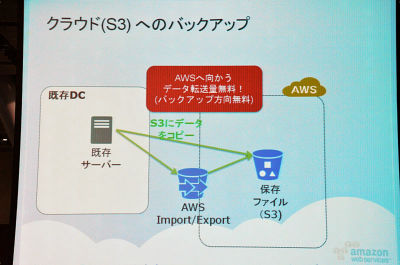

In the first scenario, the purpose is only to back up data.

The data is in an existing data center, and you just save it to Amazon S3. If uploading a large amount of data over the Internet is difficult, you can ship hard disks using the Import/Export service, and the data is loaded by connecting the disks directly on the Amazon side.

Several months ago we announced that data transfer into AWS is free, so for backup alone the transfer costs nothing. Storing about 1 terabyte for one month costs about 10,000 yen, which is quite a good deal.

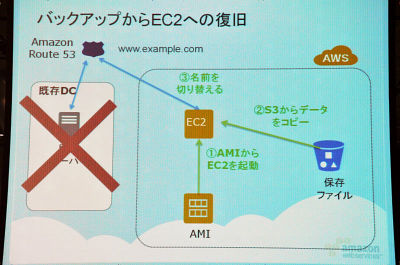

Next, backing up data does not help if the data center itself cannot recover. So I will explain how to use EC2 for the case where the data cannot simply be restored from backup to the original site. First, set up name resolution with Route 53 in advance so that it can be switched immediately. Next, store the data in S3 and prepare machine images for EC2 beforehand. If something happens, you launch EC2 servers from the images, transfer the data from S3 to EC2, and switch name resolution, so that even if your servers go down you can resume on EC2. Moreover, since the EC2 servers are normally stopped, they cost almost nothing and only the S3 storage is charged. EC2 costs are incurred only when you actually use the servers.
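The order of the recovery steps matters: servers first, then data, then name resolution. The sketch below walks through that order using a plain dictionary as a stand-in for real infrastructure. It calls no AWS API, and the host name, image names, and backup label are all hypothetical.

```python
def fail_over(site):
    """Run the recovery steps in order and return a log of what was done.

    `site` is a plain dict standing in for real infrastructure:
    no AWS API is called here.
    """
    log = []

    # 1. Launch servers from the machine images prepared in advance.
    site["ec2_running"] = list(site["machine_images"])
    log.append("launched " + ", ".join(site["ec2_running"]))

    # 2. Copy the latest backup from storage onto the new servers.
    site["ec2_data"] = site["s3_backup"]
    log.append("restored backup " + site["s3_backup"])

    # 3. Point the service name at the cloud side.
    site["dns"][site["hostname"]] = "aws"
    log.append("switched " + site["hostname"] + " to aws")

    return log


site = {
    "hostname": "www.example.com",            # hypothetical name
    "dns": {"www.example.com": "datacenter"},
    "machine_images": ["web", "app", "db"],   # prepared beforehand
    "s3_backup": "backup-2011-09-20",         # hypothetical backup label
    "ec2_running": [],                        # normally nothing is running
    "ec2_data": None,
}

for line in fail_over(site):
    print(line)
```

Switching name resolution last means users are only redirected once the servers are up and the data has been restored.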

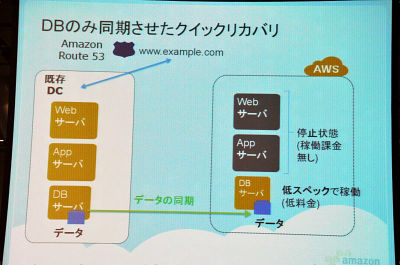

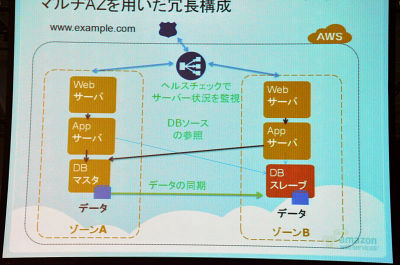

For customers who want to recover a little faster, the database is often the concern: you want the database always backed up in its latest state. Suppose you have an application server, a web server, and a database server in an existing data center. You then set up a database server in AWS and keep the application server and web server there stopped. The AWS database server has a fairly low specification and exists only for synchronization.

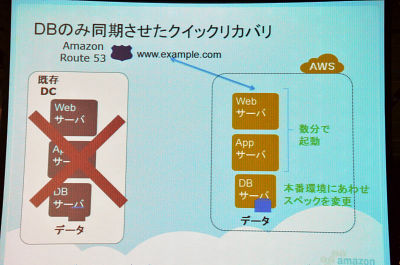

This keeps costs low. If something happens, you start the application server and web server on AWS and switch the database server to a high specification matching the production environment. Then, by switching name resolution, failover is probably possible in a few minutes.

Furthermore, there are cases where you want hot standby in order to recover even faster. In this case, keep the application server and web server on AWS already running, at low specifications. It is also a good idea to keep them in regular use through load balancing in front. If something happens in this state, you can respond immediately by switching the application and web servers on AWS to high-specification servers.

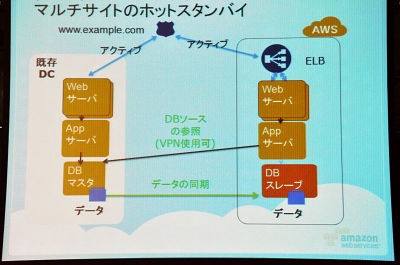

And if you want even faster recovery than that, prepare servers on AWS with specifications equivalent to those in the existing data center and operate both sides constantly at a "50%:50%" split through load balancing. Then anything can be dealt with immediately.

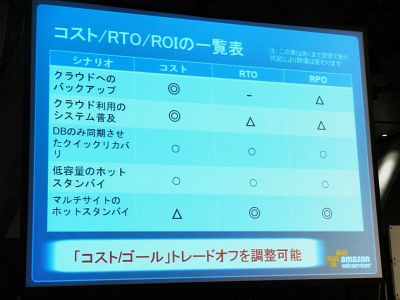

Comparing the scenarios this way, you can see how each is characterized by cost, recovery time, and data recovery time.

In the first scenarios, the cost is low but recovery takes time. Conversely, the later scenarios cost more but shorten the recovery time. What matters is where the optimal trade-off between cost and goals lies.
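One way to frame this trade-off is to fix the recovery time you can tolerate and then pick the cheapest scenario that meets it. The sketch below does that; the scenario names follow the talk, but every cost and recovery-time number is invented purely for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Scenario:
    name: str
    monthly_cost_yen: int   # hypothetical figure
    recovery_minutes: int   # hypothetical figure


# Ordered as in the talk; all numbers are made up for illustration.
SCENARIOS = [
    Scenario("backup only", 10_000, 24 * 60),
    Scenario("backup + stopped servers", 15_000, 4 * 60),
    Scenario("database kept in sync", 40_000, 30),
    Scenario("hot standby, low spec", 80_000, 5),
    Scenario("50:50 load balanced", 300_000, 0),
]


def cheapest_meeting_rto(scenarios, rto_minutes):
    """Return the lowest-cost scenario whose recovery time meets the RTO."""
    candidates = [s for s in scenarios if s.recovery_minutes <= rto_minutes]
    if not candidates:
        return None
    return min(candidates, key=lambda s: s.monthly_cost_yen)


print(cheapest_meeting_rto(SCENARIOS, rto_minutes=60).name)
```

Tightening `rto_minutes` moves the answer down the list toward the more expensive configurations.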

Another thought that arises here is whether you still need the existing data center at all. More and more people are saying that if you can actually run production split between the data center and the cloud, you might as well move entirely to the cloud when the data center contract expires.

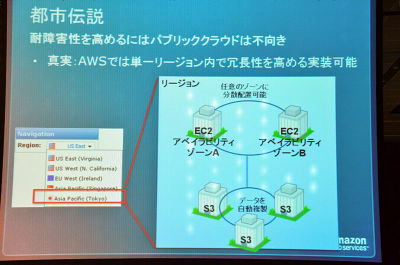

In that case, however, fault tolerance becomes the concern. People ask, "What happens if the cloud itself fails?" For such customers, EC2 provides zones within a region. These are real data centers located apart from one another within the region.

In other words, because there are multiple data centers, you can place servers separately across them.

That is, the mechanism for running a system geographically distributed already exists. Within AWS you can already build the system separately in zone A and zone B, distribute traffic with a load balancer, and synchronize only the database.
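The effect of spreading servers across zones can be sketched in a few lines. This is a toy model with hypothetical server names, not an AWS API: it assigns servers to zones in turn and shows what remains if one zone is lost.

```python
from collections import Counter
from itertools import cycle


def place_round_robin(servers, zones):
    """Assign each server to a zone in turn so zones stay balanced."""
    return dict(zip(servers, cycle(zones)))


def surviving_servers(placement, failed_zone):
    """Servers still available if one zone is lost."""
    return [s for s, z in placement.items() if z != failed_zone]


# Hypothetical names: four web servers spread over zone A and zone B.
placement = place_round_robin(["web1", "web2", "web3", "web4"], ["A", "B"])
print(Counter(placement.values()))
print(surviving_servers(placement, failed_zone="A"))
```

With two zones, half the capacity survives a zone failure, which is why the load balancer and database synchronization mentioned above are needed to keep serving traffic.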

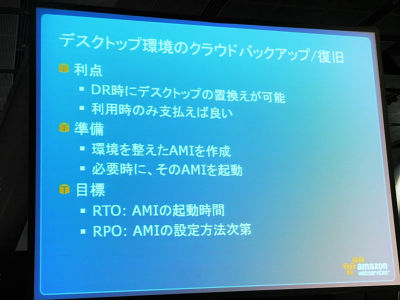

Also, although I have used servers as the example so far, you can do something similar for personal PC environments. Because various operating systems are supported, such as Windows and Linux distributions like Debian and Ubuntu, you can save a desktop environment and, if something happens, continue working on EC2.

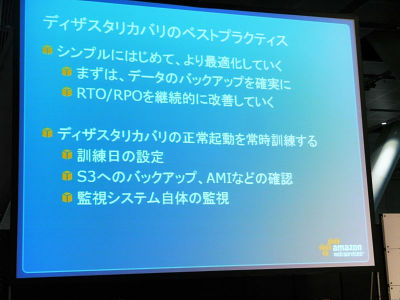

Finally, here are the points I would like you to keep in mind for disaster recovery. First, optimize gradually. Going straight to redundant multi-site operation may be difficult, so I recommend starting with backups and then improving step by step while considering the RTO.

Second, you need to verify your disaster recovery. I think it is important to set something like a drill day, actually fail over, and check with your own eyes that the data is all right. Moreover, with AWS you pay only while servers are launched, so the cost is incurred only on the drill day, which makes this extremely inexpensive.

Finally I would like to talk about "urban legend" and "the truth of AWS".

· Existing data centers and private clouds are safer than the cloud.

· The cloud is not suitable for systems that require high reliability.

· Core business systems cannot be moved to the cloud.

I would like to talk about each one.

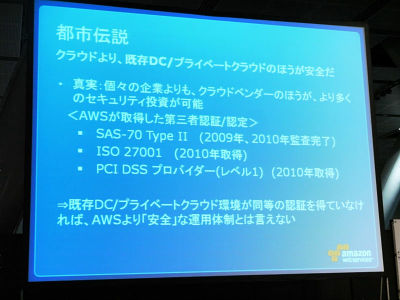

First, AWS treats security as its top priority. As a global vendor, we invest heavily in improving security. So that customers can use AWS with confidence, we obtain as many certifications from third-party organizations as we can: SAS 70 Type II, ISO 27001, and lastly PCI DSS provider certification. The last is a certification that vendors handling credit card information should obtain, and we have obtained it as a cloud vendor.

When someone says "an existing data center is safer," one criterion for evaluation is whether that data center has obtained the same third-party certifications. If it has, we might say "that may be so," but I think few vendors have obtained equivalent certifications. So I think this is one useful criterion.

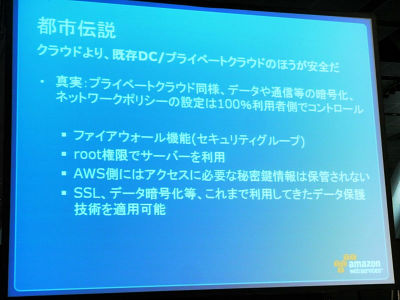

The other point is that this is the same as with a private cloud: AWS puts full effort into keeping the infrastructure layer secure, but the layers above it, such as how the firewall is configured and whether data is encrypted, are left to the customer's discretion.

Having that freedom means you configure and operate things rigorously, in the same way as before. You use the servers with your own firewall settings and root privileges. Conversely, if you lose the secret key for root access, even we can no longer access that system. Seen the other way around, that is what keeps it safe. SSL and encrypted communication for data transfer also work exactly as before. In other words, you can apply the security know-how you have built up so far when using AWS.

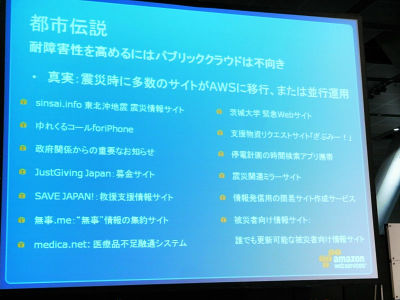

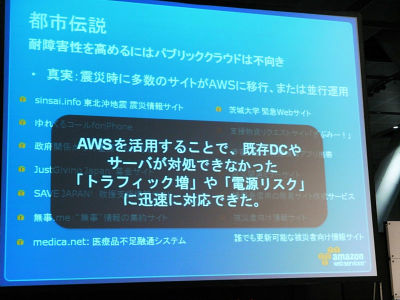

Next is the "urban legend" that "the public cloud is not suitable for improving fault tolerance." The word "cloud" is used very broadly. If you think of a certain mail service or of SaaS offerings, it is true that you cannot control the data or what happens when the service stops. In the case of AWS, however, we provide several degrees of freedom for improving fault tolerance. In fact, at the time of the earthquake many sites got through a harsh situation by moving to AWS or running in parallel with it, and we have a proven track record of responding quickly to sudden traffic surges that existing data centers and servers could not handle.

Looking a little deeper, the data centers that host EC2 are grouped into areas called regions. Inside a region, EC2 has multiple separate data centers. S3 is likewise spread across separate data centers.

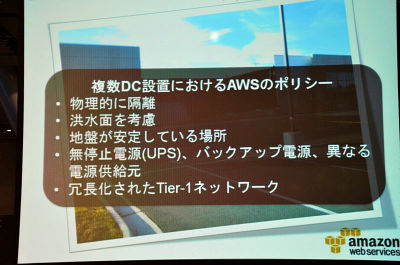

With EC2 you can choose the availability zone freely, so you can build a redundant configuration that makes your system more fault-tolerant. Our policy is to have multiple such data centers that are physically isolated from one another, are not on the same flood plain, and stand on very stable ground. They combine uninterruptible power supplies and backup power, priority fuel contracts, and redundant Tier-1 network connections.

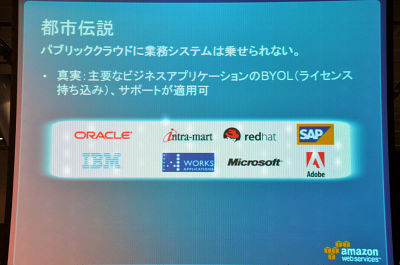

Finally, there is the story that "business systems cannot be put on the public cloud." In fact, you can bring major business applications to AWS with your existing licenses. Amazon, as a global vendor, has made this possible in collaboration with various major vendors.

For example, Oracle applications and Oracle Database can run on EC2, and you can bring your existing licenses. Also, with the relational database cloud service called RDS, you can use Oracle Database on an hourly basis. This is something other vendors cannot do. You can use Oracle Database by the hour for validation only, then bring your existing license when you move to production.

In addition, Microsoft has a program called License Mobility. With it, you can bring databases, SharePoint, Exchange, and other server products used in your existing data center to AWS.

SAP has issued a release guaranteeing operation of its various solutions on EC2. That SAP, the archetype of mission-critical business software, has given the cloud its seal of approval is a very hot topic in the industry.

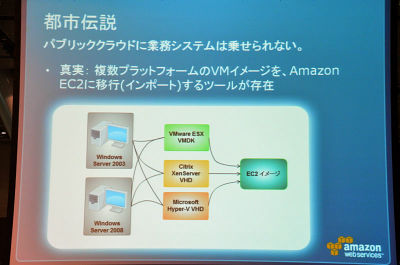

Another point: I think many of you hold server images in formats such as VMware, XenServer, and Hyper-V. To let you make the most of AWS in such cases, we are making it possible to import both Windows Server 2003 and Windows Server 2008 servers in all of these formats into EC2. So if you have such assets, they can be brought over quickly and run on EC2, which saves a great deal of migration effort. Of course we are also working on export. Currently we are enabling export of Windows Server 2008 images on EC2 in VMware format, and we will continue working to increase the supported combinations of import and export.

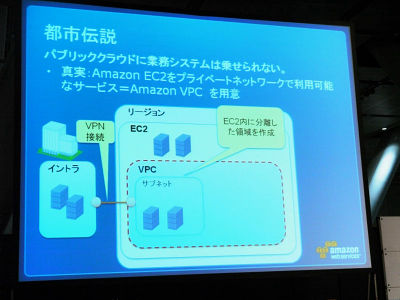

We also hear requests such as "it is hard to move in-house systems to the public cloud because internal IP addresses cannot be used." For this, we started a service called Virtual Private Cloud in the Tokyo region this month.

To explain what this is: it isolates a part of EC2, and you create servers inside it. You connect to it from your intranet over a VPN, assign your own IP addresses, and use it as part of your company's environment.

When you need to expand an existing data center, instead of adding servers there you can create a VPC, connect to it over VPN, create servers inside it, and use 10 or 20 of them only when needed.

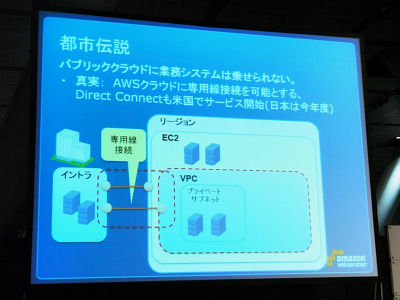

Furthermore, we have also begun a dedicated line connection service, which lets you connect the data center your company owns to Amazon Web Services over a dedicated line. Bandwidth is guaranteed through dedicated connections of 1 Gbps, 10 Gbps, or combinations of them.

So even though it is called a cloud, you can achieve exactly the same security level as a private cloud, while using it only when needed and without initial expense. The dedicated line connection service has already started in the US, and in Japan we are planning it for this fiscal year.

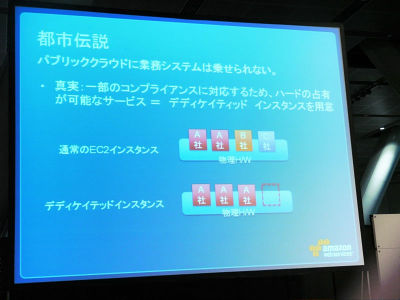

This is also interesting: some customers understand that virtual servers like EC2 are very secure, but for compliance reasons cannot share a physical server with other companies' servers. For those customers we have started Dedicated Instances, a service that gives you exclusive use of the hardware. With a normal EC2 instance, a hypervisor runs on one physical server and hosts multiple virtual servers. That is, company B's server may sit alongside company A's server.

These are fundamentally completely isolated, so this is not a technical problem, but it can be a compliance problem. To solve it, this service ensures that no other company's servers are placed on a physical server that Company A is using.

By paying a fee, you can use Dedicated Instances within a VPC.

I have talked about the truth of AWS against various "urban legends" so far.

Today was a short 40 minutes, but thank you very much for listening.

in Coverage, Web Service, Posted by darkhorse