Google handles one search query in 0.2 seconds using 1000 machines

That Google that returns the result immediately if I put the phrase to be searched is that Google, but in order to process that one phrase, in fact it uses 1000 servers indeed, it is very fast processing in only 0.2 seconds to WSDM 2009 It became clear. It was Google Fellow that keynote speechesJeff DeanAt the "Google I / O" conference in June 2008It takes less than 0.5 seconds by 700 to 1000 serversAlthough I said that, in this lecture, it became clear that Google continues to evolve steadily where users do not notice.

The latest information on behind the unknown Google is from the following.

Geeking with Greg: Jeff Dean keynote at WSDM 2009

Single Google Query uses 1000 Machines in 0.2 seconds

First of all from easy-to-understand examples of Google's growth over the past 10 years from 1999 to 2009.

· Now we are processing 1000 times more query than before

· The processing capacity of the machine became 1000 times

· It used to take 1000 milliseconds before, but now it has been speeded up to 200 milliseconds

· When it reached the page update detection, it reached 10000 times, initially it took several months to reflect but now the page is updated and reflected in a few minutes

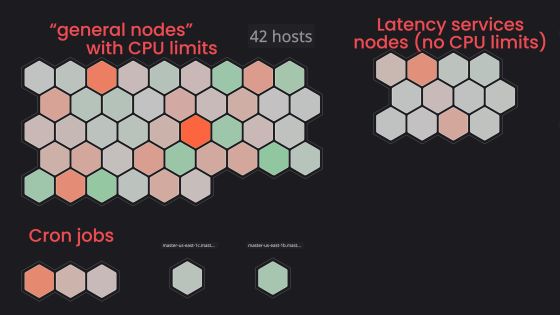

Also, according to Jeff Dean, Google puts the search index all in memory several years ago and displays search results almost instantaneously to the person who is trying to search, so for each query, 2 , It is said that thousands of machines are working in tandem instead of three dozen machines.

Google has developed various index compression technologies over the past several years and finally put it in a format that combines the four deltas of the position in order to minimize the number of replacement work required for decompression I told you it was solved.

Also, Google is paying attention to where their data is located on the disk, and data that needs to be read immediately is placed on the outer circumference of the disk which can read data at a higher speed even in the hard disk , It seems that cold data (data that does not need to be read out quickly, data with low reading frequency) and short data are placed on the inner circumference of the disk.

Also, in usual server applications, we use ECC memory at a higher price than usual which can correct errors themselves, but Google uses non-parity memory, so we created our own program to recover from errors, My own disk scheduler. The Linux kernel has also made a number of corrections to meet the needs.

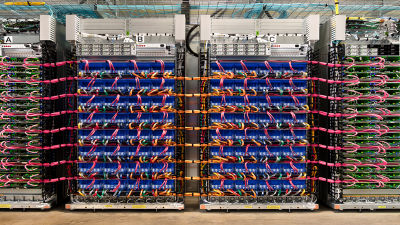

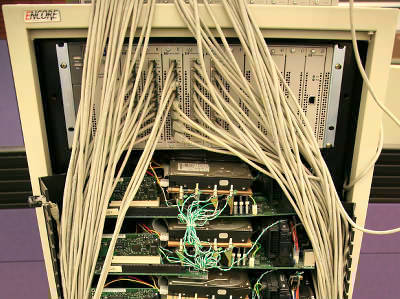

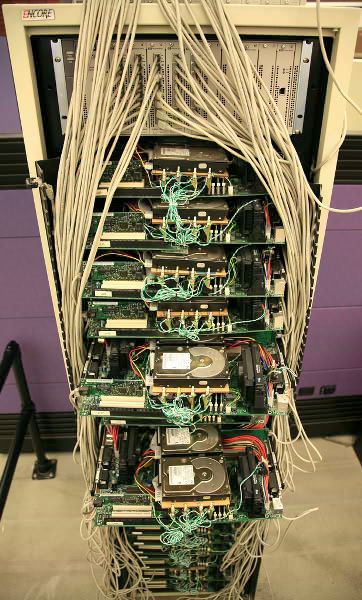

Regarding the physical server as well, in the first phase it was a self-made server without a case, then it became a server to fit in a normal rack, but now it is back to a custom server without case again.

This is the first server

Jeff Dean Mr. Jeff Dean, Google has rolled out seven major re-architectures (rebuilds) over the last 10 years and these changes are often completely different index formats and totally new storage systems like GFS and BigTable It was said that there was sometimes. In all these rollouts, Google says that he did roll back immediately if it did not go well. In some rollouts, new code moved in the new data center, older data centers remained in motion, and switched traffic between data centers.

Also, Google is always doing minor changes, experiments and testing of new code that users searching are unaware of, as these experiments are done quickly and quietly, the user noticed what has changed I will not be able to do it.

We continue to work on language barriers as well, and Google's machine translation system, which is a multi terabyte model to simply translate one sentence, is doing one million automatic checks. Google's goal is to make information available in all languages you can enter and go, regardless of which language you are deciding to speak.

Related Posts:

in Note, Posted by darkhorse