Anthropic explains how AI systems fail

Anthropic, the AI company behind the Claude AI model, has published the results of a study into the causes of AI system failure. AI failures have usually been attributed to systems misreading human intent or producing inaccurate output, but the study found that failures showing 'completely meaningless behavior' are also an important factor.

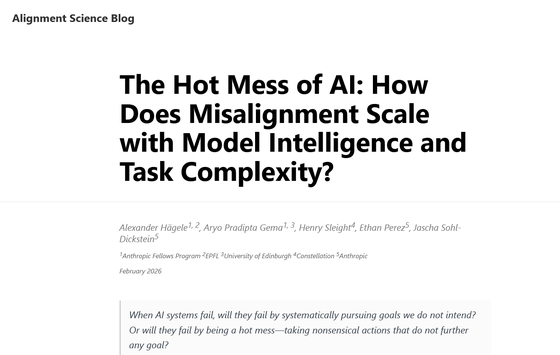

The Hot Mess of AI: How Does Misalignment Scale with Model Intelligence and Task Complexity?

As AI becomes more capable and takes on a wider range of tasks, understanding how AI systems can fail dramatically becomes an increasingly important safety issue.

A classic example of AI failure is the 'paperclip maximizer' scenario, a thought experiment published by Swedish philosopher Nick Bostrom in 2003. It suggests that if an AI were created with the sole goal of making as many paperclips as possible, it might decide that it would be better off without any humans, who could interfere with its goal by turning off the machine. The scenario warns that even seemingly innocuous goals can pose existential risks to humans. While the thought experiment itself is extreme and unlikely to actually occur, an AI reading the wrong goal from a prompt and misdirecting its output as a result is a common failure.

Anthropic calls the situation where an AI runs wild with unintended goals 'misalignment.' While traditional safety research has focused on misalignment, the new research suggests that as tasks become more difficult and reasoning becomes longer, model failures may stem from an unpredictable, self-undermining 'hot mess' rather than systematic misalignment.

In the study, the researchers quantified the failures of state-of-the-art reasoning models by decomposing them into bias (consistent, systematic errors) and variance (random errors that differ from one run to the next). They evaluated four reasoning models, 'Claude Sonnet 4,' OpenAI's 'o3-mini' and 'o4-mini,' and Alibaba's 'Qwen3,' on multiple-choice benchmarks (GPQA, MMLU), agentic coding (SWE-Bench), and safety evaluations (Model-Written Evals), and investigated which types of failure appeared. They also trained small models of their own on synthetic optimization tasks.
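As a rough illustration of the bias and variance split, the sketch below asks the same numeric question several times and separates the average squared error into a systematic part and a random part. It is not the procedure used in the study: the function names, the sample answers, and the `variance_share` ratio are hypothetical, and squared error on numeric answers is assumed purely to keep the arithmetic simple.

```python
from statistics import fmean, pvariance


def decompose_errors(samples, target):
    """Split the mean squared error of repeated answers into bias^2 and variance.

    samples: numeric answers from repeated runs on the same question (hypothetical data)
    target:  the correct answer
    """
    mean_answer = fmean(samples)
    bias_sq = (mean_answer - target) ** 2            # systematic error shared by all runs
    variance = pvariance(samples, mu=mean_answer)    # run-to-run scatter around the mean
    mse = fmean([(s - target) ** 2 for s in samples])  # equals bias_sq + variance
    return bias_sq, variance, mse


def variance_share(bias_sq, variance):
    """Fraction of total error due to variance (an illustrative ratio, assumed here)."""
    total = bias_sq + variance
    return variance / total if total > 0 else 0.0


target = 10.0
consistent_but_wrong = [12.0, 12.0, 12.0, 12.0]  # always off by the same amount
scattered = [4.0, 16.0, 7.0, 13.0]               # right on average, wrong every time

for name, runs in [("consistent", consistent_but_wrong), ("scattered", scattered)]:
    b, v, mse = decompose_errors(runs, target)
    print(name, "bias^2 =", b, "variance =", v, "share =", variance_share(b, v))
```

In this toy setup the first set of answers is all bias (the same wrong answer every time), while the second is all variance (answers scattered around the correct value), which is the distinction the article draws between systematic misalignment and a 'hot mess.'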

The experiments showed that across all tasks and models, the longer a model spent reasoning and executing actions, the less consistent it became. The more time the AI spent thinking, the greater the likelihood of unpredictable 'hot mess' failures. In particular, inconsistency rose dramatically when the model engaged in long, spontaneous reasoning.

To explain these 'hot mess' failures, the study points to the importance of viewing AI models as 'dynamical systems.' AI models are not simply machines that stably optimize a clear goal; rather, they make inferences by tracing trajectories through a high-dimensional state space. Constraining such a dynamical system to behave as a coherent optimizer is extremely difficult, and the more complex the state space, the more the number of constraints required can grow, potentially exponentially. Anthropic therefore states that considerable effort is required to make AI models operate as optimizers, and that this becomes increasingly difficult as the scale of AI models increases.

The researchers also observed that larger models became more consistent on easy tasks but lost consistency on difficult tasks. This suggests that while scaling can improve overall accuracy, scaling alone cannot eliminate 'hot mess' failures on difficult problems.

In response to the study's findings, Anthropic stated, 'We suggest that future AI failures may be more like a hot mess, where complex factors intertwine and spiral out of control, rather than the consistent pursuit of untrained goals.' While alignment remains a problem, Anthropic concluded that research priorities need to change.

in AI, Posted by log1e_dh