Anthropic investigates whether the claim that 'relying on AI doesn't improve skills' holds up

Because generative AI can produce answers that appear correct without requiring deep human thought, it is sometimes said that it does not cultivate human thinking skills. Research by Anthropic, the developer of the AI 'Claude,' investigated the relationship between AI and human skills.

How AI assistance impacts the formation of coding skills \ Anthropic

Previous research suggests that people who receive AI assistance are less engaged in their work and put less effort into it. To investigate what happens when people offload cognitive effort in this way, Anthropic ran an experiment to see whether the speed of skill acquisition and the depth of understanding change depending on whether AI is used.

Anthropic recruited 52 beginner-level software developers who had been using Python at least once a week for over a year and were somewhat familiar with AI coding assistance tools, but had not mastered the Python library Trio.

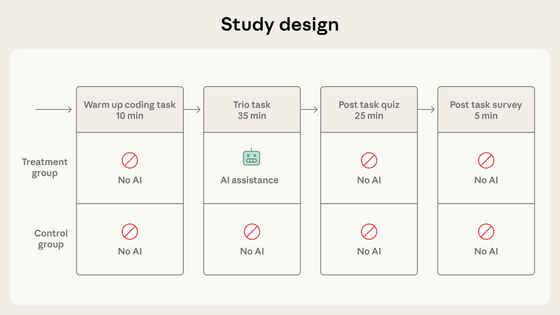

The study was divided into three parts: a warm-up to learn Trio, the main task of coding two different functions using Trio, and a quiz to assess comprehension. Participants were informed that they would take the quiz after completing the task. Participants were split into two groups: one group was allowed to use the AI only during the main task, and the other group was prohibited from using the AI throughout the experiment.

In this experiment, the group using AI completed the main task about two minutes faster on average, but this difference was not statistically significant. Quiz performance, however, differed significantly: the non-AI group averaged 67%, compared to 50% for the AI group, a gap of 17 percentage points. The gap was largest on the debugging questions, suggesting a difference in the ability to recognize code errors and understand why the code fails.

However, some people in the AI group achieved high scores despite using AI. These participants apparently asked the AI follow-up questions after generating code in order to deepen their own understanding. By contrast, people who left the whole process to the AI from start to finish, or who delegated everything after asking one or two questions at the beginning, scored lower; when the sample was narrowed to just these people, the average score was only around 40%.

Anthropic said, 'Actively adopting AI in the workplace, especially in software development, appears to come with trade-offs. Importantly, using AI doesn't necessarily mean getting a lower score; how we interact with AI while striving for efficiency will influence how much we learn. Under time constraints or organizational pressures, less skilled developers may rely on AI to complete tasks as quickly as possible, even at the expense of skill acquisition and debugging capabilities. Managers should be intentional about designing large-scale adoption of AI tools and consider systems and design choices that allow engineers to continue learning as they go.'
