Video generation AI 'LTX-2' arrives as an open-source model that runs locally and powers NVIDIA's '4K video generation pipeline'

AI development company Lightricks has released its audio-video generation model 'LTX-2' as open source.

LTX-2 Overview | LTX Documentation

https://docs.ltx.video/open-source-model/getting-started/overview

LTX-2 is now open source.

— LTX-2 (@ltx_model) January 6, 2026

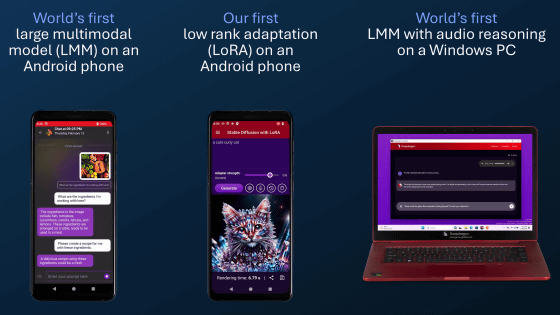

The first truly open audio-video generation model with open weights and full training code, designed to run locally on @NVIDIA_AI_PC RTX consumer GPUs.

Details below 🧵 pic.twitter.com/V8jkQwxjV8

NVIDIA RTX Accelerates 4K AI Video Generation on PC | NVIDIA Blog

https://blogs.nvidia.com/blog/rtx-ai-garage-ces-2026-open-models-video-generation/

Details of the LTX-2 architecture are published in the paper (PDF file). The main features of LTX-2 are as follows:

・High-fidelity generation

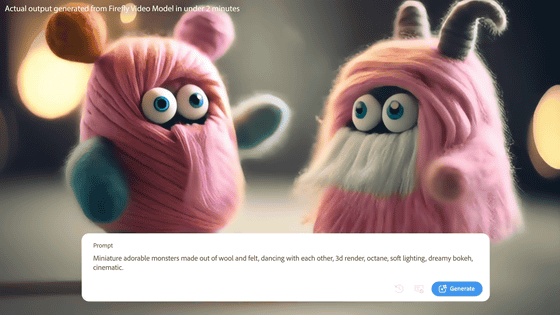

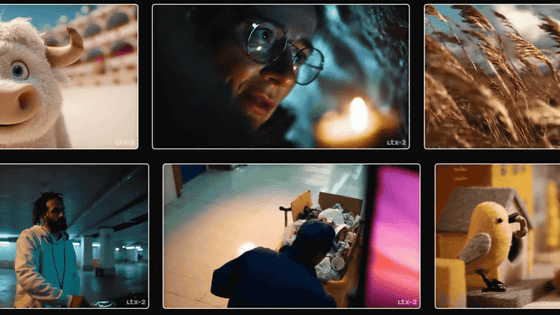

LTX-2 can generate clips of up to approximately 20 seconds with synchronized audio and video. Depending on the configuration and hardware, it also supports high resolutions and high frame rates, and it is designed to scale from fast generation suited to iterative trial and error up to output that prioritizes quality over speed.

・Simultaneous generation of audio and video in one model

Dialogue, lip movements, and ambient sounds are all generated in a single pass, so no post-processing is needed to correct audio delay. The result is natural conversational timing and facial expressions, which allows for expressive performances.

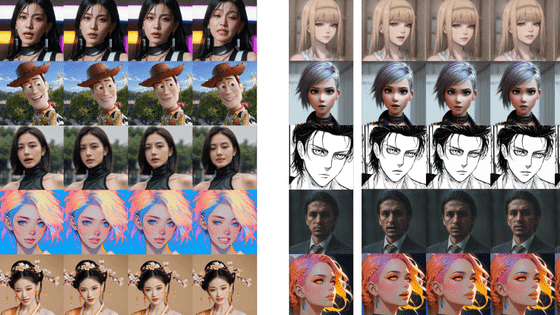

・Motion realism

LTX-2 can create dynamic scenes with stable motion while keeping frames consistent and preserving the integrity of people and characters. The design makes people and characters less likely to look unnatural.

・Fine-grained control

In addition to LoRA-based customization, LTX-2 supports motion control that takes camera movement into account. By combining multimodal inputs such as text, images, video, audio, and depth information, you can precisely direct the visual result to match your intent.

・Efficient design

Thanks to its compact latent space and improved architecture, LTX-2 runs efficiently on high-end consumer GPUs, enabling high-quality audio and video generation locally without the need for large-scale dedicated infrastructure.

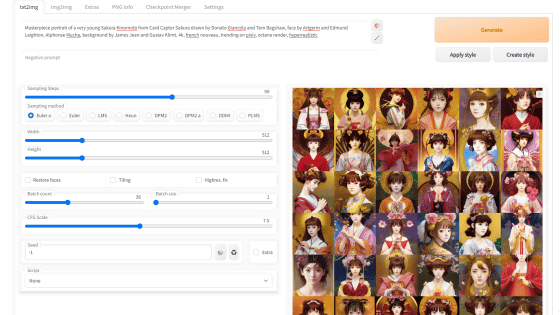

Instructions for using LTX-2 can be found in the Quick Start guide. While there are multiple ways to use LTX-2, Lightricks recommends starting with ComfyUI, an open-source GUI tool that it says offers the best balance of power and ease of use.

Quick Start | LTX Documentation

https://docs.ltx.video/open-source-model/getting-started/quick-start

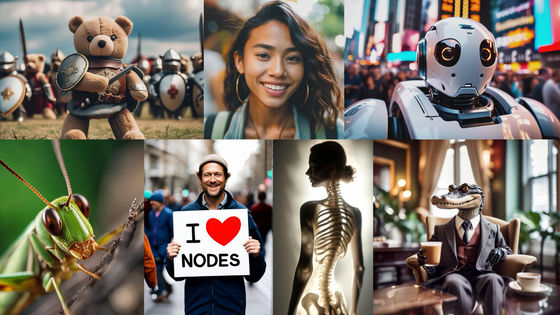

NVIDIA announced a series of AI upgrades for GeForce RTX, NVIDIA RTX PRO, and NVIDIA DGX Spark devices in its 'RTX AI Garage' announcement, made in conjunction with CES 2026. One of the highlights is a pipeline that combines video generation with upscaling to enable 4K video generation, which was previously difficult on local PCs. LTX-2 is the model used to realize this pipeline.

Once a clip is generated, it is upscaled to 4K in just a few seconds using ComfyUI's new RTX Video node. This upscaler works in real time, sharpening edges and removing compression artifacts to produce clear video. As a result, LTX-2 can produce up to 20 seconds of 4K video, delivering results that rival leading cloud-based models. NVIDIA describes LTX-2 as 'a major milestone in local AI video production.'

The ComfyUI blog shows examples of LTX-2 generating videos from text, images, and control inputs.

LTX-2: Open-Source Audio-Video AI Model Now Available in ComfyUI

https://blog.comfy.org/p/ltx-2-open-source-audio-video-ai
