Resolve low-bandwidth screen sharing issues by replacing H.264 video streaming with a series of JPEG screenshots

HelixML, an enterprise AI platform that runs autonomous coding agents in a cloud sandbox, includes a mechanism for remotely sharing the screen so that users can monitor what the AI assistant is doing. This screen sharing normally streams H.264-encoded video, but the development team explained in a blog post that they solved the problem of screen sharing failing in low-bandwidth environments by capturing and sending JPEG screenshots instead.

We Mass-Deployed 15-Year-Old Screen Sharing Technology and It's Actually Better

https://blog.helix.ml/p/we-mass-deployed-15-year-old-screen

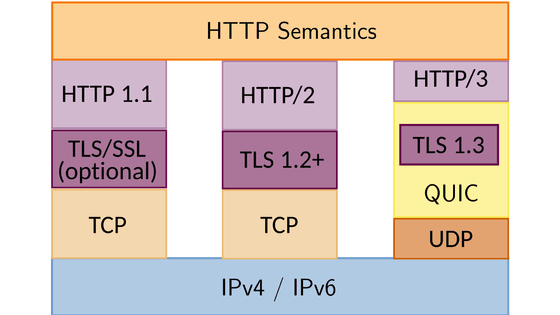

WebRTC is a common way to send and receive screen-sharing video. However, WebRTC, including its TURN relays, typically runs over UDP, and UDP is often blocked or deprioritized on corporate networks, so video frequently fails to connect in corporate environments.

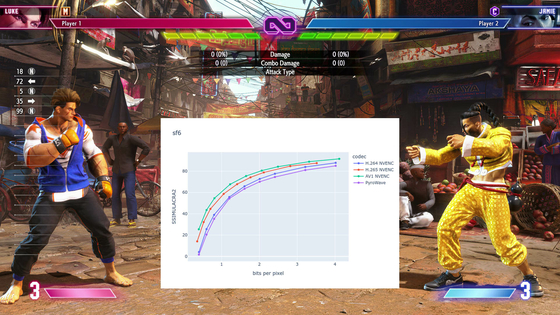

To address this issue, the development team switched to a configuration that streams video frames over WebSocket, which passes through proxies and firewalls. It uses GStreamer for hardware H.264 encoding and WebCodecs for hardware decoding in the browser, and delivers video at a frame rate of 60 fps, a bitrate of 40 Mbps, and a latency of less than 100 ms.

However, although this video streaming system worked well in the development environment, it broke down on unstable Wi-Fi such as in cafes. TCP, which WebSocket runs on, guarantees in-order delivery, so when the network is congested, frames queue up in TCP buffers and the video falls several seconds, or even tens of seconds, behind the actual operation.
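How quickly such a delay builds up can be estimated with simple arithmetic. The following is a minimal sketch, assuming a constant-rate sender, a constant-rate link, and an unbounded send buffer; the function name and the 30 Mbps link speed are hypothetical values chosen for illustration, not figures from the blog post.

```python
def backlog_delay_seconds(stream_mbps: float, link_mbps: float, elapsed_s: float) -> float:
    """Estimate how far behind the newest frame is after `elapsed_s` seconds.

    Simplified model (assumption): the sender produces `stream_mbps`
    continuously, the link drains `link_mbps` continuously, and nothing
    is ever dropped because delivery is ordered and reliable.
    """
    if link_mbps <= 0:
        raise ValueError("link_mbps must be positive")
    # Megabits that could not be sent yet and are waiting in buffers.
    surplus_mbit = max(0.0, stream_mbps - link_mbps) * elapsed_s
    # Time the link needs to drain that backlog before new data gets through.
    return surplus_mbit / link_mbps


# A 40 Mbps stream over a link that only sustains 30 Mbps (illustrative numbers).
for t in (1, 10, 30):
    delay = backlog_delay_seconds(40, 30, t)
    print(f"after {t:>2}s of streaming: newest frame is {delay:.1f}s behind")
```

Under this model the delay grows linearly for as long as the stream outpaces the link, which matches the "several seconds, or even tens of seconds" behavior described above.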

Initially, to solve this problem, the development team tried to create a mode that only sent 'keyframes.'

To save data, the H.264 codec commonly used in video streaming does not send every frame as a complete image. Instead, it mostly sends 'difference' data that describes only the parts that have changed from the previous frame. A frame that contains the complete image is called a keyframe, and a frame that is represented as a difference is called a P frame.

For 60fps video, 60 frames are normally sent per second, but the team configured the stream to discard all 59 P frames and send only the one remaining keyframe. The video then moves jerkily at 1fps, but the amount of data transmitted drops significantly.
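The keyframes-only idea can be sketched as a simple filter over a frame sequence. The `Frame` type, the `keyframes_only` helper, and the frame sizes below are hypothetical and only illustrate the principle; they are not the team's actual code.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass(frozen=True)
class Frame:
    index: int
    is_keyframe: bool
    size_bytes: int


def keyframes_only(frames: Iterable[Frame]) -> List[Frame]:
    """Keep frames that decode on their own and drop every P frame."""
    return [f for f in frames if f.is_keyframe]


# One second of 60fps video: 1 keyframe followed by 59 P frames.
# The sizes are illustrative assumptions, not measurements.
second = [Frame(0, True, 300_000)] + [Frame(i, False, 80_000) for i in range(1, 60)]

kept = keyframes_only(second)
sent = sum(f.size_bytes for f in kept)
total = sum(f.size_bytes for f in second)
print(f"kept {len(kept)} of {len(second)} frames, {sent} of {total} bytes")
```

Because a keyframe does not depend on earlier frames, the decoder can still display each one even though everything in between was discarded.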

However, when the team actually put this into operation, the screen froze completely after displaying only the first frame. The cause was the behavior of the Moonlight protocol, which was used as the basis for communication. The Moonlight protocol had a rule that 'if a frame is not consumed, the next one will not be sent,' so it could not properly handle partial frame discards.

This problem was solved by using a screenshot capture API that was prepared for debugging purposes.

This API retrieves lightweight JPEG images, each about 100KB to 150KB in size, several times per second. Opening it in a browser instantly displays a 1080p desktop JPEG image, and repeatedly refreshing it delivers beautiful still images at around 5fps.
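A polling client of this kind can be sketched as follows. This is a minimal illustration under stated assumptions: `poll_screenshots` and `fake_fetch` are hypothetical names, and the real screenshot endpoint and its URL are not described in the article, so the fetch step is passed in as a function.

```python
import time
from typing import Callable, Iterator


def poll_screenshots(
    fetch: Callable[[], bytes],
    max_fps: float = 5.0,
    frames: int = 10,
    clock: Callable[[], float] = time.monotonic,
    sleep: Callable[[float], None] = time.sleep,
) -> Iterator[bytes]:
    """Yield `frames` screenshots, never requesting faster than `max_fps`.

    Only one request is in flight at a time, so a slow link lowers the
    frame rate instead of building up a backlog of stale images.
    """
    min_interval = 1.0 / max_fps
    for _ in range(frames):
        started = clock()
        yield fetch()  # blocks until the whole JPEG has arrived
        remaining = min_interval - (clock() - started)
        if remaining > 0:
            sleep(remaining)  # wait out the rest of this frame's time slot


def fake_fetch() -> bytes:
    # Stand-in for an HTTP GET of the screenshot endpoint (hypothetical):
    # JPEG start/end markers around dummy payload bytes.
    return b"\xff\xd8" + b"\x00" * 16 + b"\xff\xd9"


shots = list(poll_screenshots(fake_fetch, max_fps=50.0, frames=3))
print(f"received {len(shots)} screenshots")
```

The design choice worth noting is that the next request is only issued after the previous image has fully arrived, which is what keeps the display close to the current state of the screen.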

Because JPEG images are self-contained, they are always displayed as a complete image when they arrive, and are less likely to suffer from 'waiting for the next keyframe' or 'decoder state corruption' like video. Furthermore, while an H.264 keyframe is 200KB to 500KB, a 70% quality 1080p JPEG image is around 100KB to 150KB, so in some situations JPEG may actually be lighter in terms of the transfer volume per frame.
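The figures quoted above can be turned into approximate bandwidth requirements. This is a back-of-the-envelope sketch that assumes decimal kilobytes (1 KB = 1,000 bytes) and ignores protocol overhead; the helper name `mbps` is hypothetical.

```python
KB = 1000  # bytes; decimal kilobytes are an assumption


def mbps(bytes_per_frame: int, fps: float) -> float:
    """Bandwidth in megabits per second for frames of a given size and rate."""
    return bytes_per_frame * 8 * fps / 1_000_000


# Sizes and frame rates are the ranges quoted in the article.
h264_keyframes_1fps = (mbps(200 * KB, 1), mbps(500 * KB, 1))
jpeg_5fps = (mbps(100 * KB, 5), mbps(150 * KB, 5))
full_h264_stream = 40.0  # Mbps, the bitrate of the normal 60fps stream

print(f"H.264 keyframes at 1fps: {h264_keyframes_1fps[0]:.1f} to {h264_keyframes_1fps[1]:.1f} Mbps")
print(f"JPEG polling at 5fps:    {jpeg_5fps[0]:.1f} to {jpeg_5fps[1]:.1f} Mbps")
print(f"Full H.264 stream:       {full_h264_stream:.1f} Mbps")
```

Under these assumptions, JPEG polling at 5fps needs roughly a tenth of the bandwidth of the full stream while delivering five times the frame rate of the keyframes-only mode.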

So the development team adopted a hybrid configuration: 'On good connections, it uses H.264, and on bad connections, it switches to JPEG polling.' On good connections, with a round-trip time (RTT) of less than 150 ms, it displays smooth H.264 video, but when the RTT exceeds 150 ms, it automatically stops the video and switches to JPEG image transmission.

However, this created a new problem. When H.264 streaming stops, the load on the WebSocket drops and the RTT improves, so the monitoring logic mistakenly concludes that the connection has recovered and resumes the video. Once the video resumes, the connection becomes congested again and the display falls back to screenshots, so the mode kept flipping roughly every 2 seconds. For this reason, the development team decided that the return to H.264 streaming should not be automatic, and instead requires the user to manually click a button.
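The resulting switching rule, automatic downgrade and manual-only upgrade, can be sketched as a small state machine. The class and method names here are hypothetical; only the 150 ms threshold and the one-way automatic switch come from the article.

```python
from enum import Enum


class Mode(Enum):
    H264 = "h264"
    JPEG = "jpeg"


class StreamModeController:
    """Downgrade to JPEG automatically; return to H.264 only on user request."""

    def __init__(self, rtt_threshold_ms: float = 150.0) -> None:
        self.rtt_threshold_ms = rtt_threshold_ms
        self.mode = Mode.H264

    def on_rtt_sample(self, rtt_ms: float) -> Mode:
        # A high RTT triggers the fallback. A low RTT is deliberately
        # ignored while in JPEG mode, because the RTT improves as soon as
        # the video stops and would cause the flip-flopping described above.
        if self.mode is Mode.H264 and rtt_ms > self.rtt_threshold_ms:
            self.mode = Mode.JPEG
        return self.mode

    def on_user_requests_video(self) -> Mode:
        # Called when the user clicks the button to resume video.
        self.mode = Mode.H264
        return self.mode


controller = StreamModeController()
history = [controller.on_rtt_sample(rtt).value for rtt in (80, 200, 60, 60)]
print(history)
print(controller.on_user_requests_video().value)
```

After the 200 ms sample the controller stays in JPEG mode even though later samples look healthy, and it only returns to H.264 when the user asks for it.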

Through this experience, the development team concluded that 'old, simple solutions can sometimes be more effective in the harsh environments of the real world than sophisticated, complex technology,' and they praised the fact that an old technology dating back 15 years was the key to solving a current problem as 'paradoxically excellent.'

Meanwhile, commenters on the social news site Hacker News offered opinions such as: 'The root cause of the accumulated delay is weak congestion control and bandwidth estimation, so the sender should reduce the bitrate and frame rate, using initial bandwidth probes and increases in transmission delay as congestion signals,' 'What about delivering chunks of video using HTTP Live Streaming and using adaptive bitrate playback to switch quality according to line conditions?' 'Rather than sending the screen as video, why not send it as application data such as text and reconstruct it on the receiving side?' and 'Even with TCP, the design should not allow frames to accumulate on the sender side, and transmission control should assume feedback. Using a low-level library such as FFmpeg, whose behavior is easy to understand, is also an option.'
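One way to read the last suggestion, not letting frames accumulate on the sender side, is a one-slot buffer in which a newer frame replaces any frame that has not been sent yet. The following is only a sketch of that idea; `LatestFrameSlot` is a hypothetical name and this is not something the HelixML team or the commenters published.

```python
import threading
from typing import Optional


class LatestFrameSlot:
    """Hold at most one pending frame; newer frames overwrite older ones."""

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._frame: Optional[bytes] = None
        self.dropped = 0  # frames overwritten before they could be sent

    def put(self, frame: bytes) -> None:
        # Called by the capture side for every new frame.
        with self._lock:
            if self._frame is not None:
                self.dropped += 1  # the stale frame is discarded, not queued
            self._frame = frame

    def take(self) -> Optional[bytes]:
        # Called by the sender whenever the connection is ready for more data.
        with self._lock:
            frame, self._frame = self._frame, None
            return frame


slot = LatestFrameSlot()
for payload in (b"frame-1", b"frame-2", b"frame-3"):
    slot.put(payload)  # the sender is busy, so older frames get replaced

print(slot.take(), slot.dropped)
```

With self-contained images such as JPEGs, dropping stale frames this way is safe because no later frame depends on an earlier one.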

in Software, Posted by log1i_yk