What technologies support the live streaming that is so popular on YouTube and Twitch?

With the advancement of communication infrastructure, distribution software such as

Video live streaming: Notes on RTMP, HLS, and WebRTC

https://www.daily.co/blog/video-live-streaming/

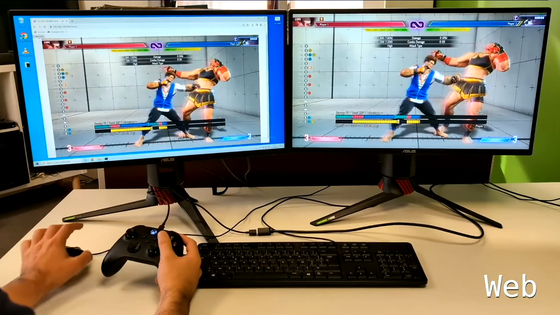

For example, on Twitch, known as a platform for live game streaming, the data communication protocol differs between 'broadcaster to Twitch' and 'Twitch to viewer'. Video sent from the broadcaster to Twitch almost always uses the Real-Time Messaging Protocol (RTMP). On the other hand, if you are watching a livestream on Twitch, you are receiving it over a protocol called 'HTTP Live Streaming (HLS)' rather than RTMP.

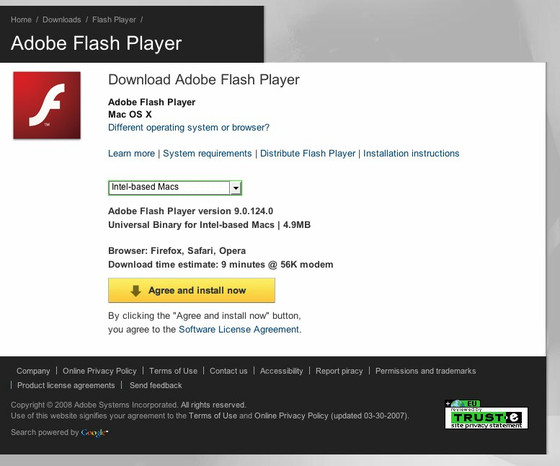

RTMP is a TCP-based communication protocol developed by Macromedia (acquired by Adobe in 2005) for transmitting data between Adobe Flash Player and servers. It was developed about 20 years ago and has long been used to send live video from one computer system to another. Because Adobe Flash Player was for many years the standard video playback plug-in on the web, RTMP became the de facto standard in the industry. Adobe ended support for Flash Player on December 31, 2020, and with it RTMP playback in the browser. At the time of writing, however, RTMP was still widely used to transmit video from the broadcaster to the media server.
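RTMP runs directly over a TCP connection (port 1935 by default), so a session begins with a fixed-size handshake before any audio or video is sent. The sketch below follows the plain handshake described in Adobe's RTMP specification. It only builds the client's first message (C0 + C1) and does not open a socket. The function name `build_c0_c1` is hypothetical, and the encrypted or extended handshake variants used by some implementations are not covered.

```python
import os
import struct

RTMP_VERSION = 3        # C0: version byte for plain (unencrypted) RTMP
HANDSHAKE_SIZE = 1536   # C1, S1, C2 and S2 are each 1536 bytes long


def build_c0_c1(timestamp: int = 0) -> bytes:
    """Return the client's opening handshake bytes, C0 followed by C1.

    C1 layout (plain handshake): 4-byte big-endian timestamp,
    4 zero bytes, then 1528 bytes of random data.
    """
    c0 = bytes([RTMP_VERSION])
    c1 = struct.pack(">II", timestamp & 0xFFFFFFFF, 0) + os.urandom(HANDSHAKE_SIZE - 8)
    return c0 + c1


if __name__ == "__main__":
    packet = build_c0_c1()
    # A client would write `packet` to the TCP socket, wait for S0 + S1 from
    # the server, and then reply with C2 (essentially an echo of S1).
    print(len(packet), packet[0])  # 1537 3
```

Only after the server answers with S0, S1 and S2 does the client start pushing RTMP-formatted audio and video into the same socket.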


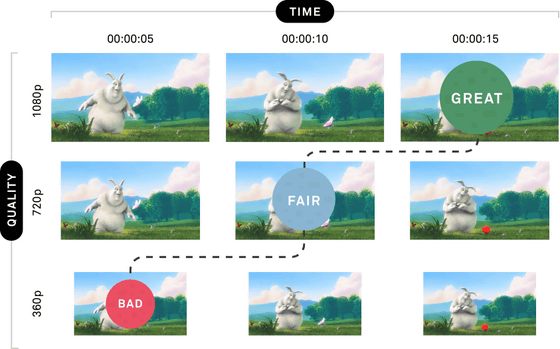

HLS is an HTTP-based communication protocol developed by Apple and released in 2009. HLS supports multiple bitrates, which lets the playback client switch between them dynamically. For example, someone watching a livestream on a smartphone outdoors can receive a low-bitrate stream, while someone watching on a home internet connection receives a high-bitrate one.

A change in bitrate means that the quality of the livestream seen on the smartphone differs from the quality seen on the home PC. HLS achieves this through Adaptive Bit Rate (ABR) streaming, which packages the video into short time segments and encodes each one at several bitrates.


ABR streaming divides the video into short pieces and encodes each piece at several resolutions and bitrates. When the network or the receiving device can no longer keep up with the current bitrate, the player switches to a different one. As a result, when network conditions deteriorate, playback automatically drops to a lower resolution instead of stopping, and this is a defining characteristic of HLS. The short packaged pieces are chunk files with a '.ts' extension. The metadata describing them is stored in a manifest (playlist) file with a '.m3u8' extension.
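In an HLS stream, the top-level .m3u8 file (the master, or multivariant, playlist) has one entry per bitrate rendition. Each entry carries a `BANDWIDTH` attribute in bits per second and is followed by the URI of that rendition's own playlist. The sketch below shows the core of the ABR decision under simple assumptions. The playlist contents and URIs are hypothetical. The 0.8 safety factor on measured throughput is also an illustrative choice, not the rule of any particular player; real players use more elaborate throughput and buffer estimates.

```python
import re

# Hypothetical master (multivariant) playlist with three renditions.
MASTER_PLAYLIST = """#EXTM3U
#EXT-X-STREAM-INF:BANDWIDTH=800000,RESOLUTION=640x360
low/index.m3u8
#EXT-X-STREAM-INF:BANDWIDTH=2800000,RESOLUTION=1280x720
mid/index.m3u8
#EXT-X-STREAM-INF:BANDWIDTH=5000000,RESOLUTION=1920x1080
high/index.m3u8
"""


def parse_variants(playlist: str) -> list[tuple[int, str]]:
    """Return (bandwidth_bps, uri) pairs, lowest bitrate first."""
    lines = [ln.strip() for ln in playlist.splitlines() if ln.strip()]
    variants = []
    for i, line in enumerate(lines):
        if line.startswith("#EXT-X-STREAM-INF:") and i + 1 < len(lines):
            # Match BANDWIDTH but not AVERAGE-BANDWIDTH.
            m = re.search(r"[:,]BANDWIDTH=(\d+)", line)
            if m:
                # The rendition's playlist URI is on the next line.
                variants.append((int(m.group(1)), lines[i + 1]))
    return sorted(variants)


def choose_variant(variants: list[tuple[int, str]], measured_bps: float,
                   safety: float = 0.8) -> tuple[int, str]:
    """Highest-bitrate rendition that fits within safety * measured throughput."""
    budget = measured_bps * safety
    fitting = [v for v in variants if v[0] <= budget]
    # If nothing fits, fall back to the lowest-bitrate rendition.
    return fitting[-1] if fitting else variants[0]


if __name__ == "__main__":
    renditions = parse_variants(MASTER_PLAYLIST)
    for throughput in (500_000, 4_000_000, 10_000_000):
        print(throughput, "->", choose_variant(renditions, throughput)[1])
```

Re-running `choose_variant` each time a chunk finishes downloading, with the throughput measured on that chunk, is what lets quality drop on a weak connection without playback stopping.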

The advantage of HLS over RTMP is that HLS chunks can be delivered efficiently through content delivery networks (CDNs) such as Cloudflare, Fastly, Akamai, and CloudFront. RTMP is a stream-oriented protocol: to send video and audio over an RTMP connection, you open a TCP socket and push RTMP-formatted data into it. With HLS, by contrast, the manifest files and chunks are ordinary HTTP resources that a CDN can cache, so viewers can receive and watch a livestream from anywhere.
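During a live HLS stream, the player repeatedly re-downloads a media playlist and then fetches each newly listed segment with an ordinary HTTP GET. Every segment is therefore a static file at its own URL that a CDN can cache. The sketch below does no network I/O. It parses a hypothetical media playlist and works out which segments are new since the last refresh, using the `#EXT-X-MEDIA-SEQUENCE` tag (the sequence number of the first segment listed). The playlist and the `cdn.example.com` URL are made up for illustration.

```python
from urllib.parse import urljoin

# Hypothetical live media playlist as served at one moment in time.
MEDIA_PLAYLIST = """#EXTM3U
#EXT-X-VERSION:3
#EXT-X-TARGETDURATION:4
#EXT-X-MEDIA-SEQUENCE:120
#EXTINF:4.0,
seg120.ts
#EXTINF:4.0,
seg121.ts
#EXTINF:4.0,
seg122.ts
"""


def list_segments(playlist: str, playlist_url: str) -> list[tuple[int, str]]:
    """Return (sequence_number, absolute_url) for each segment in the playlist."""
    sequence = 0  # the first segment's sequence number is 0 if the tag is absent
    segments = []
    for line in (ln.strip() for ln in playlist.splitlines()):
        if line.startswith("#EXT-X-MEDIA-SEQUENCE:"):
            sequence = int(line.split(":", 1)[1])
        elif line and not line.startswith("#"):
            # Non-tag lines are segment URIs, resolved against the playlist URL.
            segments.append((sequence, urljoin(playlist_url, line)))
            sequence += 1
    return segments


def new_segments(playlist: str, playlist_url: str, last_seen: int) -> list[tuple[int, str]]:
    """Segments listed after `last_seen`, i.e. the ones to GET on this refresh."""
    return [s for s in list_segments(playlist, playlist_url) if s[0] > last_seen]


if __name__ == "__main__":
    url = "https://cdn.example.com/live/mid/index.m3u8"
    for seq, seg_url in new_segments(MEDIA_PLAYLIST, url, last_seen=120):
        print(seq, seg_url)
```

Every viewer requests the same segment URLs, so after the first request the CDN edge can answer the rest from its cache. An RTMP connection, by contrast, is a per-client stream that a CDN cannot cache in the same way.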

However, simply sending and receiving video data does not guarantee the real-time performance of live streaming. A livestream involves the following chain: capture and encode the game footage and webcam footage on the broadcaster's PC, send it to the media server, process the video on the media server, send it to each viewer's player, and play it back. This chain alone introduces 50 to 300 milliseconds of latency. On top of that, encoding and HLS packaging add latency on the order of a few seconds. Fetching HLS chunks from the CDN cache and unpacking them adds still more.
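A rough back-of-the-envelope model shows why segment length dominates plain HLS latency. The model rests on two assumptions. First, the packager must finish a whole segment before publishing it. Second, the player starts about three target durations behind the end of the live playlist, which is what RFC 8216 recommends for clients. The 0.3-second pipeline figure is the upper end of the 50 to 300 ms range above, and CDN fetch and decode time are ignored. This is an estimate, not a measurement, and the function name is hypothetical.

```python
def estimate_hls_latency(segment_s: float,
                         pipeline_s: float = 0.3,
                         segments_behind: int = 3) -> float:
    """Very rough glass-to-glass latency estimate for plain HLS, in seconds.

    pipeline_s       capture, encode, upload and transit (50-300 ms per the text)
    segment_s        a segment is published only after it is fully encoded;
                     the playlist target duration is assumed equal to segment_s
    segments_behind  RFC 8216: clients SHOULD NOT start playback less than
                     three target durations from the end of a live playlist
    """
    return pipeline_s + segment_s + segments_behind * segment_s


if __name__ == "__main__":
    for seg in (2, 6):
        print(f"{seg}-second segments: ~{estimate_hls_latency(seg):.1f} s")
```

Under these assumptions, shortening segments is the main lever for reducing HLS latency. That lever has a cost: shorter segments mean more playlist refreshes and more HTTP requests.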

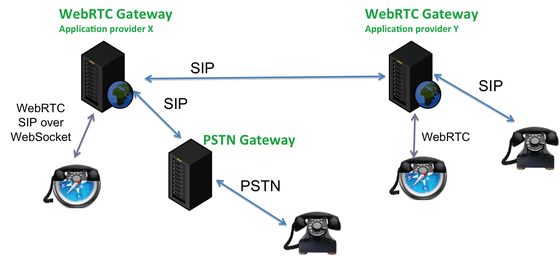

The industry standard for ultra-low-latency streaming is WebRTC, a standard created for peer-to-peer (P2P) real-time communication. It encodes the video on the sender side and sends the data as a continuous stream. Because WebRTC uses UDP instead of TCP, it does not incur the multi-second latency of HLS. Its playback buffer is kept to about 30 milliseconds, which is why it is widely used in chat and video-call tools. However, because the video is encoded on the sender side, the load on the broadcaster grows with the number of viewers, so WebRTC is not well suited to large audiences.
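The scaling problem becomes clear in a pure P2P mesh, where the sender is connected to each viewer directly. Every viewer then needs its own copy of the stream, so the broadcaster's upload bandwidth grows linearly with the audience. A WebRTC media server changes this: it receives one upload and forwards it to each viewer, which keeps the sender's upload constant. The function below models only those two cases. Its name and the 2,500 kbps bitrate are hypothetical.

```python
def sender_upload_kbps(viewers: int, bitrate_kbps: int, via_media_server: bool = False) -> int:
    """Upload bandwidth the broadcaster needs, in kbps (simplified model).

    P2P mesh: one copy of the stream per viewer.
    Media server: a single upload; the server fans it out to the viewers.
    """
    if viewers <= 0:
        return 0
    return bitrate_kbps if via_media_server else bitrate_kbps * viewers


if __name__ == "__main__":
    for n in (1, 10, 100):
        print(n, sender_upload_kbps(n, 2500), sender_upload_kbps(n, 2500, via_media_server=True))
```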

For example, suppose you are heating popcorn in the microwave while watching a movie on Netflix over home Wi-Fi. If you are connected to a 2.4 GHz Wi-Fi network, the microwave oven interferes with the signal and packet loss on the network spikes. HLS splits the video into chunks and plays from a buffer, so it can absorb packet loss lasting a few seconds. TCP retransmits the lost packets, the player automatically switches to a lower bitrate, and the stream keeps playing.

In WebRTC, however, only about 50 milliseconds of video packets are buffered, so if heavy packet loss occurs, the player cannot continue rendering the video, and the
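The difference comes down to how much media sits in the player's buffer when the network drops out. The model below is deliberately simplified. It assumes the player renders from its buffer while no packets arrive and freezes only for the part of the outage the buffer cannot cover. It ignores refill time, bitrate switching, and WebRTC's own loss-recovery mechanisms. The 50 ms figure comes from the text above. The 18-second HLS buffer (three 6-second segments) is an assumption chosen for illustration.

```python
HLS_BUFFER_MS = 3 * 6_000   # assumption: three 6-second segments buffered
WEBRTC_BUFFER_MS = 50       # roughly 50 ms, as described in the text


def stall_ms(buffered_ms: int, outage_ms: int) -> int:
    """Milliseconds of frozen playback caused by a network outage.

    The player keeps rendering buffered media during the outage and
    freezes only once the buffer is empty.
    """
    return max(0, outage_ms - buffered_ms)


if __name__ == "__main__":
    outage = 3_000  # e.g. a few seconds of microwave interference
    print("HLS stall:", stall_ms(HLS_BUFFER_MS, outage), "ms")
    print("WebRTC stall:", stall_ms(WEBRTC_BUFFER_MS, outage), "ms")
```

With HLS over TCP, the packets lost during the outage are retransmitted later, so the media is delayed rather than skipped. This is why a deep buffer can hide the gap completely.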

Also, packet loss and jitter are generally much lower when connecting to a nearby server than to a distant one. Therefore, if your livestream audience is geographically dispersed, it is important to distribute your media server infrastructure. That means building cascading or mesh connections between WebRTC media servers.
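Here is a simplified view of that advice. Each viewer connects to the media server with the lowest measured round-trip time (RTT). In a cascaded setup, the origin server forwards the stream once to each edge server that has viewers, rather than once per viewer. The server names, viewers, and RTT values below are hypothetical. Real systems also weigh server load and capacity, which this sketch ignores.

```python
def assign_viewers(viewer_rtts: dict[str, dict[str, float]]) -> dict[str, str]:
    """Map each viewer to the edge server with the lowest measured RTT (ms)."""
    return {viewer: min(rtts, key=rtts.get) for viewer, rtts in viewer_rtts.items()}


def origin_fanout(assignments: dict[str, str]) -> int:
    """Streams the origin must send when edges are cascaded: one per active edge."""
    return len(set(assignments.values()))


if __name__ == "__main__":
    # Hypothetical RTT measurements from three viewers to three regions.
    rtts = {
        "viewer-jp": {"tokyo": 18.0, "frankfurt": 240.0, "virginia": 160.0},
        "viewer-de": {"tokyo": 230.0, "frankfurt": 15.0, "virginia": 95.0},
        "viewer-jp2": {"tokyo": 25.0, "frankfurt": 250.0, "virginia": 170.0},
    }
    plan = assign_viewers(rtts)
    print(plan)
    print("origin streams:", origin_fanout(plan))
```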

Besides RTMP, HLS, and WebRTC, there is also a protocol called 'MPEG-DASH (Dynamic Adaptive Streaming over HTTP)'. Like HLS, MPEG-DASH splits the video into small pieces, encodes each chunk at several quality levels, and distributes them, so it supports ABR streaming in the same way. However, HLS restricts the codec to H.264 or H.265, whereas MPEG-DASH is codec-agnostic. Depending on the codec chosen, MPEG-DASH can therefore deliver higher-quality live streams at lower bitrates.
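An MPEG-DASH manifest (MPD) is an XML document in which each `Representation` declares its own `bandwidth` and `codecs`. This is how one stream can offer, say, H.264 and AV1 renditions side by side. The sketch below lists the renditions in a hypothetical, heavily trimmed MPD using only Python's standard library. The IDs, bitrates, and codec strings are illustrative. A real manifest also carries segment addressing (for example a `SegmentTemplate`) and timing information, which are omitted here.

```python
import xml.etree.ElementTree as ET

NS = {"mpd": "urn:mpeg:dash:schema:mpd:2011"}

# Hypothetical, trimmed MPD: one video AdaptationSet offered in two codecs.
MPD_XML = """<MPD xmlns="urn:mpeg:dash:schema:mpd:2011" type="static"
     profiles="urn:mpeg:dash:profile:isoff-live:2011"
     minBufferTime="PT2S" mediaPresentationDuration="PT60S">
  <Period id="0">
    <AdaptationSet mimeType="video/mp4">
      <Representation id="h264-720p" bandwidth="3000000"
                      codecs="avc1.64001f" width="1280" height="720"/>
      <Representation id="av1-720p" bandwidth="1800000"
                      codecs="av01.0.05M.08" width="1280" height="720"/>
    </AdaptationSet>
  </Period>
</MPD>"""


def list_representations(mpd_text: str) -> list[tuple[str, int, str]]:
    """Return (id, bandwidth_bps, codecs) for every Representation in the MPD."""
    root = ET.fromstring(mpd_text)
    result = []
    for aset in root.iterfind("mpd:Period/mpd:AdaptationSet", NS):
        for rep in aset.iterfind("mpd:Representation", NS):
            # codecs may be declared on the AdaptationSet instead of each Representation.
            codecs = rep.get("codecs") or aset.get("codecs", "")
            result.append((rep.get("id"), int(rep.get("bandwidth")), codecs))
    return result


if __name__ == "__main__":
    for rep_id, bps, codecs in list_representations(MPD_XML):
        print(f"{rep_id}: {bps / 1e6:.1f} Mbps, codecs={codecs}")
```

A DASH player reads the `codecs` strings, keeps only the renditions the device can decode, and then applies the same kind of bandwidth-based ABR choice as HLS.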

However, although MPEG-DASH is an international standard (ISO/IEC 23009-1), Apple devices do not support it natively, so videos delivered with MPEG-DASH cannot be played natively on iPhones and MacBooks. Apple has not made HLS an international standard. Even so, because HLS is the only streaming format Apple devices support natively, HLS currently holds an overwhelming share among streaming protocols.


in Software, Web Service, Posted by log1i_yk