Basic knowledge for handling video digitally: how are video and audio processed on a PC?

Advances in technology have made it possible to enjoy movies and TV shows on smartphones anytime, anywhere. Anyone can easily edit a video and publish it on online sharing services such as YouTube and Vimeo. 'Mux', which develops a video player that runs on web pages, explains the basic knowledge required to process video digitally.

HowVideo.works

◆ Video

When humans see an image, it lingers in perception for a moment as an afterimage. Video works on the principle that if you are shown an image slightly different from the previous one while the afterimage remains, you perceive it as motion.

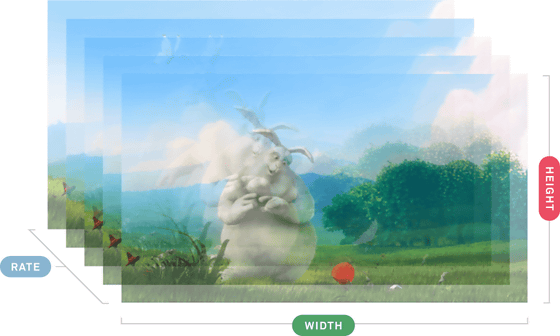

Each still image that makes up a video is called a 'frame'. The unit 'fps', which often appears in the world of video, stands for 'frames per second' and expresses the 'frame rate', the number of consecutive images shown per second. 30fps and 60fps are common in general, and 24fps is the standard often used in movies.

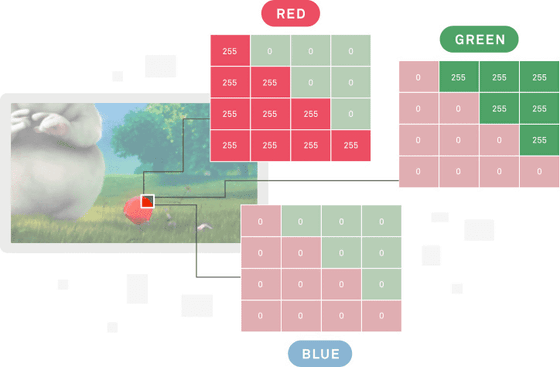

To represent a frame as digital information, we use small dots called 'pixels'. Each pixel has

In addition, the number of pixels that make up a frame determines the amount of information in the frame. This is the 'resolution', expressed as the number of horizontal pixels x the number of vertical pixels. For example, in a video with a resolution of 1920 x 1080 pixels, each frame contains 1920 pixels wide x 1080 pixels high = 2,073,600 pixels.

The video data size can be calculated from the resolution, frame rate, color depth, and video length. For example, for a 5-second video with a resolution of 1920 x 1080 pixels, a color depth of 8 bits for each of three color channels (3 bytes per pixel), and a frame rate of 60fps, the data size is 1920 x 1080 pixels x 3 bytes x 60fps x 5 seconds = 1,866,240,000 bytes (about 1.74 GiB).
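The calculation above can be sketched in a few lines of Python. The function name `raw_video_size_bytes` and its parameter names are chosen here purely for illustration, and the sketch assumes 3 bytes per pixel, as in the example.

```python
def raw_video_size_bytes(width, height, bytes_per_pixel, fps, seconds):
    """Uncompressed size: pixels per frame x bytes per pixel x number of frames."""
    pixels_per_frame = width * height
    total_frames = fps * seconds
    return pixels_per_frame * bytes_per_pixel * total_frames


size = raw_video_size_bytes(1920, 1080, 3, 60, 5)
print(size)                       # 1866240000
print(round(size / 1024**3, 2))   # 1.74 (GiB)
```

Doubling any one input (for example 60fps to 120fps) doubles the result, which is why uncompressed video grows so quickly.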

◆ Audio

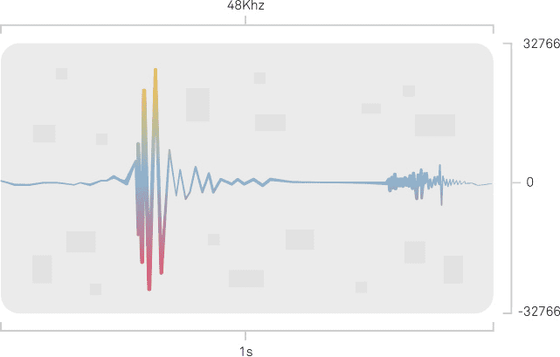

We perceive the sound that accompanies a video when our ears pick up vibrations in the air. In other words, sound can be expressed as waves. However, to digitize audio, the continuous wave must be converted into discrete numbers.

Therefore, the audio wave is sampled at regular intervals, and each sample is quantized to the closest representable value. The 'sampling frequency' indicates how many samples are taken per second. For example, the sampling frequency used on CDs is 44.1kHz, which means that the sound wave is converted into 44,100 samples per second. The sampling frequency is, so to speak, the frame rate of audio: the higher the sampling frequency, the smoother the sound and the higher the sound quality.

Furthermore, the amount of information in a single sample is the 'bit depth'. Bit depth is, so to speak, the resolution of sound: the higher the bit depth, the more finely the sound can be expressed and the higher the sound quality.
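As a rough illustration of sampling and quantization, the following Python sketch samples a pure sine tone at regular intervals and rounds each sample to the nearest integer level allowed by the bit depth. The function name, the 440Hz tone, and the use of signed integer levels are assumptions made for this example only.

```python
import math


def sample_and_quantize(freq_hz, sample_rate_hz, bit_depth, duration_s):
    """Sample a sine wave at regular intervals and quantize each sample."""
    max_level = 2 ** (bit_depth - 1) - 1   # e.g. 32767 for signed 16-bit audio
    n_samples = int(sample_rate_hz * duration_s)
    samples = []
    for n in range(n_samples):
        t = n / sample_rate_hz                        # time of the n-th sample
        value = math.sin(2 * math.pi * freq_hz * t)   # continuous wave, -1.0 to 1.0
        samples.append(round(value * max_level))      # nearest representable level
    return samples


pcm = sample_and_quantize(freq_hz=440, sample_rate_hz=44_100, bit_depth=16, duration_s=1)
print(len(pcm))   # 44100 samples for one second of audio
```

A higher `sample_rate_hz` produces more samples per second, and a higher `bit_depth` gives each sample more possible levels.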

The audio data size is determined by the number of audio channels, sampling frequency, bit depth, and audio length. For example, for 5 seconds of 5.1ch surround audio (6 channels) with a sampling frequency of 48kHz and a bit depth of 16 bits (2 bytes), the data size is 6 channels x 48,000Hz x 2 bytes x 5 seconds = 2,880,000 bytes (about 2.75 MiB).
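The same arithmetic as a small Python sketch. The function name `raw_audio_size_bytes` is hypothetical, and the sketch assumes the bit depth is a whole number of bytes.

```python
def raw_audio_size_bytes(channels, sample_rate_hz, bit_depth, seconds):
    """Uncompressed size: channels x samples per second x bytes per sample x seconds."""
    bytes_per_sample = bit_depth // 8
    return channels * sample_rate_hz * bytes_per_sample * seconds


size = raw_audio_size_bytes(channels=6, sample_rate_hz=48_000, bit_depth=16, seconds=5)
print(size)                       # 2880000
print(round(size / 1024**2, 2))   # 2.75 (MiB)
```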

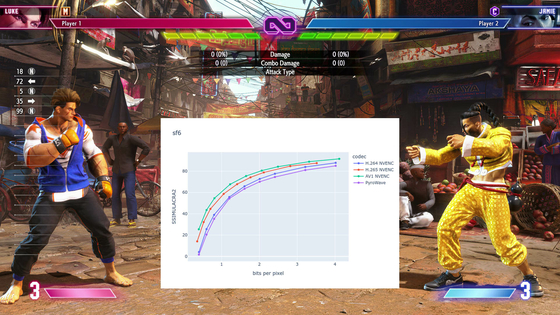

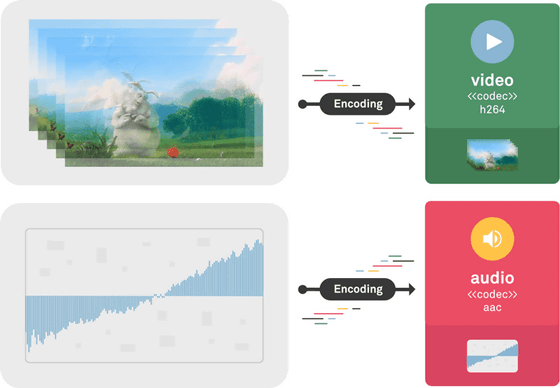

◆ Codec

Digitizing the images and the audio in this way lets you handle video as digital data. However, if the data is kept as is, the file size becomes enormous. This is where the 'codec', a scheme for compressing video and audio, becomes important. Compressing uncompressed data with a codec is called 'encoding'.

Restoring the compressed data so that it can be played back is called 'decoding'. To decode video and audio encoded with a specific codec, the playback device must support that codec.

There are three types of codecs: uncompressed, lossless compression, and lossy compression. An uncompressed codec stores the original data as is, so playback matches the original, but the file size is large.

A lossless compression codec reduces the file size while still allowing the compressed data to be restored exactly to the original. Because nothing is discarded, decoding and re-encoding does not in itself degrade the video. However, the compression ratio is not that large.
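A minimal sketch of a lossless round trip, using Python's general-purpose `zlib` module as a stand-in. Note that `zlib` is not a video codec, and the repetitive byte pattern below is made-up test data rather than real media.

```python
import zlib

# Stand-in for raw media data: a highly repetitive byte pattern.
original = bytes([10, 20, 30, 40]) * 10_000

compressed = zlib.compress(original)
restored = zlib.decompress(compressed)

print(len(original), len(compressed))   # the compressed form is much smaller
print(restored == original)             # True
```

In this sketch the decompressed bytes compare equal to the input, which is the property that separates lossless from lossy compression.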

A lossy compression codec prioritizes the convenience and compatibility of video, and achieves high compression by discarding or simplifying part of the data. However, the quality discarded in this way is lost for good and cannot be restored. Each time encoding is repeated, more of the video's quality is lost.
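To illustrate why lossy compression cannot be undone, here is a toy Python sketch that keeps only which 16-wide bucket each 8-bit sample falls into. It is a deliberately simplified stand-in, not how any real video codec works, and the function names and sample values are hypothetical.

```python
def lossy_encode(samples, step):
    """Keep only which `step`-sized bucket each sample falls into."""
    return [s // step for s in samples]


def lossy_decode(codes, step):
    """Reconstruct each sample as the middle of its bucket."""
    return [c * step + step // 2 for c in codes]


original = [0, 3, 17, 100, 101, 255]
decoded = lossy_decode(lossy_encode(original, step=16), step=16)
print(decoded)   # [8, 8, 24, 104, 104, 248]
```

The values 100 and 101 both come back as 104, so no decoder can tell afterwards which one the original was.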

◆ Container

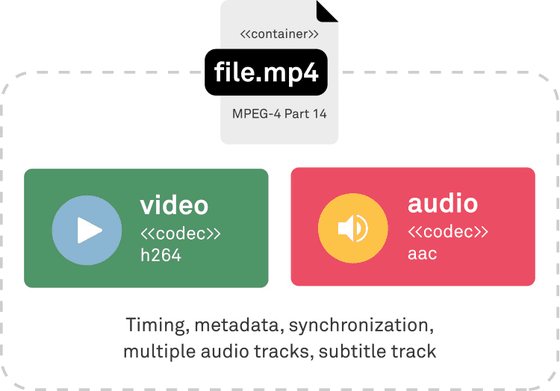

The work doesn't end with encoding video and audio: the compressed video and audio need to be combined into a single file. A file format that stores multiple kinds of data together is called a 'container'. This container stores not only video and audio, but also synchronization information,

Not everything can be stored in a container, limited by codec patents and availability, playback device specs, recording media types, and standards such as

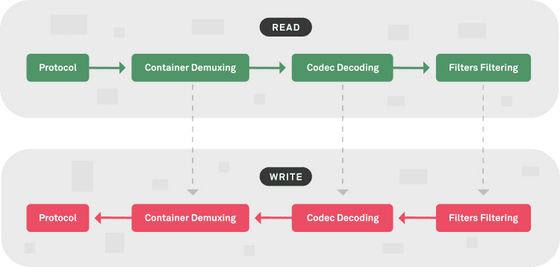

When playing a video stored in a container, the media is read from a local file or via a protocol such as HTTP, the data stored in the container is separated back into its video and audio streams, and those streams are decoded with the codec and played.

◆ Transcoding, transrating, transsizing

'Transcoding' is the process of converting a video from one codec to another for reasons such as compatibility and storage costs. For example, transcoding to convert an MP4 format video file containing

'Transrating' is changing only the bit rate, without changing the codec of the video, in order to reduce the file size. The bit rate is the amount of data per second, so lowering it also reduces the file size. Transrating makes playback possible on poor network connections and low-spec devices, and it is also more economical because it reduces storage and bandwidth requirements.
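Because the bit rate is the amount of data per second, its effect on file size can be estimated with simple arithmetic. In the Python sketch below, the function name and the 5 Mbps and 1 Mbps figures are hypothetical values picked for illustration.

```python
def size_from_bitrate_bytes(bitrate_bps, seconds):
    """Bits per second x duration, converted from bits to bytes."""
    return bitrate_bps * seconds // 8


one_hour = 60 * 60
print(size_from_bitrate_bytes(5_000_000, one_hour))   # 2250000000
print(size_from_bitrate_bytes(1_000_000, one_hour))   # 450000000
```

Under these assumptions, cutting the bit rate to one fifth cuts the size of an hour of video from about 2.25 billion bytes to about 450 million bytes.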

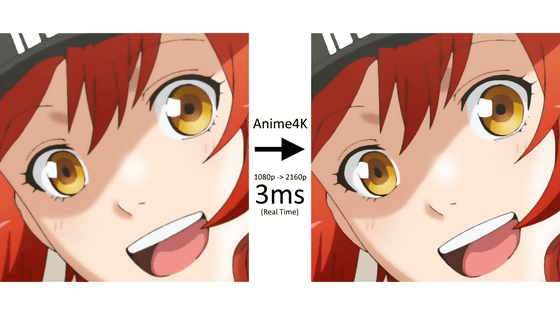

'Transsizing' is changing the resolution of the video. Increasing the resolution is called up-conversion, and decreasing it is called down-conversion. With up-conversion, simply increasing the resolution does not add detail, so the image quality stays low and the picture looks blurry, but technologies such as 'Anime4K' that increase the resolution while maintaining the image quality have also appeared.
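The simplest form of up-conversion can be sketched as nearest-neighbor scaling, where each pixel is just repeated. This Python example is an illustrative assumption and is unrelated to how Anime4K works. The frame is modeled as a list of rows of pixel values.

```python
def upscale_nearest(frame, factor):
    """Enlarge a frame (a list of pixel rows) by repeating each pixel `factor` times."""
    out = []
    for row in frame:
        wide_row = [pixel for pixel in row for _ in range(factor)]
        out.extend([list(wide_row) for _ in range(factor)])
    return out


frame = [[1, 2],
         [3, 4]]
print(upscale_nearest(frame, 2))
# [[1, 1, 2, 2], [1, 1, 2, 2], [3, 3, 4, 4], [3, 3, 4, 4]]
```

The output has four times as many pixels but still only four distinct values, which is why a naive resolution increase does not improve image quality.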

◆ Packaging

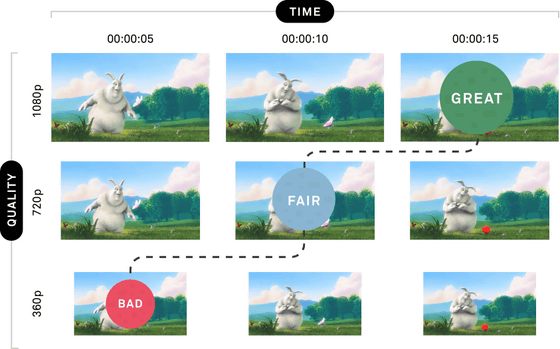

In recent years, instead of downloading an entire video and then playing it, streaming, which plays the video while receiving data over the Internet, has become mainstream. However, some playback devices have low performance and cannot process complex video and audio. In addition, because the available bandwidth varies with the user's network environment, it may take time to download the content.

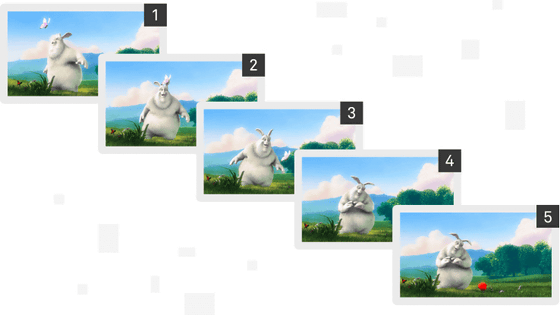

That's where the technology called 'adaptive bit rate (ABR) streaming' comes in. It lets a device receive a single video while the bit rate is switched automatically. For example, to stream an hour of video with ABR streaming, the video is divided into segments a few seconds long, and each segment is prepared at several resolutions. If the receiving device can no longer keep up with the media, the bit rate switches dynamically. Even if the network environment becomes poor, playback automatically switches to a lower resolution, so viewing is less likely to be interrupted. 'Packaging' is the process that enables this ABR streaming.
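The switching decision can be sketched as follows. This is a simplified illustration, not how Mux or any particular player chooses a rendition. The rendition list, the `pick_rendition` name, and the 0.8 safety factor are all assumptions made for this example.

```python
# Hypothetical renditions: (height in pixels, bit rate in bits per second).
RENDITIONS = [(1080, 5_000_000), (720, 2_500_000), (480, 1_000_000), (240, 400_000)]


def pick_rendition(measured_bandwidth_bps, renditions=RENDITIONS, safety=0.8):
    """Pick the highest-bit-rate rendition that fits within a share of the bandwidth."""
    budget = measured_bandwidth_bps * safety
    fitting = [r for r in renditions if r[1] <= budget]
    if fitting:
        return max(fitting, key=lambda r: r[1])
    return min(renditions, key=lambda r: r[1])   # nothing fits: use the lowest


for bandwidth in (8_000_000, 3_000_000, 300_000):
    print(bandwidth, pick_rendition(bandwidth))
```

A player would repeat a decision like this for each segment, so the resolution can drop when the measured bandwidth falls and rise again when it recovers.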

In addition to the work done to improve compatibility, Mux does much more complicated work to improve the user experience and to increase its benefits. Processing digital media takes time and requires hardware, so a balance has to be struck between the costs in time and money.

in Software, Posted by log1i_yk