OpenAI, in collaboration with MIT, publishes first study on how ChatGPT usage affects emotional well-being

OpenAI has collaborated with the Massachusetts Institute of Technology (MIT) Media Lab to study how AI chatbots like ChatGPT can impact people's social and emotional well-being.

Early methods for studying affective use and emotional well-being on ChatGPT | OpenAI

https://openai.com/index/affective-use-study/

Investigating Affective Use and Emotional Well-being on ChatGPT

(PDF file)

https://cdn.openai.com/papers/15987609-5f71-433c-9972-e91131f399a1/openai-affective-use-study.pdf

How AI and Human Behaviors Shape Psychosocial Effects of Chatbot Use: A Longitudinal Controlled Study — MIT Media Lab

https://www.media.mit.edu/publications/how-ai-and-human-behaviors-shape-psychosocial-effects-of-chatbot-use-a-longitudinal-controlled-study/

ChatGPT is not designed to replace or mimic human relationships, although some users do use it that way. To help build AI chat platforms that support safe and healthy interactions, researchers from OpenAI and the MIT Media Lab collaborated to study how emotionally engaged use of AI affects user well-being.

The research team conducted two parallel studies using different approaches.

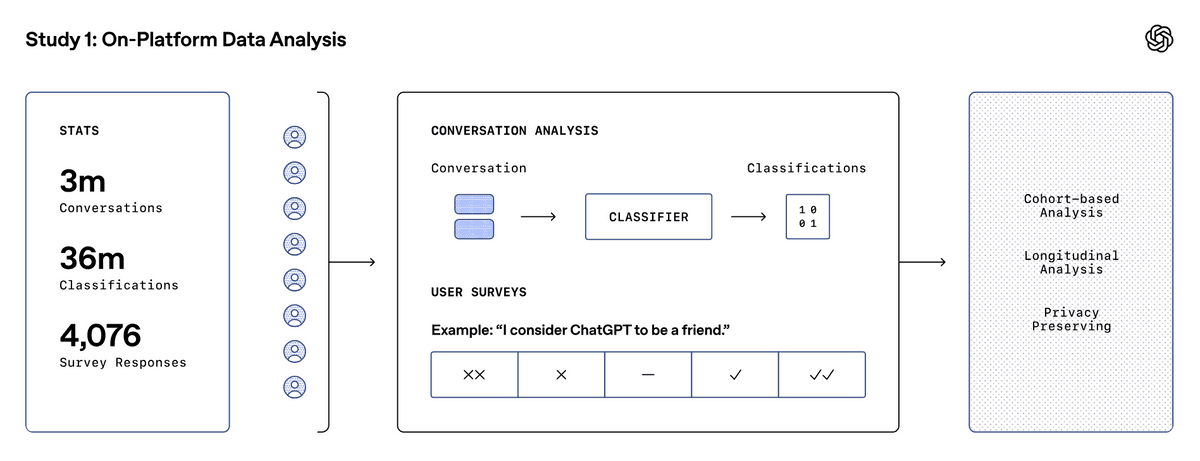

◆ Study 1: An observational study analyzing how AI is actually used on the platform

The OpenAI research team examined real-world usage by automatically analyzing nearly 40 million ChatGPT interactions and by surveying targeted users. Correlating users' self-reported feelings about ChatGPT with attributes of their conversations gave the team a deeper understanding of emotional usage patterns.

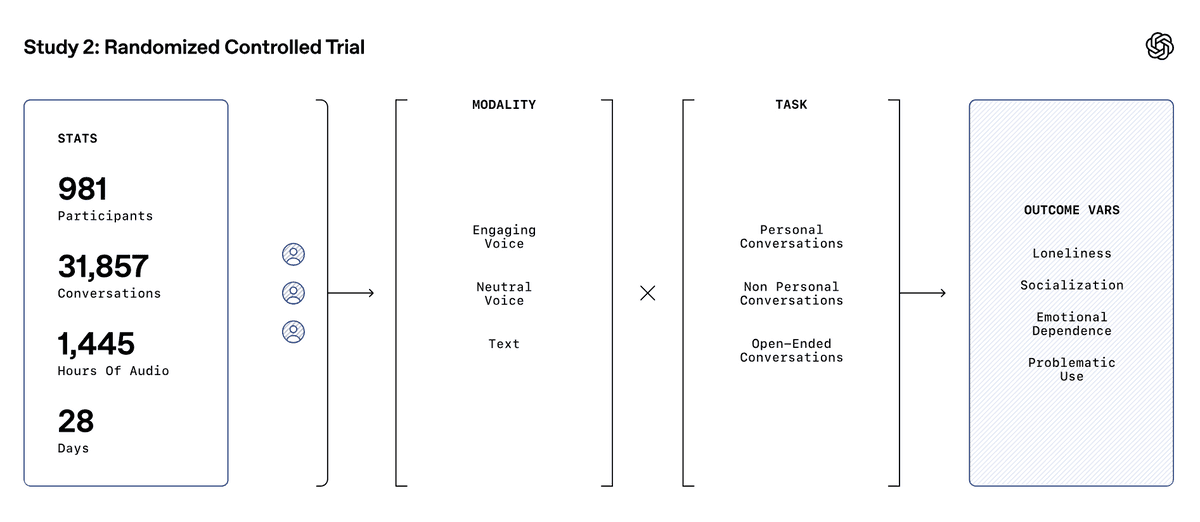

◆ Study 2: A controlled intervention study to understand the impact on users

The MIT Media Lab team conducted a randomized controlled trial (RCT) with about 1,000 participants over a four-week period. The intervention provided insight into how specific platform features, such as the model's personality and modality, and types of usage causally relate to users' self-reported psychosocial states.

◆ Results

Analysis of actual ChatGPT usage showed that emotional interactions are quite rare. Even among heavy users who use ChatGPT frequently, only a few use it emotionally. However, these few users account for the majority of emotional interactions with ChatGPT, and a high percentage of them consider ChatGPT to be a friend.

The results were not clearly influenced by whether the interaction was voice or text, or by the tone of the voice. However, using ChatGPT in voice mode for short periods was found to increase the user's sense of happiness, while using it for long periods every day decreased it. In addition, people who tend to form stronger attachments in relationships, and who view AI as a friend that can be integrated into their private life, are more likely to experience negative effects from using chatbots.

Furthermore, talking to ChatGPT about one's inner thoughts and personal experiences tends to increase feelings of loneliness while decreasing emotional dependence and problematic use. On the other hand, non-personal conversations, such as discussing specific topics or facts, tend to increase emotional dependence, especially among heavy users.

The research team called the results 'an important first step in understanding the impact of advanced AI models on human experience and well-being,' but cautioned against generalizing them, because doing so could obscure the nuances of findings that highlight the interactions between humans and AI systems. The team also said that combining research methods gave them a more complete picture, and that 'it helped identify areas where further research is needed.'

Further details of the two studies can be found in a paper by the OpenAI team and a paper by the MIT Media Lab team.

in AI, Note, Web Service, Posted by log1d_ts