Bluesky users surged from 2.89 million to 25.94 million in 2024, moderators deleted 66,308 accounts, and legal requests came in from countries including Japan: a summary of the moderation report

In February 2024, Bluesky abolished the invitation system and allowed anyone to register as a user, and the number of users increased from 2.89 million to 25.94 million in one year. As the number of users increases, harmful content also increases, so Bluesky has revealed that it has

Bluesky 2024 Moderation Report - Bluesky

https://bsky.social/about/blog/01-17-2025-moderation-2024

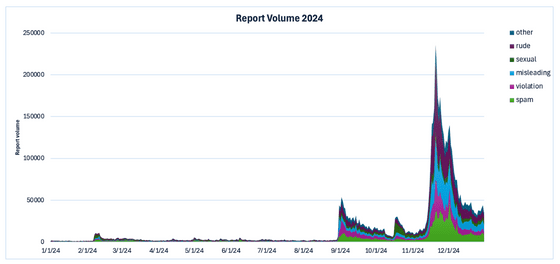

Bluesky's moderation team works 24/7 to review user reports and remove harmful content from the platform. According to the Trust & Safety team, users submitted 6.48 million reports in 2024, a significant increase from 358,000 the previous year.
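To put these figures in perspective, the following minimal sketch computes the year-over-year multiples from the numbers quoted in this article. The variable and function names are illustrative only, and the inputs are the rounded figures given here, so the results are approximate.

```python
# Figures as quoted in the article (already rounded by the source).
users_start = 2_890_000    # users before the 2024 growth
users_end = 25_940_000     # users one year later
reports_2023 = 358_000     # user reports submitted in 2023
reports_2024 = 6_480_000   # user reports submitted in 2024


def growth_multiple(before: float, after: float) -> float:
    """Return how many times larger `after` is than `before`."""
    return after / before


user_growth = growth_multiple(users_start, users_end)
report_growth = growth_multiple(reports_2023, reports_2024)

print(f"Users grew about {user_growth:.1f}x")
print(f"Reports grew about {report_growth:.1f}x")
```

On these rounded figures, reports grew roughly twice as fast as the user base (about 18x versus about 9x).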

In late August 2024, a surge of new users from Brazil pushed report volume to as many as 50,000 per day. Until then, the moderation team had been able to process most reports within 40 minutes, but the surge left it unable to keep up, so Bluesky expanded its Portuguese-language moderation team and, for the first time, hired moderators through an external contractor.

In response to the surge in users from Brazil, Bluesky strengthened its existing automated spam detection and invested in further automation. In December, it said automation had helped with certain content categories, such as 'impersonation.' The automated tools also produced false positives, which human moderators reviewed.

The percentage of users reporting content fell slightly between 2023 and 2024: 5.6% of active users made at least one report in 2023, compared with approximately 4.57% in 2024. The share of active users who received at least one report also fell, from 3.4% in 2023 to 2.97% in 2024.

The majority of the reports (3.5 million) were about individual posts, followed by 47,000 about account profiles, 45,000 about lists, and 17,700 about DMs.

Users reported content for a variety of reasons, including 1.75 million reports of anti-social behavior, such as harassment and trolling; 1.2 million reports of misleading content, such as impersonation and misinformation; 1.4 million reports of spam, such as excessive replies and repetitive content; 933,000 reports of content that clearly violated the law or terms of use; 630,000 reports of sexual content that was not properly labelled, such as nudity or adult content; and 726,000 reports of other content.
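As a rough illustration of how these categories compare, the sketch below converts the quoted counts into percentage shares. The dictionary keys are shortened labels chosen for this example. Note that the rounded category figures add up to about 6.64 million, slightly more than the 6.48 million total quoted earlier; the article does not explain the difference, so the shares here are computed against the sum of the categories.

```python
# Report counts by reason, as quoted in the article (rounded by the source).
reports_by_reason = {
    "anti-social behavior": 1_750_000,
    "spam": 1_400_000,
    "misleading content": 1_200_000,
    "illegal content or terms violations": 933_000,
    "other": 726_000,
    "unlabelled sexual content": 630_000,
}

# Use the sum of the categories as the denominator so the shares total 100%.
total = sum(reports_by_reason.values())

shares = {
    reason: 100 * count / total
    for reason, count in reports_by_reason.items()
}

# Print the categories from largest to smallest share.
for reason, pct in sorted(shares.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{reason:<38}{pct:5.1f}%")
```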

Bluesky labels posted content and accounts with an accuracy rate of 99.90%. Most of this labeling is automated, and 5.5 million labels were applied to individual posts and accounts in 2024. In November 2024 alone, 2.5 million videos and 36.14 million images were posted to Bluesky.

As a result, in 2024, Bluesky moderators removed 66,308 accounts, while automated tools removed 35,842 spam and bot accounts. In addition, moderators removed 6,334 posts, lists, feeds, etc., while automated tools removed 282.

In 2024 alone, 93,076 users filed at least one appeal against Bluesky's moderation. In most cases, users appealed because they disagreed with a labeling decision.

According to the Trust & Safety team, at the time of this announcement, appeals for deleted accounts can be submitted via email, but in the future, appeals will be possible directly within the app.

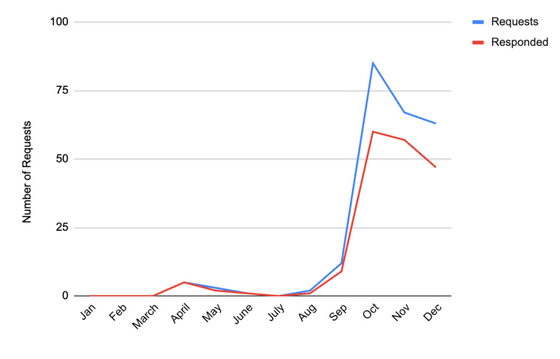

Additionally, in 2024 alone, Bluesky received 238 requests from law enforcement agencies, governments and law firms, responded to 182, and complied with 146. The majority of requests came from law enforcement agencies in Germany, the United States, Brazil and Japan. These legal demands peaked between September and December 2024.
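The legal request figures can be expressed as rates, as in the short sketch below. It assumes that the requests Bluesky complied with are a subset of those it responded to, which in turn are a subset of those received; the article implies this but does not state it outright. The variable names are illustrative.

```python
# Legal request counts for 2024, as quoted in the article.
received = 238
responded = 182
complied = 146


def percent(part: int, whole: int) -> float:
    """Return `part` as a percentage of `whole`."""
    return 100 * part / whole


response_rate = percent(responded, received)           # responded / received
compliance_rate = percent(complied, received)          # complied / received
complied_of_responded = percent(complied, responded)   # complied / responded

print(f"Responded to {response_rate:.1f}% of requests received")
print(f"Complied with {compliance_rate:.1f}% of requests received")
print(f"Complied with {complied_of_responded:.1f}% of requests it responded to")
```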

In addition, Bluesky received a total of 937 copyright and trademark cases in 2024. Only four copyright-related actions were identified in the entire first half of 2024, but the number rose to 160 in September. Bluesky made its copyright infringement claim form available in the second half of 2024, making its handling of reports more systematic.

The harmful content also included child sexual abuse material (CSAM). Like some other internet services, Bluesky subscribes to 'hashes' (fingerprint-like identifiers) of known CSAM, which are recorded for regulatory purposes, and uses them to detect such material. When an image or video uploaded to Bluesky matches one of the hashes, it is immediately deleted from Bluesky without any human viewing the content.
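The general idea of hash matching can be sketched as follows. This is a simplified illustration, not Bluesky's implementation: the article does not say which hashing scheme or hash provider Bluesky uses, and production systems typically rely on perceptual hashes that still match after resizing or re-encoding. Here an exact SHA-256 digest stands in for the fingerprint, and `HashBlocklist` and `handle_upload` are hypothetical names invented for this example.

```python
import hashlib


def fingerprint(data: bytes) -> str:
    """Return a hex SHA-256 digest, used here as a stand-in fingerprint."""
    return hashlib.sha256(data).hexdigest()


class HashBlocklist:
    """Hypothetical lookup table of fingerprints for known prohibited files."""

    def __init__(self, known_hashes):
        self._known = set(known_hashes)

    def matches(self, upload: bytes) -> bool:
        # Only the fingerprint is compared; the content itself is never displayed.
        return fingerprint(upload) in self._known


def handle_upload(upload: bytes, blocklist: HashBlocklist) -> str:
    """Decide what to do with an upload based solely on the hash lookup."""
    if blocklist.matches(upload):
        return "removed"
    return "accepted"


# Placeholder bytes stand in for real files in this example.
flagged = b"placeholder bytes for a known prohibited file"
blocklist = HashBlocklist({fingerprint(flagged)})

print(handle_upload(flagged, blocklist))
print(handle_upload(b"an unrelated file", blocklist))
```

Because the decision depends only on a set lookup, a match can be acted on automatically, which is consistent with the article's point that no human needs to view the content.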

In 2024, Bluesky submitted 1,154 reports of CSAM to the National Center for Missing and Exploited Children. The Trust & Safety team said, 'CSAM is a serious issue on any social networking site. Child safety has also become more complex as the number of users has increased dramatically. Cases reported by Bluesky include accounts linking off-platform to sell CSAM, potential minors trying to sell explicit images, and pedophiles trying to share encrypted chat links. In these cases, we quickly updated our internal guidance to our moderation team to ensure we could act quickly when removing activity.'

in Web Service, Posted by log1p_kr