OpenAI's crawler bot takes down 3D scan data sales site with thorough scraping that's almost like a DDoS attack

It turns out that a website called '

How OpenAI's bot crushed this seven-person company's website 'like a DDoS attack' | TechCrunch

https://techcrunch.com/2025/01/10/how-openais-bot-crushed-this-seven-person-companys-web-site-like-a-ddos-attack/

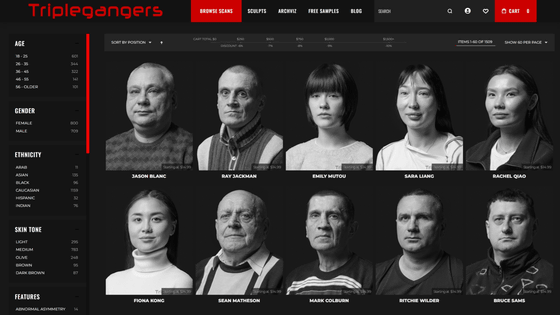

Triplegangers is a website that has been selling 3D scan data for over 10 years. The data they handle is divided into categories such as 'face', 'full body', 'full body with pose', 'full body couple', 'hand', 'full body clothed', 'hand statue', and 'face statue'.

For example, at the time of writing, there is data for 1,509 people in the 'face' category, with around 20 different facial expressions registered for each person. In other categories, at least three images are registered for each product, so the total data is said to be hundreds of thousands of points.

High-Resolution Face Models, 21 FACS expressions each. | Triplegangers

https://triplegangers.com/browse/scans/faces

Triplegangers CEO Oleksandr Tomchuk told the news site TechCrunch that he had received a warning that the site was down, so he investigated and discovered that an OpenAI bot had attempted to scrape the entire Triplegangers site, downloading all of its hundreds of thousands of pieces of material.

They quickly determined that the site was down due to an OpenAI bot, but they said the bot was using 600 different IP addresses and they still don't know when it started scraping.

'OpenAI's crawler took down our site, it was essentially a DDoS attack,' Tomczuk said.

In addition, Triplegangers' terms of use page states that 'you may not collect data through indexing, scraping, data mining, or other methods using bots or search apps without our prior consent.' However, TechCrunch points out that the robots.txt file, which tells crawler bots whether they can index or crawl, did not contain appropriate information about OpenAI's bots.

OpenAI announced 'GPTBot,' a web crawler for collecting data to improve large-scale language models, in August 2023 and also published how to block it.

On the other hand, TechCrunch also points out that 'OpenAI's bots include ChatGPT-User and OAI-SearchBot,' and that 'robots.txt is not absolute.'

For example, the search engine Perplexity has been accused of '

In response to the criticism that 'Perplexity's AI ignores robots.txt that blocks crawlers,' CEO claims that 'we don't ignore it, but we rely on third-party crawlers' - GIGAZINE

In addition, there have been reports of an AI company called Anthropic receiving 1 million hits in 24 hours because its anti-crawler protection measures were out of date.

By the way, TechCrunch reached out to OpenAI about this matter but did not receive a response.

Related Posts:

in Web Service, Posted by logc_nt