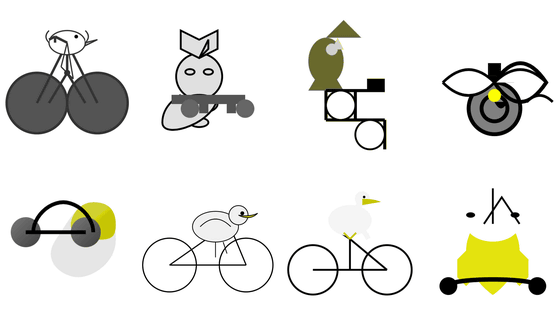

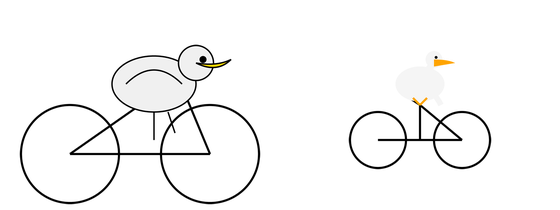

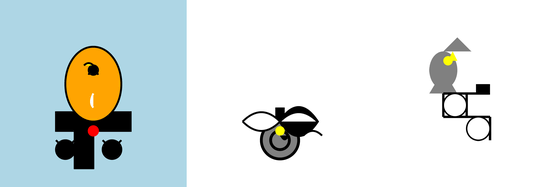

What happens when you ask GPT-4o or Google Gemini to draw a 'pelican riding a bicycle' in SVG

Companies including OpenAI, Google, Anthropic, and Meta are developing large language models, and benchmarks are used to compare how the models from each company perform. Engineer Simon Willison has devised a benchmark of his own: asking a model to draw a pelican riding a bicycle.

Pelicans on a bicycle

pelican-bicycle/README.md at main · simonw/pelican-bicycle · GitHub

https://github.com/simonw/pelican-bicycle/blob/main/README.md

Willison says he chose a pelican on a bicycle partly because he likes pelicans. He also says there are probably no existing SVG files of a pelican riding a bicycle, so he is fairly confident the task cannot already be in the models' training data.

The benchmark itself is simple: give the model the prompt 'Generate an SVG of a pelican riding a bicycle.'

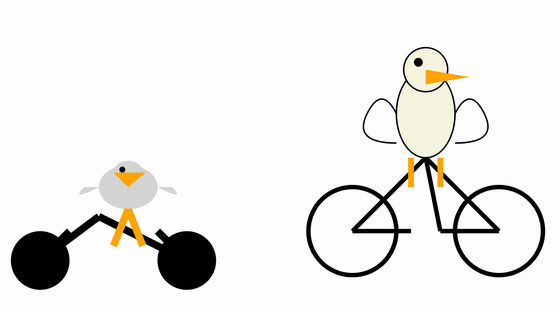

Below are the pelicans on bicycles that Anthropic's Claude 3.5 Sonnet produced when Willison ran the benchmark. The image on the left was generated on June 20, 2024, and the one on the right on October 22, 2024.

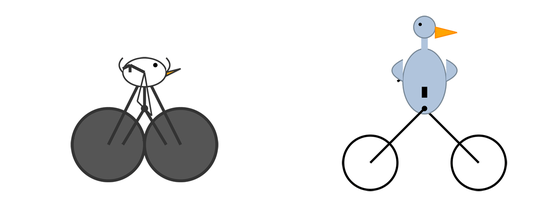

Google's Gemini 1.5 Flash 001 (left) and Gemini 1.5 Flash 002 (right)

GPT-4o mini (left) and GPT-4o (right)

OpenAI's o1-mini (left) and o1-preview (right)

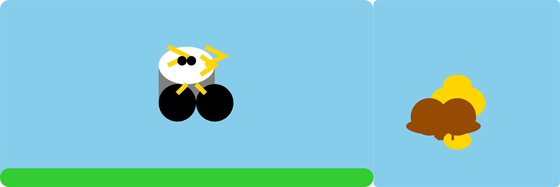

Llama 3.1 70B (left) and Llama 3.1 8B (right), both running on Cerebras

Willison also posted on X (formerly Twitter) videos of a pelican riding a bicycle generated by Veo 2, Google's video generation AI. The large language models above were asked for SVG output, so their results were geometric drawings; Veo 2, by contrast, generates videos that look almost like live-action footage.

Veo 2 did pretty well at 'A pelican riding a bicycle along a coastal path overlooking a harbor' - two of these videos have the pelican actually cycling! https://t.co/h9BaOWKbsa pic.twitter.com/Bx1ThtiHzn

— Simon Willison (@simonw) December 16, 2024
