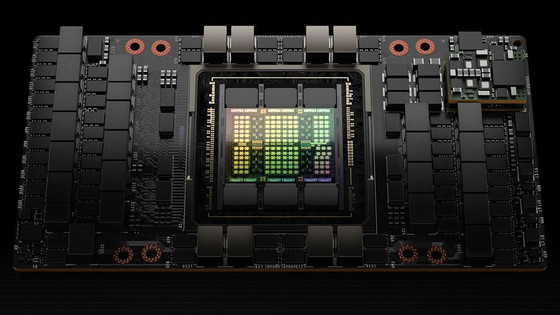

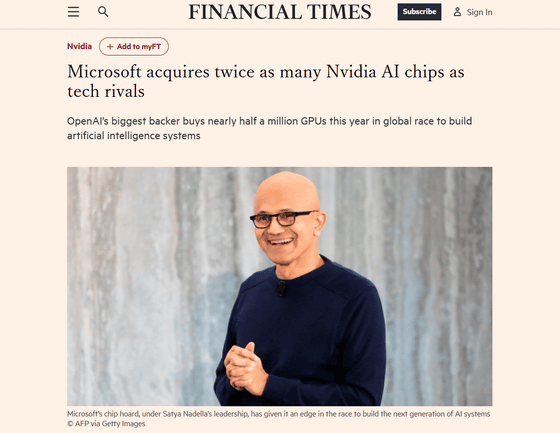

Microsoft has purchased 485,000 NVIDIA Hopper GPUs, roughly twice as many as any single rival. ByteDance and Tencent have each purchased around 230,000, and Meta around 225,000.

Microsoft, which has invested a cumulative $13 billion (approximately 2 trillion yen) in OpenAI, is far ahead of other companies in the number of NVIDIA GPUs it is buying in 2024. Its aggressive chip procurement is drawing attention as a bid to secure an advantage in next-generation AI development.

Microsoft acquires twice as many Nvidia AI chips as tech rivals

Microsoft bought twice as many Nvidia Hopper GPUs as other big tech companies - report - DCD

https://www.datacenterdynamics.com/en/news/microsoft-bought-twice-as-many-nvidia-hopper-gpus-as-other-big-tech-companies-report/

According to an analysis by technology consultancy Omdia, Microsoft is set to purchase 485,000 GPUs based on NVIDIA's Hopper architecture, such as the H100, in 2024. Meta, which is also developing AI aggressively, has procured 224,000, less than half as many as Microsoft. China's ByteDance and Tencent have each purchased approximately 230,000 NVIDIA GPUs, Amazon 196,000, and Google 169,000.
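A quick check of the Omdia figures cited above shows that Microsoft's total is roughly double that of each individual rival, not double their combined total. The following is a minimal Python sketch that uses only the numbers reported in this article; the ByteDance and Tencent figures are approximate, and the helper name `ratios_vs_leader` is purely illustrative.

```python
# Omdia's estimated 2024 Hopper GPU purchases, as reported in this article.
# ByteDance and Tencent figures are approximate ("around 230,000" each).
purchases = {
    "Microsoft": 485_000,
    "ByteDance": 230_000,
    "Tencent": 230_000,
    "Meta": 224_000,
    "Amazon": 196_000,
    "Google": 169_000,
}


def ratios_vs_leader(data: dict[str, int], leader: str) -> dict[str, float]:
    """Return how many times more GPUs `leader` bought than each other company."""
    lead = data[leader]
    return {name: lead / count for name, count in data.items() if name != leader}


if __name__ == "__main__":
    ratios = ratios_vs_leader(purchases, "Microsoft")
    # Print from the closest rival to the most distant one.
    for name, ratio in sorted(ratios.items(), key=lambda kv: kv[1]):
        print(f"Microsoft vs {name}: {ratio:.2f}x")
    # Sum of all rivals, to contrast with Microsoft's single total.
    rivals_total = sum(count for name, count in purchases.items() if name != "Microsoft")
    print(f"Rivals combined: {rivals_total:,}")
```

Every ratio comes out slightly above 2 (from about 2.11x against ByteDance and Tencent to about 2.87x against Google), while the five rivals together bought about 1.05 million GPUs, more than Microsoft alone.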

According to Omdia, global server investment is expected to reach $229 billion (approximately 35.4 trillion yen) in 2024, 43% of which is going to NVIDIA. Microsoft, known for its huge investment in OpenAI, is accelerating its data center build-out to run its AI assistant Copilot and to serve customers through its cloud computing service Azure. Its chip purchases in 2024 are more than three times its 2023 total.

Other tech companies are also developing their own AI chips. Meta and Google have each deployed around 1.5 million in-house chips, and Amazon has deployed around 1.3 million of its own Trainium and Inferentia chips in an effort to reduce its reliance on NVIDIA.

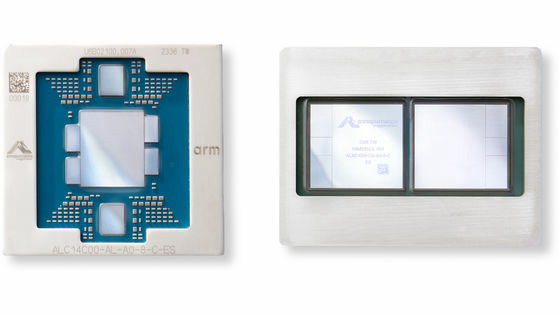

However, Microsoft lags behind in developing and deploying in-house AI accelerators that can compete with NVIDIA's. Its proprietary AI chip, 'Maia 100,' was announced in November 2023 and began deployment in Microsoft's own data centers in 2024, but at the time of writing only about 200,000 units have been deployed.

Alistair Speirs, senior director of Azure Global Infrastructure at Microsoft, told the Financial Times, 'Good data center infrastructure is a very complex, capital-intensive project. It takes multiple years of planning, and it's important to build in some buffer space for growth projections. In building AI infrastructure, it's not enough to just have the best chips. It's also important to have the right storage components, the right infrastructure, the right software layer, the right host management layer, error correction, and all the other components to build that system.'

Problems have also emerged with NVIDIA's next-generation chip, 'Blackwell.' According to market research firm TrendForce, NVIDIA postponed delivery of Blackwell after reports of overheating in data center racks housing 72 chips. In addition, the advanced design specifications of the Blackwell-based GB200 mean that optimizing and adjusting the supply chain will take time, and full-scale shipments are expected to slip to the second or third quarter of 2025.


in Hardware, Posted by log1i_yk