A list of AI inference performance figures for NVIDIA graphics cards and Apple chips, useful when deciding which graphics card or Mac to buy

Until recently, graphics cards were used mainly for 3D graphics workloads such as games, but they are now increasingly chosen for the purpose of 'running AI locally.' The webpage 'GPU-Benchmarks-on-LLM-Inference' summarizes inference performance for the large language model 'LLaMA 3' on a large number of NVIDIA graphics cards and Apple chips, so I have summarized its contents here.

GitHub - XiongjieDai/GPU-Benchmarks-on-LLM-Inference: Multiple NVIDIA GPUs or Apple Silicon for Large Language Model Inference?

https://github.com/XiongjieDai/GPU-Benchmarks-on-LLM-Inference

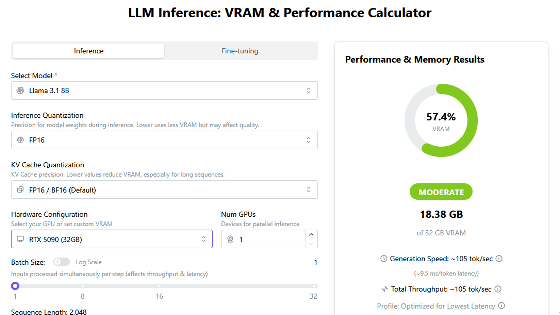

GPU-Benchmarks-on-LLM-Inference is a performance comparison page created by AI researcher Xiongjie Dai. It summarizes the number of tokens processed per second when running LLaMA 3 inference on various graphics cards and Apple chips. The benchmarks use 'llama.cpp' to run LLaMA 3 and cover four variants: the 8B-parameter model, the quantized 8B-parameter model, the 70B-parameter model, and the quantized 70B-parameter model. In the tables below, 'F16' columns are the unquantized models and 'Q4_K_M' columns are the quantized ones.

Below are the results for the gaming graphics cards in the comparison table, which are relatively easy to obtain. All values are tokens per second. The 'RTX 4090 24GB' showed the highest performance. Only three models, the 'RTX 3090 24GB', 'RTX 4080 16GB', and 'RTX 4090 24GB', could run LLaMA 3 8B without quantization. LLaMA 3 70B, on the other hand, did not run on any of these cards, even when quantized.

| GPU | 8B Q4_K_M | 8B F16 | 70B Q4_K_M | 70B F16 |
|---|---|---|---|---|
| RTX 3070 8GB | 70.94 | Out of memory | Out of memory | Out of memory |
| RTX 3080 10GB | 106.40 | Out of memory | Out of memory | Out of memory |
| RTX 3080 Ti 12GB | 106.71 | Out of memory | Out of memory | Out of memory |
| RTX 3090 24GB | 111.74 | 46.51 | Out of memory | Out of memory |
| RTX 4070 Ti 12GB | 82.21 | Out of memory | Out of memory | Out of memory |
| RTX 4080 16GB | 106.22 | 40.29 | Out of memory | Out of memory |
| RTX 4090 24GB | 127.74 | 54.34 | Out of memory | Out of memory |
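
The 'Out of memory' entries can be roughly anticipated from the size of the model weights alone. The following is a minimal sketch, not the method used on the benchmark page: it assumes nominal parameter counts of 8 billion and 70 billion, 16 bits per weight for F16, and about 4.8 bits per weight for Q4_K_M (an approximation chosen for illustration). It also treats a card's advertised '24GB' as 24 GiB and ignores the KV cache and other runtime overhead, so it is only a lower bound. The function names are hypothetical.

```python
GIB = 2**30  # bytes per GiB

# Assumed values for illustration; only the F16 width (16 bits) is exact.
BITS_PER_WEIGHT = {"F16": 16.0, "Q4_K_M": 4.8}
PARAMS = {"8B": 8e9, "70B": 70e9}  # nominal parameter counts


def weights_gib(n_params, bits_per_weight):
    """Approximate size of the model weights in GiB."""
    return n_params * bits_per_weight / 8 / GIB


def weights_fit(n_params, bits_per_weight, memory_gib):
    """True if the weights alone fit in the given memory (overhead ignored)."""
    return weights_gib(n_params, bits_per_weight) <= memory_gib


for size, n_params in PARAMS.items():
    for fmt, bits in BITS_PER_WEIGHT.items():
        print(f"{size} {fmt}: ~{weights_gib(n_params, bits):.1f} GiB of weights")
```

Under these assumptions, 8B F16 needs roughly 15 GiB, which matches the table: 12GB cards run out of memory while 16GB and 24GB cards do not. Quantized 70B needs roughly 39 GiB, more than any single card in the table above provides.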

The results for machines equipped with multiple graphics cards are shown below. Adding cards resolves the memory shortage, but the number of tokens processed per second does not change significantly.

| GPU | 8B Q4_K_M | 8B F16 | 70B Q4_K_M | 70B F16 |
|---|---|---|---|---|
| RTX 3090 24GB x 2 | 108.07 | 47.15 | 16.29 | Out of memory |
| RTX 3090 24GB x 4 | 104.94 | 46.40 | 16.89 | Out of memory |
| RTX 3090 24GB x 6 | 101.07 | 45.55 | 16.93 | 5.82 |
| RTX 4090 24GB x 2 | 122.56 | 53.27 | 19.06 | Out of memory |
| RTX 4090 24GB x 4 | 117.61 | 52.69 | 18.83 | Out of memory |
| RTX 4090 24GB x 8 | 116.13 | 52.12 | 18.76 | 6.45 |
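
To put a number on 'no significant difference', the multi-card results can be divided by the single-card results. The sketch below does this for the 8B Q4_K_M column only, using values copied from the tables in this article; the helper name `relative_throughput` is hypothetical.

```python
# Tokens per second for LLaMA 3 8B Q4_K_M, copied from the tables in this article.
single_card = {"RTX 3090 24GB": 111.74, "RTX 4090 24GB": 127.74}
# Largest multi-card configuration listed for each card: (card count, tokens/s).
multi_card = {"RTX 3090 24GB": (6, 101.07), "RTX 4090 24GB": (8, 116.13)}


def relative_throughput(single_tps, multi_tps):
    """Multi-card throughput as a fraction of single-card throughput."""
    return multi_tps / single_tps


for gpu, (count, tps) in multi_card.items():
    ratio = relative_throughput(single_card[gpu], tps)
    print(f"{gpu} x {count}: {ratio:.0%} of single-card throughput")
```

For both cards, the largest configuration delivers about 90% of the single-card figure on this model, so extra cards add memory here rather than speed.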

The results for professional graphics cards aimed at compute workloads are as follows:

| GPU | 8B Q4_K_M | 8B F16 | 70B Q4_K_M | 70B F16 |
|---|---|---|---|---|
| RTX 4000 Ada 20GB | 58.59 | 20.85 | Out of memory | Out of memory |
| RTX 4000 Ada 20GB x 4 | 56.14 | 20.58 | 7.33 | Out of memory |
| RTX 5000 Ada 32GB | 89.87 | 32.67 | Out of memory | Out of memory |
| RTX 5000 Ada 32GB x 4 | 82.73 | 31.94 | 11.45 | Out of memory |
| RTX A6000 48GB | 102.22 | 40.25 | 14.58 | Out of memory |
| RTX A6000 48GB x 4 | 93.73 | 38.87 | 14.32 | 4.74 |
| RTX 6000 Ada 48GB | 130.99 | 51.97 | 18.36 | Out of memory |
| RTX 6000 Ada 48GB x 4 | 118.99 | 50.25 | 17.96 | 6.06 |

The inference performance of each LLaMA 3 model on chips for high-performance computing and AI processing is as follows:

| GPU | 8B Q4_K_M | 8B F16 | 70B Q4_K_M | 70B F16 |
|---|---|---|---|---|
| A40 48GB | 88.95 | 33.95 | 12.08 | Out of memory |
| A40 48GB x 4 | 83.79 | 33.28 | 11.91 | 3.98 |
| L40S 48GB | 113.60 | 43.42 | 15.31 | Out of memory |
| L40S 48GB x 4 | 105.72 | 42.48 | 14.99 | 5.03 |
| A100 PCIe 80GB | 138.31 | 53.18 | 24.33 | Out of memory |
| A100 PCIe 80GB x 4 | 117.30 | 51.54 | 22.68 | 7.38 |
| A100 SXM 80GB | 133.38 | 53.18 | 24.33 | Out of memory |
| A100 SXM 80GB x 4 | 97.70 | 45.45 | 19.60 | 6.92 |
| H100 PCIe 80GB | 144.49 | 67.79 | 25.01 | Out of memory |
| H100 PCIe 80GB x 4 | 118.14 | 62.90 | 26.20 | 9.63 |

Finally, the inference performance of Macs equipped with Apple chips is as follows. Comparing the M2 Ultra (192GB) with the M3 Max (64GB) shows how much memory capacity matters for LLM workloads: only the M2 Ultra could run LLaMA 3 70B without quantization.

| GPU | 8B Q4_K_M | 8B F16 | 70B Q4_K_M | 70B F16 |
|---|---|---|---|---|
| M1 7-Core GPU 8GB | 9.72 | Out of memory | Out of memory | Out of memory |
| M1 Max 32-Core GPU 64GB | 34.49 | 18.43 | 4.09 | Out of memory |
| M2 Ultra 76-Core GPU 192GB | 76.28 | 36.25 | 12.13 | 4.71 |
| M3 Max 40-Core GPU 64GB | 50.74 | 22.39 | 7.53 | Out of memory |

Based on the performance comparison results, Dai concludes, 'If you want to save money, buy an NVIDIA gaming graphics card; for business use, buy a professional graphics card; and if you want power-saving, quiet operation, buy a Mac.'
