AI model 'Chameleon' developed to prevent unauthorized facial recognition

Researchers at the Georgia Institute of Technology have developed a technology called 'Chameleon' that applies a special privacy mask to facial photos, so that the people in them cannot be identified even when the photos are scanned by facial recognition systems.

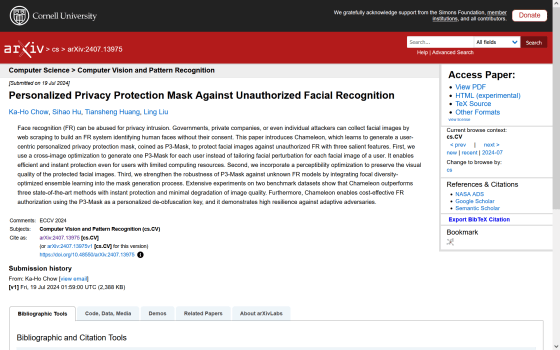

[2407.13975] Personalized Privacy Protection Mask Against Unauthorized Facial Recognition

AI Model Creates Invisible Digital Masks to Defend Against Unwanted Facial Recognition | Research

Meet 'Chameleon' – an AI model that can protect you from facial recognition thanks to a sophisticated digital mask | Live Science

https://www.livescience.com/technology/artificial-intelligence/meet-chameleon-an-ai-model-that-can-protect-you-from-facial-recognition-thanks-to-a-sophisticated-digital-mask

Facial recognition technology has become commonplace in everyday life; the iPhone's 'Face ID,' for example, unlocks the phone by recognizing its owner's face. However, there is a growing number of cases in which people are scanned without their permission and then identified by matching the scan against photos of them posted online, which could lead to stalking or even fraud.

To prevent such unauthorized facial recognition, researchers at the Georgia Institute of Technology developed an AI model called 'Chameleon,' which adds 'privacy masks' to facial photos to make facial recognition more difficult.

Chameleon uses a series of photos of a person's face to create a privacy mask called a 'P-3 mask.' Once the mask is applied, facial recognition can no longer link a photo to the person in it, so even if someone scans the photo, they will not be able to identify who it shows.

Applying privacy masks to images is not a new idea, but existing techniques often leave images blurry or otherwise degraded. Chameleon has several features that overcome these drawbacks.

The first is that a single P-3 mask is created for each person. Existing technologies create a separate privacy mask for every photo, which is inefficient. Creating one mask per person allows privacy to be protected instantly and makes efficient use of limited computing resources.
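The article does not describe how the P-3 mask is actually computed. As a rough illustration of the "one mask per person" idea only, here is a minimal Python sketch under loudly stated assumptions: a hypothetical linear "face recognizer" `embed` stands in for a real deep model, and the shared mask is found with projected sign-gradient ascent (a standard adversarial-perturbation technique, not necessarily Chameleon's) that pushes all of one person's photos away from their average embedding while keeping every mask value within ±`eps`. All names and parameters (`learn_person_mask`, `eps`, `step`, `iters`) are hypothetical.

```python
import random

# Hypothetical toy stand-in for a face recognizer: a fixed linear map from pixels
# to a small embedding. Real recognizers are deep networks; this is illustrative only.
def embed(W, x):
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def apply_mask(x, mask):
    # Add the shared mask and clip each pixel back into the valid [0, 1] range.
    return [min(1.0, max(0.0, xi + mi)) for xi, mi in zip(x, mask)]

def reference_embedding(W, photos):
    # The person's "identity" in this toy: mean embedding of their clean photos.
    clean = [embed(W, x) for x in photos]
    return [sum(col) / len(clean) for col in zip(*clean)]

def mean_sq_distance(W, photos, mask, ref):
    # Average squared distance between masked-photo embeddings and the identity.
    total = 0.0
    for x in photos:
        e = embed(W, apply_mask(x, mask))
        total += sum((a - b) ** 2 for a, b in zip(e, ref))
    return total / len(photos)

def learn_person_mask(W, photos, eps=0.05, step=0.01, iters=50, seed=0):
    """Hypothetical helper: learn ONE mask shared by all of a person's photos.

    Projected sign-gradient ascent on mean_sq_distance, starting from a random
    point in the [-eps, eps] box (a zero start has zero gradient in this toy).
    """
    rng = random.Random(seed)
    dim, ref = len(photos[0]), reference_embedding(W, photos)
    mask = [rng.uniform(-eps, eps) for _ in range(dim)]
    for _ in range(iters):
        grad = [0.0] * dim
        for x in photos:
            diff = [e - r for e, r in zip(embed(W, apply_mask(x, mask)), ref)]
            # Gradient of ||W(x+m) - ref||^2 is 2 W^T diff (pixel clipping ignored).
            for j in range(dim):
                grad[j] += 2 * sum(W[k][j] * diff[k] for k in range(len(W)))
        # Step along the sign of the gradient, then project back into the eps-box.
        mask = [max(-eps, min(eps, m + step * ((g > 0) - (g < 0))))
                for m, g in zip(mask, grad)]
    return mask

rng = random.Random(42)
W = [[rng.uniform(-1, 1) for _ in range(16)] for _ in range(4)]
photos = [[rng.uniform(0.2, 0.8) for _ in range(16)] for _ in range(5)]  # one person
mask = learn_person_mask(W, photos)
ref = reference_embedding(W, photos)
print(mean_sq_distance(W, photos, [0.0] * 16, ref), mean_sq_distance(W, photos, mask, ref))
```

Once `mask` exists, protecting one more photo of the same person is just `apply_mask(photo, mask)`, a single addition and clip per pixel, whereas a per-photo approach would rerun the whole optimization loop for every image.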

The second is a technique called 'perceptual optimization,' which automatically prevents the image degradation caused by adding privacy masks and preserves image quality.
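The article does not say how perceptual optimization works internally. One simple way to express the same goal, sketched here purely as an assumption, is to treat image quality as a constraint: measure similarity with a simplified whole-image SSIM (the standard SSIM formula, but without the usual sliding window) and binary-search for the largest mask strength that keeps similarity above a target. `global_ssim`, `fit_mask_to_quality`, and the 0.95 target are hypothetical choices, not Chameleon's.

```python
import random

def global_ssim(x, y, data_range=1.0):
    """Simplified SSIM over the whole image (no sliding window); 1.0 means identical."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    def cov(a, ma, b, mb):
        return sum((ai - ma) * (bi - mb) for ai, bi in zip(a, b)) / n
    vx, vy, cxy = cov(x, mx, x, mx), cov(y, my, y, my), cov(x, mx, y, my)
    c1, c2 = (0.01 * data_range) ** 2, (0.03 * data_range) ** 2
    return ((2 * mx * my + c1) * (2 * cxy + c2)) / ((mx * mx + my * my + c1) * (vx + vy + c2))

def apply_scaled_mask(x, mask, scale):
    # Add a scaled copy of the mask and clip pixels into [0, 1].
    return [min(1.0, max(0.0, xi + scale * mi)) for xi, mi in zip(x, mask)]

def fit_mask_to_quality(x, mask, target=0.95, iters=30):
    """Hypothetical helper: a scale in [0, 1] whose masked image keeps SSIM >= target."""
    if global_ssim(x, apply_scaled_mask(x, mask, 1.0)) >= target:
        return 1.0
    lo, hi = 0.0, 1.0  # invariant: scale `lo` always meets the target (scale 0 is the original)
    for _ in range(iters):
        mid = (lo + hi) / 2
        if global_ssim(x, apply_scaled_mask(x, mask, mid)) >= target:
            lo = mid
        else:
            hi = mid
    return lo

rng = random.Random(7)
photo = [rng.uniform(0.2, 0.8) for _ in range(64)]
mask = [rng.choice([-0.3, 0.3]) for _ in range(64)]  # deliberately strong, visible mask
s = fit_mask_to_quality(photo, mask, target=0.95)
print(s, global_ssim(photo, apply_scaled_mask(photo, mask, s)))
```

The binary search always returns a scale that meets the target; it is the largest such scale only if SSIM falls monotonically as the mask grows, which is common but not guaranteed. A real system would also have to confirm that the weakened mask still defeats recognition.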

'Privacy-preserving data sharing and analysis technologies like Chameleon can help promote the governance and responsible use of AI and stimulate science and innovation,' said Professor Ling Liu, who led the development of Chameleon.

'We want to use Chameleon to protect images used to train generative AI models,' said Tiansheng Huang, a doctoral student at Georgia Tech who helped develop Chameleon. 'We can prevent images from being used for training without consent.' He also expressed hope that Chameleon's techniques could be applied beyond protecting facial images.


Posted by log1p_kr