Pokémon GO developer Niantic unveils spatial computing platform, Niantic Spatial Platform

Niantic, the developer and operator of location-based games such as 'Pokémon GO' and 'Ingress,' has built a spatial computing platform called 'Niantic Spatial Platform' using the Visual Positioning System (VPS) data accumulated through operating those games. The company has also revealed that it is building a 'Large Geospatial Model (LGM)' that can navigate the physical world, based on data from more than 1 million locations obtained through the VPS.

Niantic Spatial Platform

Building a Large Geospatial Model to Achieve Spatial Intelligence – Niantic Labs

Pokémon Go Players Have Unwittingly Trained AI to Navigate the World

https://www.404media.co/pokemon-go-players-have-unwittingly-trained-ai-to-navigate-the-world/

Niantic uses Pokémon Go player data to build AI navigation system - Ars Technica

https://arstechnica.com/ai/2024/11/niantic-uses-pokemon-go-player-data-to-build-ai-navigation-system/

In developing location-based games such as 'Pokémon GO,' Niantic has collected detailed data on locations around the world, captured from many angles. Niantic describes the VPS as a system similar to GPS (Global Positioning System), and the data it has collected now covers 1 million locations worldwide. For example, a Pokémon can be placed in the real world with an accuracy of a few tens of centimeters.

Niantic Spatial Platform builds on this VPS data and is made up of 3D digital maps. The following video shows an example of its use.

Sphere XR | Remote Assistance with Niantic Spatial Technology - YouTube

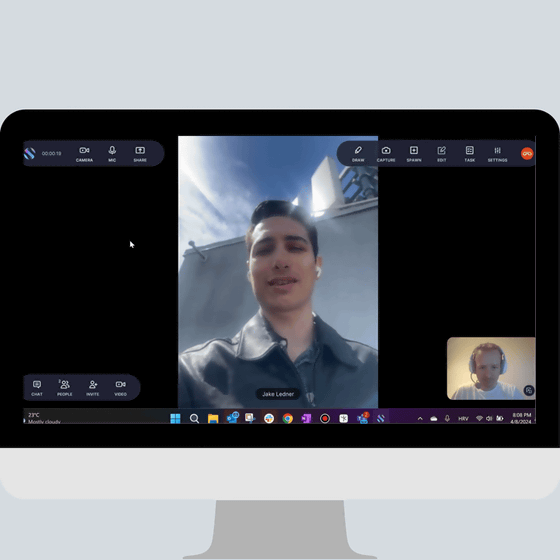

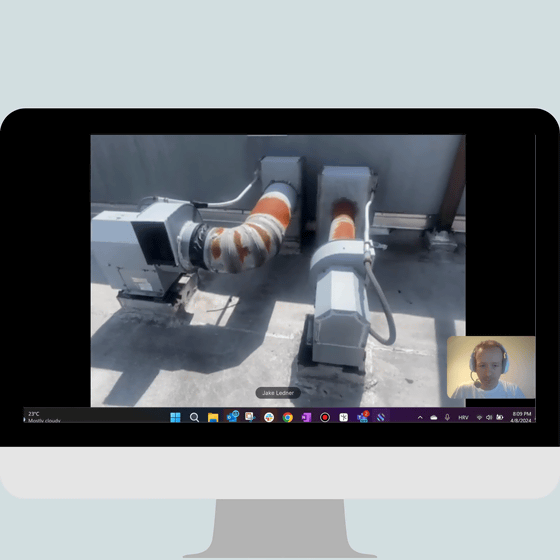

While the worker and the operator are on a call, the worker asks the operator which part needs to be worked on.

The scene looks like this.

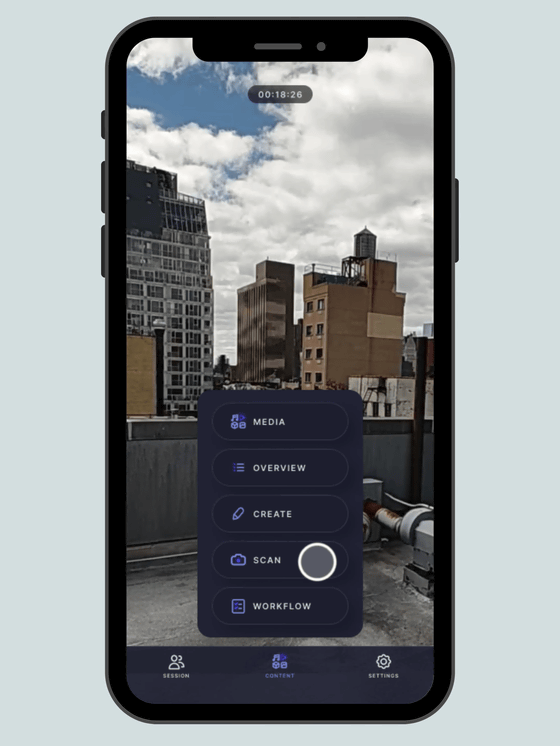

The worker launches the scanning function on a smartphone.

The worker points the camera at the subject and moves around it.

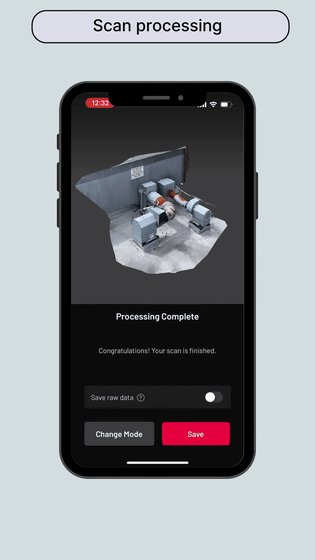

A scanned model of the site is generated.

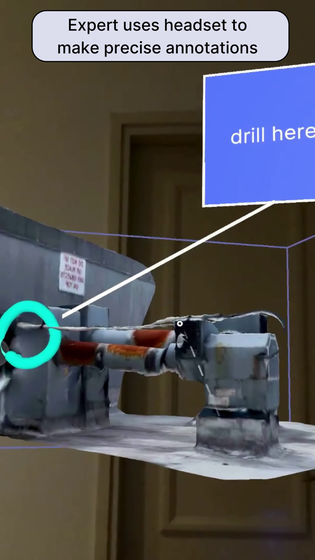

Next, the operator puts on the headset.

The operator then launches the annotation tool.

The operator marks the areas to be worked on in the scanned model that was just generated, then writes instructions such as 'Drill here.'

The information entered by the operator is then displayed on the worker's smartphone. Because the information is attached to the scanned model, not to a photograph, the orientation of the model changes as the operator moves.

This makes it easy for the worker to find the right spot and get to work.
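The idea that an annotation is attached to the scanned model rather than to a photograph can be illustrated with a minimal sketch. This is not Niantic's or Sphere XR's implementation; the `Annotation` and `CameraPose` types, the `project` function, and the camera parameters are all hypothetical names chosen for illustration. The annotation keeps one fixed position in model coordinates, and only its on-screen position is recomputed from the viewer's current pose.

```python
import math
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class Annotation:
    """A note pinned to a point in the scanned model (metres, model coordinates)."""
    label: str
    x: float
    y: float
    z: float


@dataclass(frozen=True)
class CameraPose:
    """Hypothetical viewer pose: position in model coordinates plus yaw (radians)
    about the vertical y axis. With yaw = 0 the camera looks along +z."""
    cx: float
    cy: float
    cz: float
    yaw: float


def project(a: Annotation, pose: CameraPose,
            focal_px: float = 800.0, width: int = 1280,
            height: int = 720) -> Optional[Tuple[float, float]]:
    """Project a model-space annotation into pixel coordinates (pinhole model).

    Returns None when the point is behind the camera.
    """
    # Express the point relative to the camera position.
    dx, dy, dz = a.x - pose.cx, a.y - pose.cy, a.z - pose.cz
    # Undo the camera's yaw so the point is in the camera frame
    # (x right, y up, z forward).
    c, s = math.cos(pose.yaw), math.sin(pose.yaw)
    xc = c * dx - s * dz
    yc = dy
    zc = s * dx + c * dz
    if zc <= 0:
        return None
    u = width / 2 + focal_px * xc / zc
    v = height / 2 - focal_px * yc / zc
    return (u, v)


# The same annotation seen from two viewer positions.
note = Annotation("Drill here", 0.0, 0.0, 2.0)
front = project(note, CameraPose(0.0, 0.0, 0.0, 0.0))
shifted = project(note, CameraPose(0.5, 0.0, 0.0, 0.0))
```

In this sketch the stored coordinates of `note` never change; moving the viewer half a metre to the right simply shifts where the label is drawn on screen, which is the behaviour described in the demonstration.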

This is only a demonstration, but Niantic is also working on building an LGM based on the VPS.

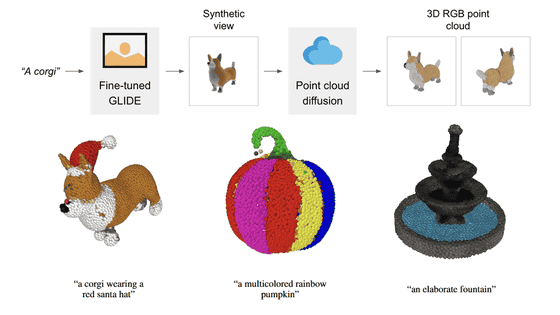

Large language models (LLMs) can understand and generate language, 2D vision models can understand and generate images, and 3D vision models that can model the three-dimensional appearance of objects have also appeared. An LGM goes a step beyond 3D vision models: it captures 3D entities with metric information tied to specific geographic locations, in effect a '3D map.' A 3D vision model can generate a 3D scene, but an LGM also understands where in the world that scene belongs.
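To make 'metric information tied to a geographic location' concrete, here is a small sketch that is an assumption for illustration only and says nothing about how Niantic's LGM works internally. A point measured in metres relative to a scanned location is converted to latitude and longitude using a flat-earth approximation around an anchor. The function name `local_to_geodetic` and the constant `EARTH_RADIUS_M` (mean Earth radius) are illustrative choices.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius; a spherical approximation


def local_to_geodetic(anchor_lat_deg: float, anchor_lon_deg: float,
                      east_m: float, north_m: float) -> tuple:
    """Convert a small east/north offset in metres from an anchor point
    into latitude/longitude in degrees.

    Uses a local flat-earth approximation, so it is only reasonable for
    offsets that are small compared with the Earth's radius and for
    anchors away from the poles.
    """
    lat0 = math.radians(anchor_lat_deg)
    # One radian of latitude spans EARTH_RADIUS_M metres north-south.
    dlat = north_m / EARTH_RADIUS_M
    # Circles of latitude shrink by cos(latitude) towards the poles.
    dlon = east_m / (EARTH_RADIUS_M * math.cos(lat0))
    return (anchor_lat_deg + math.degrees(dlat),
            anchor_lon_deg + math.degrees(dlon))


# A point 10 m east and 5 m north of a hypothetical anchor.
lat, lon = local_to_geodetic(35.0, 139.0, east_m=10.0, north_m=5.0)
```

A plain 3D model would stop at the metric offsets; attaching them to an anchor with known coordinates is what lets the same geometry answer the question of where in the world it sits.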

The VPS is built on data accumulated by players. Although there were posts on the social message board Reddit saying that 'many people noticed that Niantic's business model was not centered around supporting players,' there was no particular uproar about the use of the data.

in Video, Web Service, Posted by logc_nt