Researchers point out that OpenAI's transcription AI 'Whisper' hallucinates and fabricates sentences

OpenAI touts its transcription AI,

Researchers say AI transcription tool used in hospitals invents things no one ever said | AP News

https://apnews.com/article/ai-artificial-intelligence-health-business-90020cdf5fa16c79ca2e5b6c4c9bbb14

OpenAI's Whisper transcription tool has hallucination issues, researchers say | TechCrunch

https://techcrunch.com/2024/10/26/openais-whisper-transcription-tool-has-hallucination-issues-researchers-say/

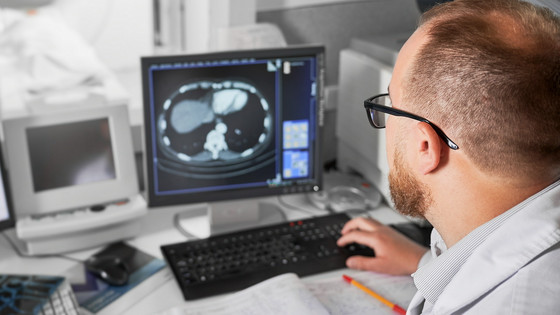

According to experts interviewed by the Associated Press, Whisper is used for a variety of purposes, including transcribing and translating interviews and creating subtitles for videos. The experts called it 'problematic' that such a widely used tool can hallucinate and fabricate text. It has also been revealed that some medical institutions use Whisper to transcribe doctors' consultations with patients, despite OpenAI's warning not to use Whisper in 'high-risk areas.'

Experts interviewed by the Associated Press said they frequently encounter Whisper hallucinations in their work. For example, a University of Michigan researcher studying public meetings reported that, before Whisper's AI model was updated, he found hallucinations in eight out of every 10 audio transcriptions he inspected.

A machine learning engineer reported that he used Whisper to transcribe more than 100 hours of audio and found hallucinations in about half of the transcripts. Yet another developer said he found hallucinations in nearly all of the 26,000 transcripts he created with Whisper.

Whisper's hallucinations can occur even when the audio is well recorded. In a recent study, computer scientists used Whisper to transcribe more than 13,000 audio samples with good sound quality and found 187 hallucinations among them.

Alondra Nelson, who will head

Whisper is also being used to create closed captions for people who are deaf or hard of hearing. This group is 'particularly at risk for mistranslation' because they have no way of identifying fabricated text, said Christian Vogler, a deaf scientist who oversees Gallaudet University's technology access program.

Such hallucinations are so prevalent that experts, advocates, and former OpenAI employees are calling on the U.S. government to consider regulating AI. William Saunders, who left OpenAI in February 2024 due to concerns about the company's direction, said, 'This is a problem that can be solved if companies are willing to prioritize it.' He added that it is problematic to release AI in this state when people become overconfident about what it can do and integrate it into all kinds of other systems, expressing concern about the rapid spread of AI.

The Associated Press contacted OpenAI about the matter, and a spokesperson for the company responded, 'We are continually researching ways to mitigate hallucination and appreciate the researchers' findings.' The spokesperson added that OpenAI incorporates feedback into updating its models.

Most of the developers interviewed by the AP said they expect transcription tools to make spelling mistakes and other errors, but that they have never seen another transcription AI that hallucinates as much as Whisper does.

In fact, Whisper's fabricated text includes describing speakers as 'black' in conversations where there is no mention of race, and inventing a non-existent drug called 'hyperactivated antibiotics.' It is unclear exactly why these fabrications occur, but software developers say that hallucinations tend to occur during pauses or when background sounds or music are playing.
