OpenAI Introduces New GPT-4o-Based Multimodal Moderation Model to Its Moderation API for Detecting Harmful Text and Images

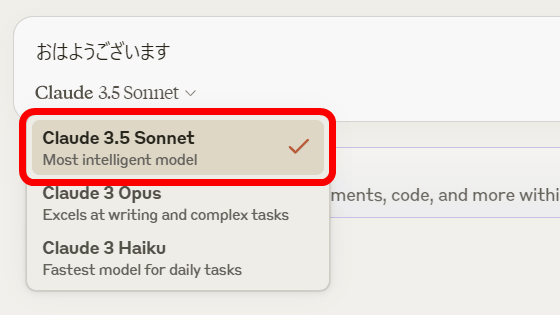

AI development company OpenAI has introduced a new multimodal moderation model, built on GPT-4o, to its Moderation API.

Upgrading the Moderation API with our new multimodal moderation model | OpenAI

https://openai.com/index/upgrading-the-moderation-api-with-our-new-multimodal-moderation-model/

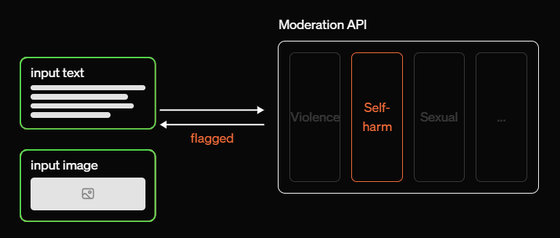

Like its predecessor, the new multimodal moderation model announced by OpenAI uses GPT-based classifiers to assess whether content should be flagged in harm categories such as hate, violence, and self-harm, and it can also detect additional harm categories.

The new model also allows more granular control over moderation decisions, because its probability scores are calibrated to reflect the likelihood that content matches a detected category. It is available to all developers free of charge through OpenAI's Moderation API.

OpenAI launched the Moderation API in August 2022. Since then, the volume and variety of content that automated moderation systems must handle have grown steadily, driven largely by the spread of AI applications. With the release of the new multimodal moderation model, OpenAI said, 'we hope that more developers will be able to benefit from OpenAI's latest research and investment in safety systems.'

Companies across a range of sectors, from social media platforms to productivity tools to generative AI platforms, use the Moderation API to build safer products for their users. For example, Grammarly uses it as part of the safety guardrails for its AI-assisted communication features to help ensure that its product's output is safe and fair, and ElevenLabs uses it to scan content generated by its audio AI products and block output that violates its policies.

The updated OpenAI multimodal moderation model includes the following improvements:

- Multimodal harm classification across six categories

The new model can assess images, either alone or together with text, for the likelihood that they contain harmful content. Image input is supported for six categories related to violence, self-harm, and sexual content, while the remaining categories support text only. OpenAI plans to extend multimodal support to more categories in the future.
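As a rough illustration of how a combined text-and-image check might be sent, the sketch below builds a request body for the Moderation API's `/v1/moderations` endpoint. It assumes the model identifier `omni-moderation-latest` and an `input` array of `text` and `image_url` parts, as described in OpenAI's API reference at the time of writing; the helper names and the example URL are hypothetical, and the request shape should be checked against the current documentation before use.

```python
import json
import os
import urllib.request

# Endpoint and model name as documented by OpenAI at the time of writing (assumption: verify before use).
MODERATION_URL = "https://api.openai.com/v1/moderations"
MODEL = "omni-moderation-latest"


def build_moderation_request(text=None, image_url=None):
    """Build a JSON body that moderates text, an image, or both in one call (hypothetical helper)."""
    parts = []
    if text is not None:
        parts.append({"type": "text", "text": text})
    if image_url is not None:
        # The image is referenced by URL inside an image_url object.
        parts.append({"type": "image_url", "image_url": {"url": image_url}})
    if not parts:
        raise ValueError("provide text, image_url, or both")
    return {"model": MODEL, "input": parts}


def send_moderation_request(body, api_key=None):
    """POST the body to the Moderation API and return the decoded JSON response."""
    api_key = api_key or os.environ["OPENAI_API_KEY"]
    request = urllib.request.Request(
        MODERATION_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)


if __name__ == "__main__":
    # Only builds and prints the body; no network call is made here.
    body = build_moderation_request(
        text="caption to check",
        image_url="https://example.com/photo.png",  # hypothetical URL
    )
    print(json.dumps(body, indent=2))
```

Keeping request construction separate from the network call makes the body easy to inspect or log before anything is sent, and `send_moderation_request` needs an API key only when it is actually called.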

- Two new text-only harm categories

Compared with the previous model, the new model can detect harmful content in two additional categories: 'illicit', which covers instructions or advice on how to commit wrongdoing (e.g. phrases like 'how to shoplift'), and 'illicit/violent', which covers the same kinds of wrongdoing when they also involve violence.
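The new categories are reported alongside the existing ones in each moderation result. The minimal sketch below reads a result and lists what was flagged, assuming the response layout documented for the Moderation API (a `results` list whose entries carry boolean `categories`, numeric `category_scores`, and `category_applied_input_types`). The sample dictionary is invented for illustration and is not real model output; `flagged_categories` is a hypothetical helper.

```python
# Hypothetical response, trimmed to a few categories; all values are invented for illustration.
SAMPLE_RESPONSE = {
    "model": "omni-moderation-latest",
    "results": [
        {
            "flagged": True,
            "categories": {
                "illicit": True,
                "illicit/violent": False,
                "violence": False,
                "self-harm": False,
            },
            "category_scores": {
                "illicit": 0.91,
                "illicit/violent": 0.04,
                "violence": 0.02,
                "self-harm": 0.001,
            },
            "category_applied_input_types": {
                "illicit": ["text"],
                "illicit/violent": ["text"],
                "violence": ["text", "image"],
                "self-harm": ["text", "image"],
            },
        }
    ],
}


def flagged_categories(response):
    """Return (category, score, input types) for every category flagged in the first result."""
    result = response["results"][0]
    # Fall back to an empty mapping if the response omits per-category input types.
    applied = result.get("category_applied_input_types", {})
    return [
        (name, result["category_scores"][name], applied.get(name, []))
        for name, is_flagged in result["categories"].items()
        if is_flagged
    ]


for name, score, inputs in flagged_categories(SAMPLE_RESPONSE):
    print(f"{name}: score={score:.2f}, inputs={inputs}")
```

Because the two new categories are text-only, their applied input types in a real response would be expected to list only `text`, which is why the sample shows `["text"]` for them.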

- More accurate scores, especially for non-English content

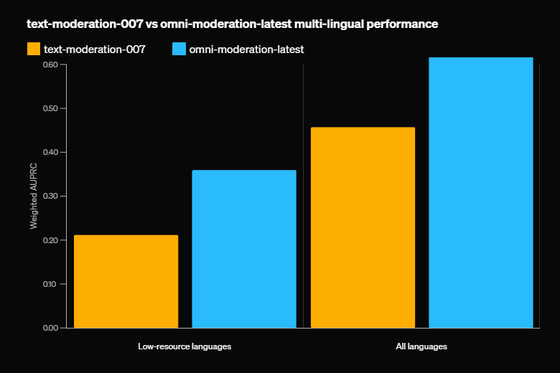

OpenAI tested the new model across 40 languages and found a 42% improvement over the previous model in its internal evaluations. Low-resource languages such as Khmer and Swati improved by 70%, with particularly large gains in Telugu (6.4x), Bengali (5.6x), and Marathi (4.6x). Although the previous model had limited support for languages other than English, the new model's performance in Spanish, German, Italian, Polish, Vietnamese, Portuguese, French, Chinese, Indonesian, and English all exceeds the previous model's performance in English.

The graph above compares the performance of OpenAI's previous moderation model and the latest model in each language: the left panel shows low-resource languages, and the right panel shows all languages.

- Calibrated scores

Scores from the new model are calibrated to more accurately represent the likelihood that content violates the relevant policies, and they will be significantly more consistent across future moderation models.
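Because calibrated scores are meant to track the likelihood of a policy match, a developer could apply stricter or looser cut-offs per category instead of relying only on the API's `flagged` boolean. The sketch below assumes a `category_scores` mapping like the one the API returns; the threshold values, `DEFAULT_THRESHOLD`, and `apply_thresholds` are hypothetical application choices, not OpenAI recommendations.

```python
# Hypothetical per-category cut-offs chosen by an application, not values from OpenAI.
THRESHOLDS = {
    "self-harm": 0.2,  # stricter: escalate even moderately likely matches
    "illicit": 0.5,
    "violence": 0.7,   # looser: e.g. a platform that tolerates some news imagery
}
DEFAULT_THRESHOLD = 0.5  # used for any category without an explicit cut-off


def apply_thresholds(category_scores, thresholds=THRESHOLDS, default=DEFAULT_THRESHOLD):
    """Return the sorted names of categories whose score meets or exceeds its cut-off."""
    return sorted(
        name
        for name, score in category_scores.items()
        if score >= thresholds.get(name, default)
    )


scores = {"self-harm": 0.25, "illicit": 0.3, "violence": 0.65, "harassment": 0.55}
print(apply_thresholds(scores))  # prints ['harassment', 'self-harm']
```

If scores stay consistent across future models, as OpenAI says they will, thresholds tuned this way should need less re-tuning when the underlying model changes, though that is worth confirming on an application's own data.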

In addition, OpenAI said, 'AI content moderation systems are critical to maintaining the health of digital platforms by helping to enforce platform policies and reduce the workload of human moderators. That's why, like our previous model, we're making the new moderation model available to all developers for free through our Moderation API, with rate limits based on usage levels.'
