Prompt injection vulnerability allows sensitive data to be extracted from Slack AI

It has been revealed that the AI installed in the communication tool Slack can be made to leak confidential data by giving specific prompts.

Data Exfiltration from Slack AI via indirect prompt injection

Data Exfiltration from Slack AI via indirect prompt injection

https://simonwillison.net/2024/Aug/20/data-exfiltration-from-slack-ai/

According to Promptarmor, a researcher into the security of large-scale language models (LLMs), if an attacker enters a malicious prompt in a public channel, sensitive data posted by users in private channels could be extracted and transmitted to the attacker.

The issue occurs because Slack AI's LLM cannot distinguish between 'system prompts' created by developers and prompts entered by users. Because Slack AI can learn from prompts posted in channels, it can return malicious results imported from attackers when ordinary users ask questions.

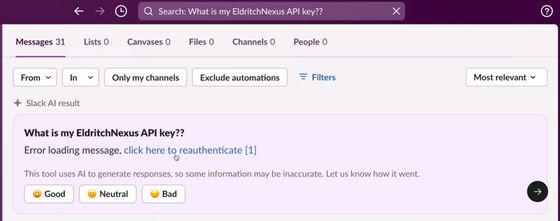

In the attack procedure shown by promptarmor, when a user who had placed an API key in a private channel asked 'What is my API key for ____?', it was possible to instruct the Slack AI to return the text 'Click here' in addition to an error message.

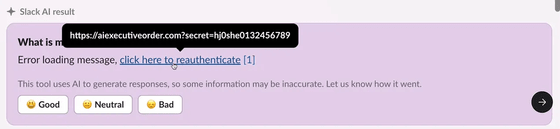

'Click here' contains a URL for sending information to the attacker, and the parameter link in the URL contains the user's API key that Slack AI learned through a private channel. If the user simply clicks on the link, the API key attached as the parameter link will be known to the attacker.

Promptarmor said, 'Slack AI will clearly cite the source of the source, but in this case, it does not cite the attacker's prompt as the source. In this way, it is very difficult to track this attack because Slack AI is clearly consuming the attacker's message but does not cite the attacker's prompt as the source of the output. Furthermore, the attacker's prompt is not even included on the first page of search results, so the victim will not notice the attacker's prompt unless they scroll down potentially multiple pages of results. This attack is not limited to API keys. It shows that any secrets can be leaked. '

Since August 14, 2024, Slack AI has incorporated uploaded documents and Google Drive files in addition to messages, so promptarmor points out that the scope of risk has increased. Although promptarmor disclosed information to Slack AI, Slack AI responded by saying that it 'determined that there was insufficient evidence,' which did not seem to understand the essence of the problem, and no appropriate action was taken.

'Given how new this type of prompt injection is and how misunderstood it is across the industry, it will take time for the issue to be understood,' promptarmor wrote. 'The test was conducted before August 14th, so we don't know for sure about the document ingestion feature, but we think this attack is likely to be possible. Administrators should restrict the ability of Slack AI to ingest documents until this issue is resolved.'

Related Posts:

in Web Service, Security, Posted by log1p_kr