Users criticize Meta AI for not answering questions about the assassination attempt on former President Trump; Meta attributes the problem to hallucinations

On July 14, 2024, Japan time, former President Donald Trump

Review of Fact-Checking Label and Meta AI Responses | Meta

https://about.fb.com/news/2024/07/review-of-fact-checking-label-and-meta-ai-responses/

Meta apologizes after its AI chatbot said Trump shooting didn't happen - The Verge

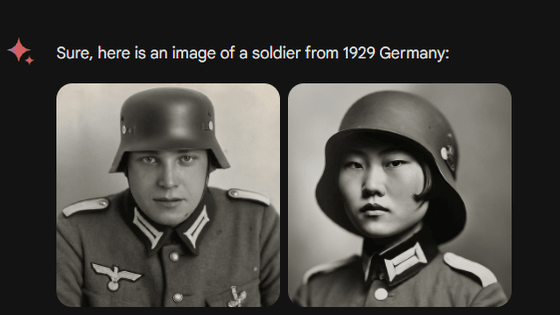

ChatGPT and other chat AIs explain things in natural language, but unlike Google Search or Wikipedia, they do not draw on a store of verified knowledge, so they sometimes present things that are untrue or implausible as if they were facts. This phenomenon is called 'hallucination.'

Most recently, a Trump supporter, @libsoftiktok, reported a case in which Meta AI, when asked about the assassination attempt on former President Trump, responded as if the incident had never occurred. @libsoftiktok commented, 'We're witnessing the suppression and coverup of one of the biggest most consequential stories in real time.' The post shows an exchange in which @libsoftiktok asks about the assassination attempt on Trump and the AI makes no mention of the shooting in Pennsylvania.

Meta AI won't give any details on the attempted ass*ss*nation.

— Libs of TikTok (@libsoftiktok) July 28, 2024

We're witnessing the suppression and coverup of one of the biggest most consequential stories in real time.

Simply unreal. pic.twitter.com/BoBLZILp5M

Meta has issued an official statement on the matter.

Meta said, 'First, it is a well-known problem that AI chatbots, including Meta AI, are not always reliable when it comes to breaking news or real-time information. Simply put, the models that drive chatbots generate responses based on their training data, which can cause problems when the AI is asked about real-time topics that arise after it was trained.'

Meta added, 'Rather than have Meta AI provide false information, we programmed it to not answer questions about the assassination attempt. This is why some people reported that the AI refused to talk about the incident. We have since updated the responses Meta AI provides about the assassination attempt, but we should have done this sooner. In a small number of cases, Meta AI even asserted that the assassination attempt did not occur. These types of answers are called hallucinations and are an industry-wide issue found in all generative AI systems. We are addressing these issues and will continue to improve our capabilities.'

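In addition, a subtly altered version of a photo taken shortly after the shooting was circulated, and Meta's system applied a fact-check label to it. Because the altered photo and the original were nearly identical, the system also applied the label to the real photo. Meta apologized for labeling the real photo with a fact-check label.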


in Software, Web Service, Posted by log1p_kr