Gemini 1.5 Pro with a 2-million-token context window is now open to all developers, and the open model Gemma 2 is now available

On June 28, 2024, Gemini 1.5 Pro with a context window of 2 million tokens was released to all developers. On the same day, Gemma 2, a large language model with parameter sizes of 9 billion (9B) and 27 billion (27B), was also released and became available through

Gemini 1.5 Pro 2M context window, code execution capabilities, and Gemma 2 are available today - Google Developers Blog

https://developers.googleblog.com/en/new-features-for-the-gemini-api-and-google-ai-studio/

Google launches Gemma 2, its next generation of open models

https://blog.google/technology/developers/google-gemma-2/

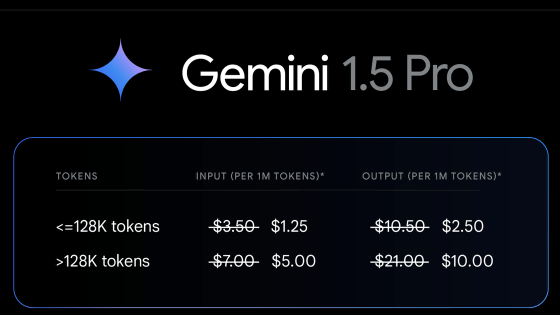

Gemini 1.5 Pro, announced in February 2024, was updated in May to double its context window from 1 million to 2 million tokens, but the larger window was offered only to users who had signed up for the waitlist in Google AI Studio or Vertex AI.

Since June 28, Gemini 1.5 Pro with the 2-million-token context window has been available to all developers.

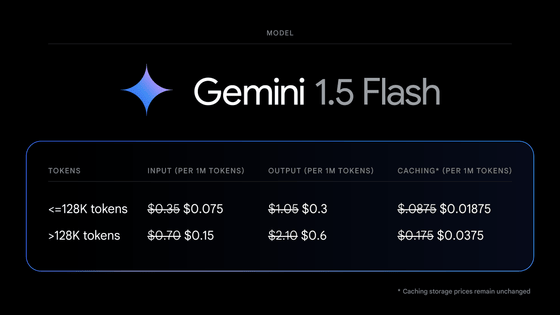

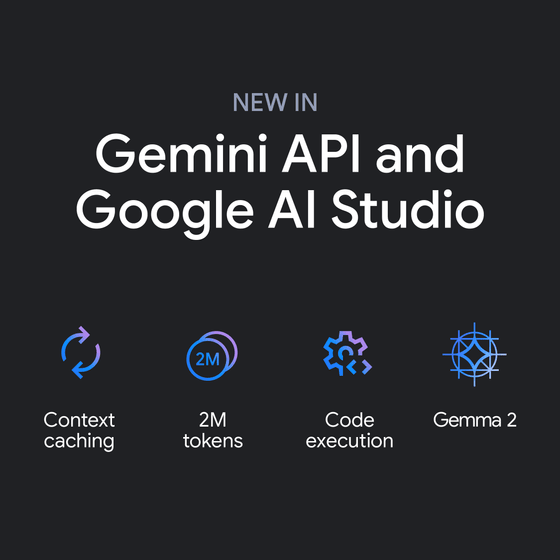

Because a larger context window also raises input costs, Google has introduced a cost-reduction mechanism called 'context caching.'
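As a rough illustration of why caching helps, the sketch below compares the input cost of repeatedly sending a large shared prefix (for example, a long document) with the cost when that prefix is cached after the first request. The prices are hypothetical placeholders, not Google's actual rates, and the model is deliberately simplified: it ignores any storage fee for keeping the cache alive.

```python
def cost_without_cache(shared_tokens, unique_tokens, requests, price_per_million):
    """Every request pays full price for the shared prefix plus its own tokens."""
    total_tokens = requests * (shared_tokens + unique_tokens)
    return total_tokens * price_per_million / 1_000_000


def cost_with_cache(shared_tokens, unique_tokens, requests,
                    price_per_million, cached_price_per_million):
    """First request pays full price; later requests pay a reduced rate
    for the cached prefix and the full rate for their own tokens."""
    first = (shared_tokens + unique_tokens) * price_per_million
    later = (requests - 1) * (shared_tokens * cached_price_per_million
                              + unique_tokens * price_per_million)
    return (first + later) / 1_000_000


# Hypothetical numbers, chosen only for illustration.
SHARED = 1_000_000      # tokens in the shared prefix (e.g. a long document)
UNIQUE = 1_000          # tokens specific to each request
REQUESTS = 10
PRICE = 4.0             # hypothetical cost units per million input tokens
CACHED_PRICE = 1.0      # hypothetical reduced rate for cached tokens

plain = cost_without_cache(SHARED, UNIQUE, REQUESTS, PRICE)
cached = cost_with_cache(SHARED, UNIQUE, REQUESTS, PRICE, CACHED_PRICE)
print(f"without caching: {plain:.2f} units, with caching: {cached:.2f} units")
```

The saving grows with the number of requests that reuse the same prefix, which is why the feature matters most for very long contexts.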

A code execution feature has also been added. When it is turned on, the model can use it dynamically to generate and run Python code, learning iteratively from the results until it reaches the desired final output. Developers are billed based on the output tokens from the model.

Code execution is available via the Gemini API and under 'Advanced settings' in Google AI Studio, and can be enabled for both Gemini 1.5 Pro and Gemini 1.5 Flash.

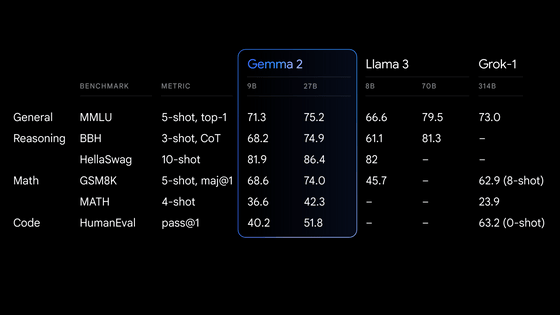

Gemma 2, released on the same day, is said to deliver higher performance and more efficient inference than the previous-generation Gemma, with the 27B model outperforming the 314B Grok and the 9B model outperforming the 8B Llama 3.

In addition, the 27B Gemma 2 is designed to efficiently run inference on a single Google Cloud TPU host, NVIDIA A100 80GB Tensor Core GPU, or NVIDIA H100 Tensor Core GPU, significantly reducing costs while maintaining high performance. This makes it even more accessible, and Google says that 'it will be possible to introduce AI to fit any budget.'
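A back-of-the-envelope calculation shows why a 27B model can fit on a single 80GB accelerator. The sketch below assumes the nominal parameter counts (27 billion and 9 billion) and counts only the memory needed for the weights; activations and the KV cache add to this in practice, so the figures are a lower bound rather than a sizing guide.

```python
# Bytes needed to store one parameter in each numeric format.
BYTES_PER_PARAM = {"float32": 4, "bfloat16": 2, "int8": 1}


def weight_memory_gb(num_params, dtype="bfloat16"):
    """Memory for the model weights alone, in gigabytes (10^9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


def weights_fit(num_params, device_memory_gb, dtype="bfloat16"):
    """True if the weights alone fit in the given device memory."""
    return weight_memory_gb(num_params, dtype) <= device_memory_gb


GEMMA2_27B = 27_000_000_000  # nominal parameter count
GEMMA2_9B = 9_000_000_000    # nominal parameter count

for name, params in [("27B", GEMMA2_27B), ("9B", GEMMA2_9B)]:
    for dtype in ("float32", "bfloat16"):
        gb = weight_memory_gb(params, dtype)
        ok = weights_fit(params, 80, dtype)
        print(f"Gemma 2 {name} in {dtype}: {gb:.0f} GB, fits in 80 GB: {ok}")
```

Under these assumptions, the 27B weights need about 54 GB in bfloat16, which leaves headroom on an 80GB device, whereas float32 weights (about 108 GB) would not fit.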

The Gemma 2 models are also available in Google AI Studio. Google said, 'Try Gemma 2 at full precision in Google AI Studio, or on your home computer with an NVIDIA RTX or GeForce RTX via Hugging Face Transformers.'
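For readers who want to try the Hugging Face Transformers route, a minimal sketch might look like the following. It assumes the `google/gemma-2-9b-it` checkpoint on the Hugging Face Hub (access requires accepting the model's license there), a recent `transformers` release with Gemma 2 support, and the `torch` and `accelerate` packages; `build_messages` and `generate` are hypothetical helper names introduced here, not part of any library.

```python
def build_messages(prompt: str) -> list:
    """Wrap a single user prompt in the chat-message format
    accepted by the text-generation pipeline."""
    return [{"role": "user", "content": prompt}]


def generate(prompt: str,
             model_id: str = "google/gemma-2-9b-it",  # assumed checkpoint name
             max_new_tokens: int = 128) -> str:
    """Load the model and return its reply to one prompt.

    Heavy dependencies are imported lazily so that build_messages
    can be used without them installed.
    """
    import torch
    from transformers import pipeline

    pipe = pipeline(
        "text-generation",
        model=model_id,
        torch_dtype=torch.bfloat16,  # half the memory of float32
        device_map="auto",           # needs the accelerate package
    )
    outputs = pipe(build_messages(prompt), max_new_tokens=max_new_tokens)
    # With chat-style input, generated_text holds the whole conversation;
    # the last entry is the model's reply.
    return outputs[0]["generated_text"][-1]["content"]
```

Calling `generate("Explain context windows in one sentence.")` downloads the weights on first use, so expect a large download and a GPU with enough memory for the chosen checkpoint.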

Gemma 2 is provided under a license that permits commercial use.

in Software, Posted by log1p_kr