Former OpenAI Chief Scientist Ilya Sutskever Founds AI Company Safe Superintelligence

Ilya Sutskever, co-founder and former chief scientist of OpenAI, the AI pioneer known for developing ChatGPT, has announced the launch of a new AI company called Safe Superintelligence. Sutskever is believed to have been the person behind the dismissal of CEO Sam Altman in November 2023.

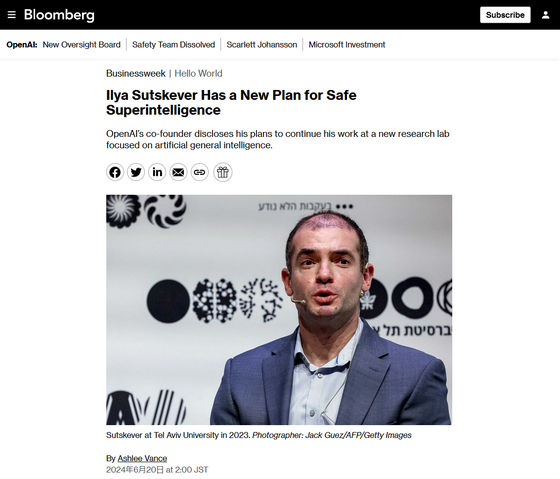

OpenAI Co-founder Plans New AI Focused Research Lab - Bloomberg

https://www.bloomberg.com/news/articles/2024-06-19/openai-co-founder-plans-new-ai-focused-research-lab

Ilya Sutskever, former chief scientist at OpenAI, launches new AI company | TechCrunch

https://techcrunch.com/2024/06/19/ilya-sutskever-openais-former-chief-scientist-launches-new-ai-company/

OpenAI's former chief scientist starts new AI company - The Verge

https://www.theverge.com/2024/6/19/24181870/openai-former-chief-scientist-ilya-sutskever-ssi-safe-superintelligence

The former OpenAI chief scientist has co-founded a new company, Safe Superintelligence Inc. - Neowin

https://www.neowin.net/news/the-former-openai-chief-scientist-has-co-founded-a-new-company-safe-superintelligence-inc/

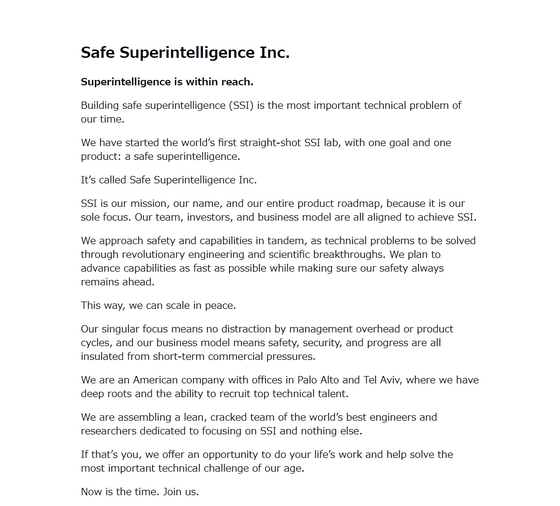

The official Safe Superintelligence website is already up and running, with the following message:

Building Safe Superintelligence (SSI) is the most important technological challenge of our time. We launched the world's first straight-shot SSI lab with one goal and one product: SSI. The name of the company is Safe Superintelligence. SSI is our mission, our name, and our entire product roadmap, because it is our sole focus.

Our team, investors, and business model are all aligned towards achieving SSI. We see safety and functionality simultaneously as technical problems to be solved through innovative engineering and scientific advances. We plan to improve functionality as quickly as possible, while always keeping safety first. This allows us to scale peacefully. We are single-mindedly focused, not bogged down in administrative overhead and product cycles. And our business model protects safety, security, and progress, all from short-term commercial pressures.

We are a US company with offices in Palo Alto, California and Tel Aviv, Israel, with deep roots in the region and the ability to recruit and hire the best technologists. We are solely focused on SSI and have assembled an elite team of the best engineers and researchers in the world. If you are the best engineer or researcher in the world, we offer you the opportunity to do your life's work and contribute to solving the most important technological challenges of our time. The time is now. Join us.

Safe Superintelligence Inc.

https://ssi.inc/

Safe Superintelligence also has an official X (formerly Twitter) account, where it posted a similar message.

Superintelligence is within reach.

Building safe superintelligence (SSI) is the most important technical problem of our time.

We've started the world's first straight-shot SSI lab, with one goal and one product: a safe superintelligence.

It's called Safe Superintelligence...

— SSI Inc. (@ssi) June 19, 2024

'I'm starting a new company,' Sutskever wrote on his X account, quoting the post from Safe Superintelligence.

I am starting a new company: https://t.co/BG3K3SI3A1

— Ilya Sutskever (@ilyasut) June 19, 2024

In addition to Sutskever, Safe Superintelligence's founders include Daniel Gross, an American entrepreneur who co-founded the search engine Cue, led Apple's AI efforts, and was a partner at Y Combinator, and Daniel Levy, a former engineer at OpenAI.

At OpenAI, Sutskever was an essential figure in the effort to improve AI safety. He led the 'Superalignment' team together with Jan Leike, working to ensure the safety of AI, but the team was dissolved after Sutskever left OpenAI.

OpenAI's 'Super Alignment' team, which was researching the control and safety of superintelligence, has been disbanded, with a former executive saying 'flashy products are being prioritized over safety' - GIGAZINE

By Jernej Furman

OpenAI launched as a nonprofit in 2015, but was forced to change course after it became clear that it needed huge amounts of money to pay for computing power. Sutskever's Safe Superintelligence is set up as a for-profit company from the start, so 'we face a lot of problems, but fundraising won't be one of them,' co-founder Gross told Bloomberg.

in Note, Posted by logu_ii