What are the 'sites that don't support slow connections' that were discovered when using the Internet from Antarctica?

Engineering for Slow Internet

https://brr.fyi/posts/engineering-for-slow-internet

In Antarctica, internet access is provided by satellite, so an entire base shares a connection of just a few tens of Mbps. The situation improved at some bases when Starlink service arrived in 2023. Even so, the total bandwidth shared by the roughly 1,000 people at the base was less than what a single person gets on a typical 4G connection in a developed country.

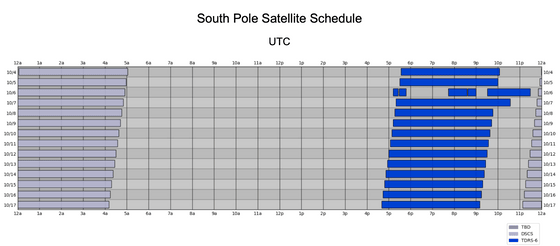

As of the end of 2023, Amundsen-Scott South Pole Station could not use Starlink. It could connect to the internet for only a few hours each day, while its satellites were above the horizon. Because those satellites orbit tens of thousands of kilometers above the Earth, latency is severe: a round trip to a typical website takes about 750 ms.
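Physics alone accounts for most of that 750 ms. Here is a rough lower bound, assuming for illustration the shortest possible path: straight up to a satellite at geosynchronous altitude (about 35,786 km) and straight back down. A request and its response each make the trip up and down once, so the signal covers four times that altitude at the speed of light, $c \approx 299{,}792$ km/s:

$$
t_{\text{RTT}} \geq \frac{4 \times 35{,}786\ \text{km}}{299{,}792\ \text{km/s}} \approx 0.477\ \text{s}
$$

Real paths are longer because, seen from the South Pole, the satellites sit low on the horizon, so the slant range exceeds the altitude. Ground routing, queuing, and congestion add further delay on top of this bound.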

brr summarized the communications environment in Antarctica as follows:

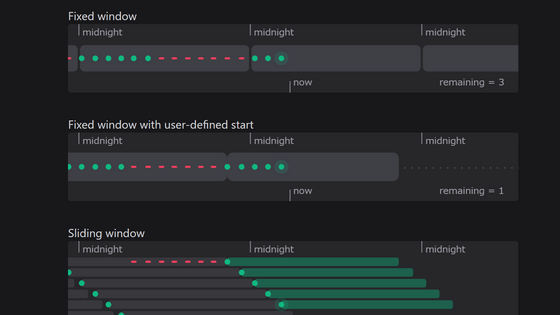

- Round-trip latency averages about 750 ms, and jitter between packets can exceed several seconds.

- End-user devices get only a few kbps, and up to 2 Mbps on a good day.

- The link is extremely congested, far more than other providers' networks, so packet delays and drops are frequent.

- Disconnections are frequent, and essential base communications may take priority over other traffic.

Browsing the web in such an environment is inherently difficult. Even so, brr says much of the impact on end users is 'caused by web engineers who don't anticipate access from slower, lower-quality internet.' He goes on to point out the following design characteristics that make sites hard to use from Antarctica:

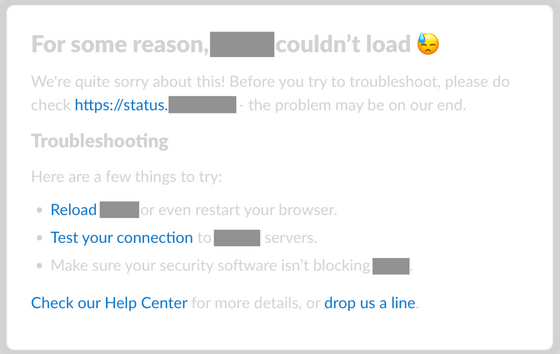

The first design issue brr cited was hard-coded timeouts. When brr tried to open a chat site, it needed to load about 20 MB of JavaScript. The browser itself copes well with slow connections and would have loaded even that much data eventually, just slowly.

However, the developer of the site brr was trying to access had implemented a custom timeout mechanism. When the timeout was triggered, the site would redirect to an error page, discard any data currently being loaded, and invalidate the cache.
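A back-of-the-envelope calculation with the figures above shows why a fixed timeout fails here. Treat 20 MB as $20 \times 10^6$ bytes, or $1.6 \times 10^8$ bits. The 5 kbps case below is an illustrative value for "a few kbps", not a figure from brr. With those numbers, the JavaScript alone needs:

$$
t_{2\,\text{Mbps}} = \frac{1.6 \times 10^{8}\ \text{bit}}{2 \times 10^{6}\ \text{bit/s}} = 80\ \text{s},
\qquad
t_{5\,\text{kbps}} = \frac{1.6 \times 10^{8}\ \text{bit}}{5 \times 10^{3}\ \text{bit/s}} = 32{,}000\ \text{s} \approx 8.9\ \text{h}
$$

Any client-side timeout shorter than these times aborts the load. If the site also discards partial data and invalidates the cache on timeout, every retry starts again from zero.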

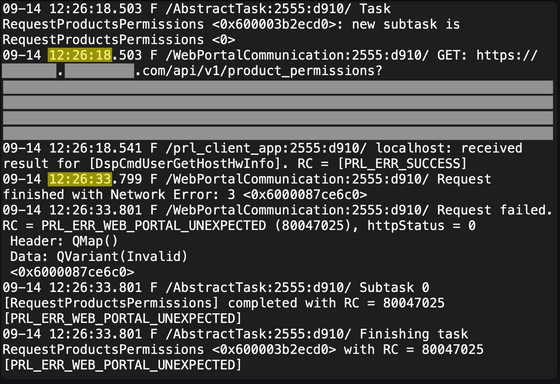

brr ran into a similar issue while trying to figure out why a desktop application wouldn't launch: it kept failing to load because of a hard-coded timeout value.

brr suggests progressively lengthening the timeout, telling users when a timeout has occurred, or letting users download and install the necessary data manually.
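The first two suggestions can be combined in a few lines. The following is a minimal sketch, not brr's code. It assumes a hypothetical `fetch(timeout)` callable that raises `TimeoutError` when a request does not finish in time. The function name `fetch_with_growing_timeout` and the defaults (start at 30 s, double each time, five attempts) are illustrative choices, not values from the post.

```python
from typing import Callable, TypeVar

T = TypeVar("T")


def fetch_with_growing_timeout(
    fetch: Callable[[float], T],
    initial_timeout: float = 30.0,
    factor: float = 2.0,
    max_attempts: int = 5,
    notify: Callable[[str], None] = print,
) -> T:
    """Retry fetch(timeout), multiplying the timeout by `factor` after each TimeoutError.

    Hypothetical helper: `fetch` should perform the request and raise TimeoutError
    if it does not finish within `timeout` seconds. Every timeout is reported
    through `notify` so the user can see what is happening instead of a silent failure.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    timeout = initial_timeout
    for attempt in range(1, max_attempts + 1):
        try:
            return fetch(timeout)
        except TimeoutError:
            if attempt == max_attempts:
                notify(f"Gave up after {attempt} attempts (last timeout {timeout:.0f} s).")
                raise
            notify(f"Attempt {attempt} timed out after {timeout:.0f} s; "
                   f"retrying with {timeout * factor:.0f} s.")
            timeout *= factor
    raise AssertionError("unreachable")


if __name__ == "__main__":
    def slow_fetch(timeout: float) -> str:
        # Simulated link: this transfer needs 100 s, so short timeouts always fail.
        if timeout < 100.0:
            raise TimeoutError
        return "payload"

    print(fetch_with_growing_timeout(slow_fetch, initial_timeout=30.0))
```

With these settings, the simulated fetch times out at 30 s and 60 s and succeeds on the third attempt at 120 s. A real `fetch` should ideally keep any data already received between attempts rather than discarding it, which was the other half of brr's complaint.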

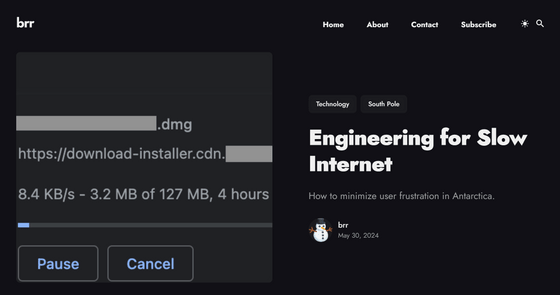

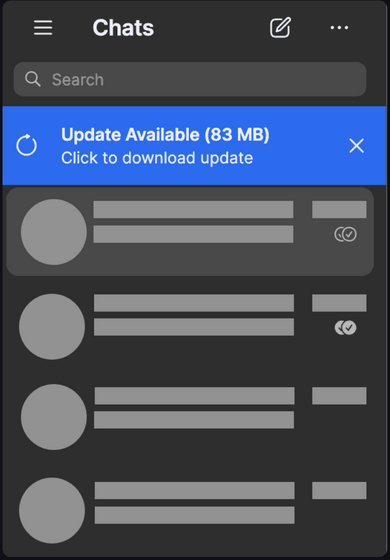

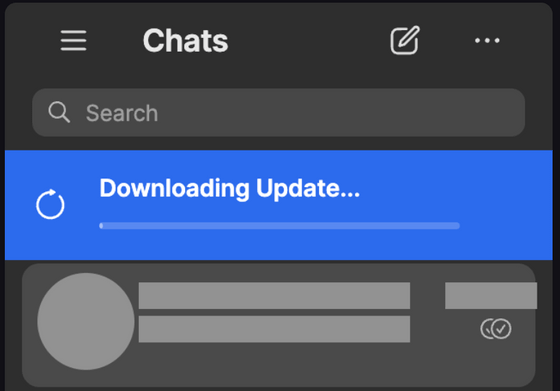

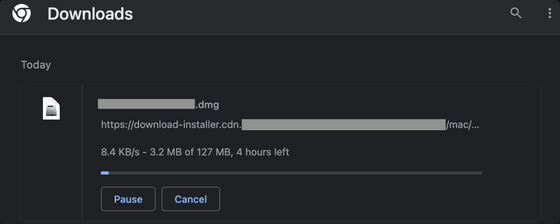

Another issue brr raised is apps that ship with their own built-in downloaders, like the one shown in the image.

In-app downloaders are often weak at pausing and resuming, reporting status, retrying, and tracking progress. Many are also designed to cancel the download if it does not finish within a fixed time.
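Pause and resume is the feature these downloaders most often get wrong. Over HTTP, it is usually done with a byte-range request: the client checks how much of the file it already has and asks the server for only the rest. The following is a minimal sketch of that standard technique using Python's `urllib`, not something described in brr's post. The helper names `build_resume_request` and `resume_download` are hypothetical. It also assumes the server supports `Range` requests.

```python
import os
import urllib.request


def build_resume_request(url: str, partial_path: str) -> urllib.request.Request:
    """Hypothetical helper: build a GET that asks only for bytes not yet saved in partial_path."""
    offset = os.path.getsize(partial_path) if os.path.exists(partial_path) else 0
    request = urllib.request.Request(url)
    if offset > 0:
        # "bytes=N-" asks for everything from byte N to the end of the file.
        request.add_header("Range", f"bytes={offset}-")
    return request


def resume_download(url: str, partial_path: str, chunk_size: int = 64 * 1024) -> None:
    """Hypothetical helper: fetch the rest of the file and write it to partial_path.

    A 416 response (file already complete) surfaces as urllib.error.HTTPError
    and is not handled in this sketch.
    """
    request = build_resume_request(url, partial_path)
    with urllib.request.urlopen(request) as response:
        # 206 Partial Content: the server honoured the range, so append to the file.
        # 200 OK: the server sent the whole file, so overwrite and start over.
        mode = "ab" if response.status == 206 else "wb"
        with open(partial_path, mode) as out:
            while True:
                chunk = response.read(chunk_size)
                if not chunk:
                    break
                out.write(chunk)
```

Because progress lives in the partial file on disk, an interrupted download can pick up where it stopped on the next attempt instead of starting from zero.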

brr responded to this issue by saying, 'You should provide a link to the file so that users can use their own downloader.' With a link, users can use a powerful downloader such as a browser, share downloaded files across multiple devices, and flexibly schedule downloads.

In his post, brr stresses that he is not asking developers to spend a huge amount of time optimizing for edge cases like Antarctica. He only wants them to recognize design choices that can become big problems in places with slow internet.


in Web Service, Web Application, Posted by log1d_ts